Python News: Mastering High-Performance Development with Async, FastAPI, and Advanced Profiling

The Python ecosystem is evolving at a breakneck pace, with new libraries, frameworks, and methodologies emerging constantly. For developers aiming to build scalable, resilient, and high-performance applications, staying current with the latest Python news and trends is not just beneficial; it is essential. The conversation has shifted from simply writing functional code to engineering systems that can handle massive concurrency, process real-time data streams, and respond with millisecond latency. This requires a modern toolkit and a deep understanding of the underlying principles that drive performance.

This article serves as a technical deep dive into three pivotal areas shaping modern Python development today. We will explore the paradigm shift driven by asynchronous programming for I/O-bound tasks, dissect the meteoric rise of FastAPI for building blazing-fast APIs, and uncover advanced profiling techniques that move beyond simple debugging to pinpoint and eliminate performance bottlenecks. By the end, you’ll have a comprehensive understanding and practical, actionable code examples to leverage these powerful trends in your own projects, ensuring your skills remain sharp and your applications perform at their peak.

The Asynchronous Revolution: Conquering I/O with Modern Python

For years, Python’s Global Interpreter Lock (GIL) was seen as a limitation for concurrent programming. However, the maturation of the asyncio library and the widespread adoption of the async/await syntax have fundamentally changed the landscape for I/O-bound applications. These are applications where the program spends most of its time waiting for external resources like network requests, database queries, or file operations to complete. Asynchronous programming allows a single-threaded, single-process application to handle thousands of concurrent connections efficiently.

Why Async Matters Now More Than Ever

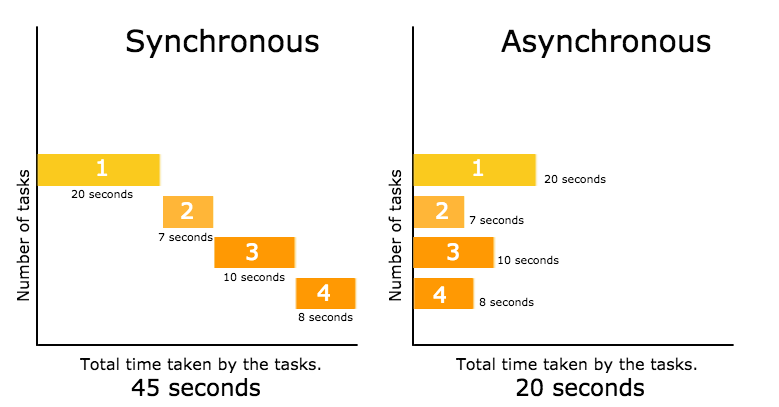

In a traditional synchronous model, when your code makes a network request, the entire thread blocks: it sits idle until the response arrives and can do no other work in the meantime. When one thread has to serve many connections, that idle time adds up quickly. Asynchronous programming, through an event loop, allows the program to “pause” a task that is waiting for I/O and switch to another task that is ready to do work. When the I/O operation completes, the event loop schedules the original task to resume where it left off. This cooperative multitasking model can substantially increase throughput for I/O-heavy applications like web servers, data streaming clients, and API gateways.
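To see the event loop's cooperative switching in action, here is a minimal sketch that uses asyncio.sleep as a stand-in for real network I/O (an assumption made so the example runs anywhere; in practice this would be an HTTP or database call). The names fake_request, run_sequentially, run_concurrently, and timed are illustrative. Three simulated requests awaited one after another take roughly the sum of their delays, while asyncio.gather lets them wait concurrently, so the total is close to the longest single delay.

```python
import asyncio
import time


async def fake_request(name: str, delay: float) -> str:
    # asyncio.sleep stands in for a network call; while this coroutine
    # is suspended here, the event loop is free to run the others.
    await asyncio.sleep(delay)
    return f"{name} done"


async def run_sequentially(delays: list[float]) -> list[str]:
    # Each await finishes before the next request even starts.
    return [await fake_request(f"req{i}", d) for i, d in enumerate(delays)]


async def run_concurrently(delays: list[float]) -> list[str]:
    # gather schedules all coroutines at once and waits for all of them;
    # results come back in the same order as the inputs.
    return await asyncio.gather(
        *(fake_request(f"req{i}", d) for i, d in enumerate(delays))
    )


def timed(coro_fn, delays):
    # Run one of the coroutines above and measure wall-clock time.
    start = time.perf_counter()
    results = asyncio.run(coro_fn(delays))
    return results, time.perf_counter() - start


if __name__ == "__main__":
    delays = [0.5, 0.5, 0.5]
    _, seq = timed(run_sequentially, delays)
    _, conc = timed(run_concurrently, delays)
    print(f"sequential: {seq:.2f}s, concurrent: {conc:.2f}s")
```

The only difference between the two functions is whether the coroutines are awaited one at a time or handed to the event loop together; no threads are involved in either case.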

Practical Example: High-Speed Data Ingestion with aiokafka

Apache Kafka is a dominant platform for real-time data streaming. While libraries like kafka-python have served the community well, their APIs are synchronous, so calling them from a coroutine can block the event loop. Async-native clients like aiokafka instead integrate with asyncio, producing and consuming messages without blocking other coroutines. That makes them a natural fit for asyncio-based microservices that need to ingest or process high-volume data streams.

Here’s how you can create a minimal asynchronous Kafka producer:

```python
import asyncio

from aiokafka import AIOKafkaProducer


async def send_messages():
    # It's best practice to configure your Kafka broker endpoint
    # via environment variables or a config file.
    producer = AIOKafkaProducer(bootstrap_servers='localhost:9092')

    # The producer must be started before use.
    await producer.start()
    try:
        # Send 100 messages to the 'my-topic' topic
        for i in range(100):
            message = f"Message number {i}".encode("utf-8")
            print(f"Sending: {message.decode()}")
            # send_and_wait() is a coroutine, so we await its completion.
            # This ensures the message is acknowledged by the broker.
            await producer.send_and_wait("my-topic", message)
            await asyncio.sleep(0.1)  # Simulate some work
    finally:
        # Ensure the producer is stopped to clean up connections.
        print("Stopping producer...")
        await producer.stop()


if __name__ == "__main__":
    # Run the main async function
    asyncio.run(send_messages())
```

In this example, await producer.send_and_wait(...) pauses the send_messages function until Kafka acknowledges the message, but the Python process itself is free to run other concurrent async tasks if any were scheduled. This is profoundly different from a synchronous call, which would halt the entire thread.
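The claim that other tasks keep running while send_and_wait() is pending can be checked without a Kafka broker. The sketch below swaps in a hypothetical FakeProducer whose send_and_wait() simply awaits asyncio.sleep to mimic waiting for an acknowledgement; it is not aiokafka's implementation, only a stand-in with the same call shape. A second, illustrative heartbeat task runs alongside it, and the shared event log shows the two interleaving on a single thread.

```python
import asyncio


class FakeProducer:
    """Hypothetical stand-in for AIOKafkaProducer; no broker involved."""

    def __init__(self, log: list[str]) -> None:
        self.log = log

    async def send_and_wait(self, topic: str, value: bytes) -> None:
        # Simulate the round trip to the broker and its acknowledgement.
        await asyncio.sleep(0.05)
        self.log.append(f"ack {value.decode()}")


async def produce(producer: FakeProducer, count: int) -> None:
    for i in range(count):
        await producer.send_and_wait("my-topic", f"msg{i}".encode("utf-8"))


async def heartbeat(log: list[str], beats: int) -> None:
    # A second task that keeps making progress while produce() waits.
    for i in range(beats):
        log.append(f"beat {i}")
        await asyncio.sleep(0.03)


async def main() -> list[str]:
    log: list[str] = []
    await asyncio.gather(produce(FakeProducer(log), 3), heartbeat(log, 4))
    return log


if __name__ == "__main__":
    for event in asyncio.run(main()):
        print(event)
```

Heartbeat entries appear between the acknowledgements, which is exactly the behavior a blocking client would prevent.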

Building Blazing-Fast APIs: The FastAPI Paradigm Shift

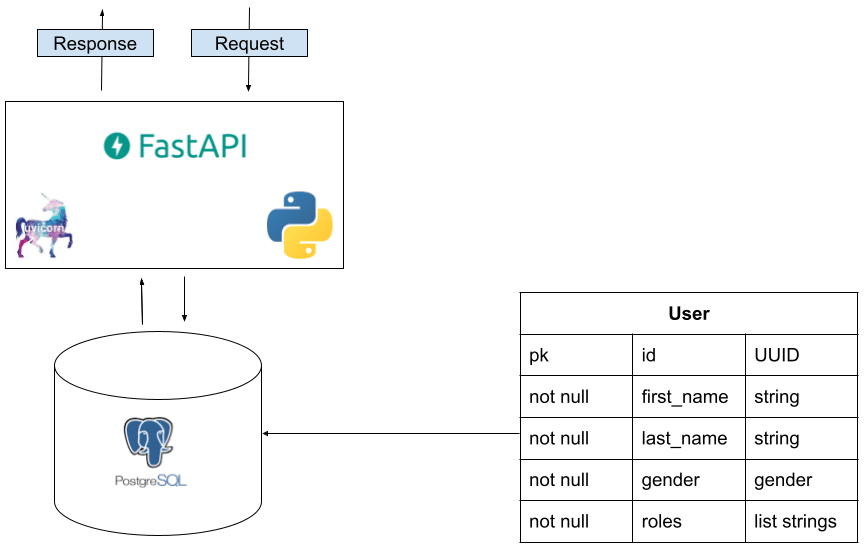

The world of Python web frameworks has been dominated by giants like Django and Flask for over a decade. However, the need for higher performance and better developer ergonomics paved the way for FastAPI, which has seen a meteoric rise in popularity. It’s not just another framework; it represents a new way of thinking about API development in Python.

What Makes FastAPI a Game-Changer?

FastAPI’s success is built on a trifecta of powerful features:

- High Performance: It is built on top of Starlette (an ASGI framework) and is typically served by Uvicorn (an ASGI server). FastAPI's own documentation describes its performance as on par with NodeJS and Go, particularly for I/O-bound workloads.

- Native Async Support: API endpoint functions can be declared with async def, allowing you to seamlessly integrate asynchronous libraries for database access (asyncpg, motor) or HTTP requests (httpx).

- Automatic Data Validation and Documentation: By leveraging Python type hints and the Pydantic library, FastAPI automatically validates incoming request data, serializes outgoing data, and generates interactive API documentation (Swagger UI and ReDoc). This cuts down on boilerplate code and on a whole class of input-handling bugs.

Practical Example: A High-Performance Data Endpoint

Let’s create a simple API endpoint that accepts user data, validates it, and returns a confirmation. Notice how little code is required to get a fully-featured, high-performance endpoint.

```python
from fastapi import FastAPI
from pydantic import BaseModel, EmailStr  # EmailStr needs: pip install "pydantic[email]"
import uvicorn

# Initialize the FastAPI app
app = FastAPI()


# Define a Pydantic model for request body validation.
# This model specifies the structure and types of the expected data.
class User(BaseModel):
    username: str
    email: EmailStr  # Pydantic provides special types for validation
    full_name: str | None = None  # Optional field with a default of None (Python 3.10+ syntax)


# Define an async API endpoint using a path operation decorator
@app.post("/users/")
async def create_user(user: User):
    """
    Creates a new user. FastAPI will automatically:
    1. Read the JSON body of the POST request.
    2. Validate its structure and types against the User model.
    3. If invalid, return a 422 Unprocessable Entity error with details.
    4. If valid, pass the parsed data as the 'user' argument.
    """
    print(f"Creating user: {user.username}")
    # In a real application, you would save the user to a database here.
    # e.g., await db.create_user(user_data=user.model_dump())
    # model_dump() is the Pydantic v2 name; Pydantic v1 called it .dict().
    return {"status": "success", "user_created": user.model_dump()}


if __name__ == "__main__":
    # Run the app with Uvicorn, the lightning-fast ASGI server
    uvicorn.run(app, host="0.0.0.0", port=8000)
```

To run this, save it as main.py and execute uvicorn main:app --reload in your terminal (running python main.py also works, via the __main__ block). You can then navigate to http://127.0.0.1:8000/docs in your browser to see the interactive API documentation that FastAPI generated automatically.

Integrating with Other Async Libraries

The real power of an async FastAPI endpoint is its ability to call other async services without blocking. Here’s how you might call an external API using the httpx library:

```python
import httpx
from fastapi import FastAPI

app = FastAPI()


@app.get("/external-data/")
async def get_external_data():
    # httpx.AsyncClient is used for making async HTTP requests.
    async with httpx.AsyncClient() as client:
        # The 'await' keyword is crucial here. It allows the event loop
        # to work on other tasks while waiting for the API response.
        # (If this public endpoint is unavailable, substitute any URL
        # that returns JSON.)
        response = await client.get("https://api.publicapis.org/random")
        # Raise an exception for bad status codes (4xx or 5xx)
        response.raise_for_status()
        return response.json()
```

Unlocking Peak Performance: Advanced Profiling and Debugging

Writing fast code is only half the battle; you also need to know how to find out why your code is slow. Relying on print() statements or guesswork is a recipe for frustration and wasted time. Professional developers use profiling tools to get hard data on where their application is spending its time and resources. This is a critical skill, and recent developments in tooling have made it more accessible than ever.

Moving Beyond `print()` Statements

Profiling is the systematic analysis of a program’s performance. The goal is to identify “hot spots” or bottlenecks: sections of code that consume a disproportionate amount of CPU time or memory. Optimizing these specific areas yields the greatest performance gains. Optimizing blindly, without profiling first, is a classic case of “premature optimization” and often leads to more complex, less readable code with no tangible performance benefit.
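The paragraph above mentions memory as well as CPU time. cProfile only measures time, but the standard library's tracemalloc module can point at memory hot spots in a similar, data-driven way. A minimal sketch; build_big_list and build_small_list are hypothetical workloads chosen to allocate very different amounts of memory:

```python
import tracemalloc


def build_big_list() -> list[str]:
    # Allocates roughly 100k short strings plus the list holding them.
    return [str(i) * 10 for i in range(100_000)]


def build_small_list() -> list[int]:
    return list(range(100))


def top_allocations(limit: int = 3) -> list[tracemalloc.Statistic]:
    tracemalloc.start()
    big = build_big_list()
    small = build_small_list()
    # Snapshot while both lists are still alive.
    snapshot = tracemalloc.take_snapshot()
    tracemalloc.stop()
    del big, small
    # Group live allocations by source line, largest first.
    return snapshot.statistics("lineno")[:limit]


if __name__ == "__main__":
    for stat in top_allocations():
        print(stat)
```

Each printed line names a file, a line number, and the total size allocated there, so the list comprehension in build_big_list shows up at the top.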

Practical Example: Pinpointing Bottlenecks with `cProfile`

Python comes with a built-in profiler called cProfile. It’s robust and provides detailed statistics on function calls. Let’s create a script with an intentionally inefficient function and see how to profile it.

```python
# file: slow_script.py
import time


def slow_function():
    """A function that does some slow, repetitive work."""
    total = 0
    for i in range(10**7):
        total += i
    time.sleep(0.5)  # Simulate a slow I/O operation
    return total


def fast_function():
    """A function that does quick work."""
    return sum(range(100))


def main():
    print("Starting script...")
    result1 = slow_function()
    result2 = fast_function()
    print(f"Slow function result: {result1}")
    print(f"Fast function result: {result2}")
    print("Script finished.")


if __name__ == "__main__":
    main()
```

To profile this script, you don’t need to change the code. Simply run it from your terminal using the cProfile module and save the stats to a file:

```bash
python -m cProfile -o profile.stats slow_script.py
```

This command runs the script and dumps the profiling data into profile.stats. This binary file is hard to read on its own. To make sense of it, we use a visualization tool like snakeviz. First, install it (pip install snakeviz), then run:

```bash
snakeviz profile.stats
```

This will open a web page in your browser with an interactive chart showing exactly where the time was spent. You will immediately see that the vast majority of the execution time is consumed by slow_function, specifically within its loop and the time.sleep() call. This data-driven approach tells you precisely where to focus your optimization efforts.
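If you would rather stay in the terminal, or profile only one part of a program, the standard library's cProfile and pstats modules cover both cases. This sketch profiles a single call in-process using cProfile.Profile as a context manager (supported since Python 3.8) and prints the top entries sorted by cumulative time. The same pstats.Stats class can also load the profile.stats file written by the command above. The workload function is a hypothetical, scaled-down stand-in for slow_function.

```python
import cProfile
import io
import pstats
import time


def workload() -> int:
    # Hypothetical stand-in for slow_function: a CPU loop plus a sleep.
    total = 0
    for i in range(10**6):
        total += i
    time.sleep(0.2)
    return total


def profile_report(limit: int = 5) -> str:
    # Profiling is active only inside the with-block.
    with cProfile.Profile() as profiler:
        workload()
    buffer = io.StringIO()
    # pstats.Stats("profile.stats") would load a saved file instead.
    stats = pstats.Stats(profiler, stream=buffer)
    stats.strip_dirs().sort_stats(pstats.SortKey.CUMULATIVE).print_stats(limit)
    return buffer.getvalue()


if __name__ == "__main__":
    print(profile_report())
```

In the report, workload's tottime column reflects the loop in its own body, while the sleep shows up as a separate built-in entry, which is the same split snakeviz visualizes.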

Tying It All Together: Best Practices for Modern Python Development

Leveraging these powerful tools and paradigms requires a shift in mindset. Here are some best practices to help you integrate them effectively into your development workflow.

Embrace Asynchronicity Where It Fits

Async is not a silver bullet. It excels at handling I/O-bound concurrency. For CPU-bound tasks (e.g., complex mathematical calculations, data processing in a tight loop), using the multiprocessing module to leverage multiple CPU cores is usually the better approach, because in standard CPython builds the GIL lets only one thread execute Python bytecode at a time. The key is to identify the nature of your bottleneck: are you waiting for data, or are you crunching numbers?

Leverage Type Hinting and Data Validation

The benefits seen in FastAPI with Pydantic extend to all Python code. Using type hints makes your code more readable, easier to reason about, and allows static analysis tools like mypy to catch bugs before you even run the program. Libraries like Pydantic can be used outside of web frameworks to bring robust data validation to any part of your application.

Profile Before You Optimize

This is the golden rule of performance engineering. Your intuition about where a program is slow is often wrong. Always use a profiler, such as the built-in cProfile or a sampling profiler like py-spy (which can attach to an already running process with low overhead), to gather empirical data. This ensures your efforts are focused on the parts of the code that will actually make a difference.

Stay Informed

The world of Python development moves quickly, so keeping up with the latest Python news is crucial. Follow influential blogs, subscribe to newsletters like Python Weekly, explore new releases on PyPI, and watch talks from conferences like PyCon. This continuous learning is key to remaining an effective and modern developer.

Conclusion: Your Roadmap to High-Performance Python

The Python landscape is more exciting and powerful than ever before. By mastering the core concepts of asynchronous programming, leveraging modern frameworks like FastAPI, and adopting a data-driven approach to optimization through profiling, you can build applications that are not only functional but also exceptionally performant and scalable. The journey from a good developer to a great one involves embracing these advanced techniques and understanding when and how to apply them.

The key takeaways are clear: use asyncio for I/O-bound concurrency, choose FastAPI for robust and fast API development, and never optimize without data from a profiler. We encourage you to take the code examples from this article, experiment with them, and start integrating these methodologies into your next project. The future of high-performance Python is here, and you now have the roadmap to navigate it successfully.