Riding the Wave: A Developer’s Guide to the Latest Python News in Performance and Asynchronous Programming

The Python ecosystem is evolving at a breakneck pace. For developers, staying current isn’t just a matter of professional development; it’s a strategic necessity for building faster, more scalable, and more resilient applications. The latest python news isn’t about minor version bumps or obscure library updates. Instead, we’re witnessing fundamental shifts in how high-performance systems are designed and implemented. We’re talking about game-changing breakthroughs in asynchronous data streaming with Kafka, revolutionary debugging techniques that can triple code performance, and stunning optimizations in web frameworks like FastAPI that are redefining API development.

This article moves beyond the headlines to provide a deep, technical dive into these pivotal developments. We will explore the core concepts driving this evolution, dissect practical code implementations, and uncover advanced techniques that professional developers can leverage immediately. Whether you’re building real-time data pipelines, developing REST APIs, or optimizing critical business logic, the insights and examples that follow will equip you with the knowledge to harness the full power of modern Python. Prepare to explore the technologies and methodologies that are shaping the future of the language.

The Asynchronous Revolution: Why `async` and `await` Are Dominating Python News

For years, Python’s answer to concurrency was primarily threading, a model famously constrained by the Global Interpreter Lock (GIL). While effective for certain use cases, the GIL prevents multiple native threads from executing Python bytecodes at the same time, creating a significant bottleneck for I/O-bound and CPU-bound tasks within a single process. The latest and most impactful trend in the Python world is the widespread adoption of asynchronous programming via the asyncio library and the native async/await syntax. This paradigm offers a powerful alternative for handling concurrent operations, especially those that spend most of their time waiting for network requests, database queries, or file operations to complete.

From Synchronous to Asynchronous: A Paradigm Shift

In a traditional synchronous model, when a function performs an I/O operation (like fetching a URL), the entire application thread blocks and waits until that operation is finished. If you need to fetch 100 URLs, you must do so one after another, a painfully slow process. Asynchronous programming flips this model on its head. Using an event loop, an async application can initiate an I/O operation, and instead of waiting, it can yield control back to the event loop. The loop can then run other tasks, such as initiating more I/O operations. When the first operation completes, the event loop returns control to the original task to process the result. This cooperative multitasking allows a single thread to manage thousands of concurrent connections efficiently, dramatically improving throughput for I/O-bound workloads.

Practical Example: A Simple Async Web Scraper

Let’s illustrate this with a tangible example. Consider scraping titles from several web pages. A synchronous approach using the popular requests library would fetch each page sequentially. An asynchronous version using aiohttp can send all requests nearly simultaneously.

Here’s how you would build a simple async scraper. Notice the use of async def to define a coroutine, await to pause execution until an I/O operation completes, and asyncio.gather to run multiple coroutines concurrently.

import asyncio

import aiohttp

import time

from bs4 import BeautifulSoup

async def fetch_title(session, url):

"""Asynchronously fetches a URL and extracts its title."""

try:

async with session.get(url, timeout=10) as response:

# Ensure we got a successful response

response.raise_for_status()

html = await response.text()

soup = BeautifulSoup(html, 'html.parser')

return soup.title.string.strip() if soup.title else f"No title found for {url}"

except Exception as e:

return f"Error fetching {url}: {e}"

async def main():

"""Main coroutine to run the scraper."""

urls = [

'https://www.python.org',

'https://www.djangoproject.com',

'https://fastapi.tiangolo.com',

'https://www.realpython.com',

'https://docs.aiohttp.org',

]

start_time = time.time()

async with aiohttp.ClientSession() as session:

# Create a list of tasks to run concurrently

tasks = [fetch_title(session, url) for url in urls]

# Wait for all tasks to complete

titles = await asyncio.gather(*tasks)

for url, title in zip(urls, titles):

print(f"URL: {url}\nTitle: {title}\n")

end_time = time.time()

print(f"Completed in {end_time - start_time:.2f} seconds.")

if __name__ == "__main__":

asyncio.run(main())Running this code will likely take just over the time of the single longest request, whereas a synchronous version would take the sum of all request times. This is the power of non-blocking I/O in action and a core reason why async is at the forefront of Python development.

Implementing High-Performance APIs with FastAPI’s Latest Features

Nowhere is the async revolution more apparent than in web development. While frameworks like Django and Flask remain popular, the big python news in the API world is the meteoric rise of FastAPI. Built from the ground up to be asynchronous, FastAPI leverages modern Python features like type hints and the ASGI (Asynchronous Server Gateway Interface) standard to deliver incredible performance that rivals Node.js and even Go in some benchmarks.

Why FastAPI is a Game-Changer

FastAPI’s brilliance lies in its combination of three key technologies:

- Starlette: A lightweight, high-performance ASGI framework that provides the async web toolkit.

- Pydantic: A powerful data validation library that uses Python type hints to validate, serialize, and deserialize data, automatically generating OpenAPI schemas.

- Asynchronous Native: Path operation functions can be defined with

async def, allowing them to perform non-blocking I/O without tying up a worker process.

This combination provides automatic interactive API documentation (via Swagger UI and ReDoc), dependency injection, and data validation, all while delivering top-tier performance.

Code in Action: Building an Async CRUD Endpoint

Let’s build a simple API endpoint to create an item. This example demonstrates how FastAPI uses Pydantic for request body validation and an async def path operation to simulate a non-blocking database call.

from fastapi import FastAPI

from pydantic import BaseModel, Field

import asyncio

import uuid

# Initialize the FastAPI app

app = FastAPI(

title="Modern API with FastAPI",

description="A demonstration of async endpoints and Pydantic models."

)

# Pydantic model for request body validation

class Item(BaseModel):

name: str = Field(..., min_length=3, max_length=50, description="The name of the item")

price: float = Field(..., gt=0, description="The price must be greater than zero")

is_offer: bool | None = None

# In-memory "database" for demonstration

fake_db = {}

@app.post("/items/", response_model=Item, status_code=201)

async def create_item(item: Item):

"""

Create a new item.

This simulates an async database write operation.

"""

print(f"Received item: {item.name}")

# Simulate a non-blocking database call

await asyncio.sleep(0.5)

item_id = str(uuid.uuid4())

fake_db[item_id] = item.dict()

print(f"Item '{item.name}' created with ID {item_id}")

return item

@app.get("/items/{item_id}")

async def read_item(item_id: str):

"""

Read an item by its ID.

Simulates an async database read.

"""

await asyncio.sleep(0.2)

if item_id in fake_db:

return fake_db[item_id]

return {"error": "Item not found"}In this example, the create_item function is a coroutine. When await asyncio.sleep(0.5) is called, the server isn’t blocked. It can use that half-second to process other incoming requests, leading to much higher concurrency and throughput compared to a traditional synchronous framework.

Common Pitfalls: Mixing Sync and Async

A critical mistake developers make when adopting FastAPI is calling blocking, synchronous code directly within an async def function. For example, using the standard requests.get() library inside an async endpoint will block the entire event loop, freezing the server and defeating the purpose of async. The correct way to handle synchronous code that you cannot replace (e.g., a legacy database driver) is to run it in a separate thread pool using fastapi.concurrency.run_in_threadpool, which allows the event loop to remain unblocked.

Pushing the Boundaries: Kafka Integration and Advanced Performance Profiling

Beyond web APIs, the latest Python developments are revolutionizing data engineering and system observability. High-throughput data streaming and next-generation profiling tools are enabling developers to build and maintain systems at a scale that was previously much more difficult to achieve.

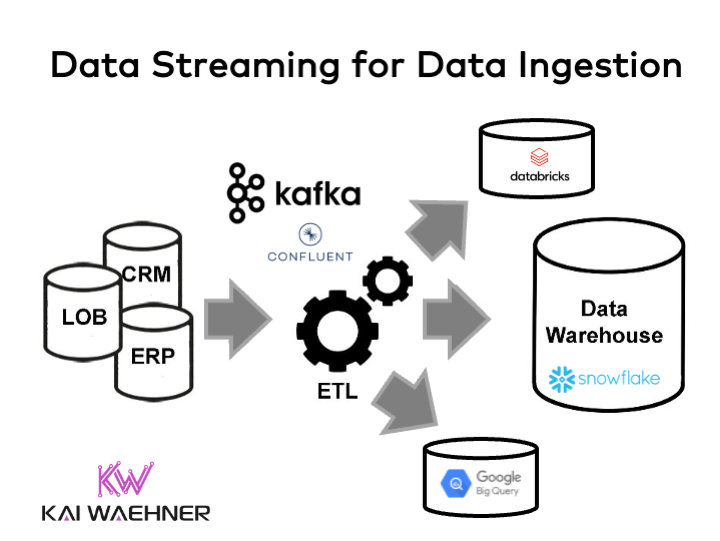

The New Wave of Kafka Integration

Apache Kafka is the de facto standard for building real-time data pipelines. Traditionally, Python’s Kafka libraries were synchronous, meaning a producer would block while waiting for a message to be sent and acknowledged by the Kafka broker. This created a throughput ceiling. The latest python news in this domain is the maturation of async Kafka libraries like aiokafka. By integrating with asyncio, these libraries allow a single Python application to produce or consume messages at an incredible rate. An async producer can fire off a message and, instead of blocking, immediately move on to preparing the next one, handling the broker’s acknowledgment later via the event loop.

Example: An Asynchronous Kafka Producer

Here is a practical example of a high-performance producer using aiokafka. This code starts a producer, sends 100,000 messages to a Kafka topic as quickly as possible, and then gracefully shuts down. This pattern is ideal for applications that need to ingest massive volumes of data in real-time.

import asyncio

from aiokafka import AIOKafkaProducer

import json

import time

async def send_messages():

"""

An async Kafka producer that sends a large number of messages.

"""

producer = AIOKafkaProducer(

bootstrap_servers='localhost:9092',

# Use a JSON serializer for the message value

value_serializer=lambda v: json.dumps(v).encode('utf-8')

)

await producer.start()

print("Producer started...")

start_time = time.time()

num_messages = 100_000

try:

for i in range(num_messages):

message = {'message_id': i, 'content': f'This is message number {i}'}

# The send() method is awaitable but returns a future immediately.

# We can choose not to await each one to send in parallel.

await producer.send('my-topic', value=message)

# Await flush to ensure all messages in the buffer are sent.

await producer.flush()

finally:

print("Stopping producer...")

await producer.stop()

end_time = time.time()

duration = end_time - start_time

print(f"Sent {num_messages} messages in {duration:.2f} seconds.")

print(f"Throughput: {num_messages / duration:.2f} messages/sec.")

if __name__ == "__main__":

# Ensure you have a Kafka broker running on localhost:9092

# and a topic named 'my-topic' created.

asyncio.run(send_messages())A Revolutionary Approach to Debugging: Advanced Profiling

When performance issues arise, traditional profilers like cProfile can be helpful but often introduce significant overhead and struggle to pinpoint issues in complex, I/O-bound applications. The new generation of profiling tools offers a more sophisticated approach.

- Sampling Profilers (

py-spy): These tools work by periodically inspecting the Python process’s call stack from outside the process. This has extremely low overhead and allows you to profile production code without slowing it down. It’s invaluable for finding CPU-bound bottlenecks in running applications. - Memory Profilers (

memray): Developed by Bloomberg, Memray is a powerful memory profiler that can track every allocation in your application. It can generate flame graphs to show you exactly which functions are allocating the most memory, making it an essential tool for debugging memory leaks in long-running services.

These advanced tools provide deep visibility into not just what your code is doing, but how it’s using system resources, enabling optimizations that can boost performance by orders of magnitude.

Best Practices for Harnessing Modern Python

Adopting these new technologies requires a shift in mindset and adherence to a new set of best practices. To truly capitalize on the performance gains offered, developers must be deliberate in their design and implementation choices.

Code Optimization Strategies

First and foremost, embrace the ecosystem. When working in an async context, prioritize using libraries that are async-native. Use aiohttp or httpx instead of requests. Use asyncpg instead of psycopg2 for PostgreSQL. Using a synchronous library in an async application is the most common and damaging performance anti-pattern.

For an extra performance boost in asyncio applications, consider installing uvloop. It is a drop-in replacement for the built-in asyncio event loop, implemented in Cython and built on top of libuv (the same library that powers Node.js). In many benchmarks, it can make asyncio 2-4x faster.

Installing and using it is incredibly simple:

# First, install it: pip install uvloop

import asyncio

import uvloop

# Install the uvloop event loop policy at the start of your application

uvloop.install()

async def main():

print("This application is now running on uvloop!")

await asyncio.sleep(1)

if __name__ == "__main__":

asyncio.run(main())Troubleshooting Common Performance Issues

When troubleshooting, your primary suspect should always be a blocked event loop. If your API feels sluggish despite being async, use logging or a profiler to find any synchronous I/O calls hidden in your async functions. Remember, any function that takes a significant amount of time to run without using `await` is a potential blocker.

For memory leaks, especially in services that run for days or weeks, periodic analysis with a tool like memray is essential. A common source of leaks is creating circular references or storing large objects in global caches without a proper eviction policy.

Finally, to keep up with the rapid pace of change, make it a habit to follow key sources of python news. Subscribe to newsletters like Python Weekly and PyCoders Weekly, follow the official blogs of frameworks like FastAPI, and watch talks from conferences like PyCon. The community is constantly innovating, and staying informed is the best way to keep your skills sharp.

Conclusion: Embracing the Future of Python Development

The Python landscape is in an exciting state of transformation. The convergence of mature asynchronous programming, high-performance web frameworks, and advanced tooling has unlocked new possibilities for developers. We’ve seen how the fundamental shift to an async-first mindset, championed by asyncio, is the engine driving this change. Frameworks like FastAPI have harnessed this power to create a development experience that is both incredibly fast and highly productive.

Furthermore, breakthroughs in specialized domains, such as the async Kafka clients revolutionizing data streaming and next-generation profilers providing unprecedented system visibility, are empowering us to build more robust and scalable systems than ever before. The key takeaway is clear: to build modern, high-performance applications, developers must embrace these new paradigms. Start by experimenting with an async side-project, introduce profiling into your CI/CD pipeline, and stay curious. The future of Python is fast, concurrent, and full of opportunity.