Architecting the Future: System Design for Agentic AI in Python

The Next Frontier: Moving from AI Models to Autonomous Agents

The world of Python development is buzzing with a paradigm shift. For years, the focus has been on training and deploying machine learning models that perform specific, well-defined tasks. Now, a new frontier is capturing the imagination of developers and businesses alike: agentic AI. This evolution represents a move from passive, predictive tools to active, autonomous systems that can reason, plan, and execute complex, multi-step tasks to achieve a goal. The trend dominates recent Python news and technical discussions, not least because building these systems requires a fundamental rethinking of software architecture.

Unlike a traditional chatbot that follows a simple request-response pattern, an AI agent can interact with its environment, use tools, and maintain a memory of past interactions to inform future decisions. Imagine an agent that can not only answer a question about a company’s quarterly earnings but also access a database, run a financial analysis script, generate a summary report, and email it to stakeholders. Building such a system isn’t just about prompt engineering; it’s about robust system design. This article delves into the critical architectural patterns, best practices, and common pitfalls of designing agentic AI systems in Python, providing practical code examples to guide you through this exciting new landscape.

Section 1: The Anatomy of an Agentic AI System

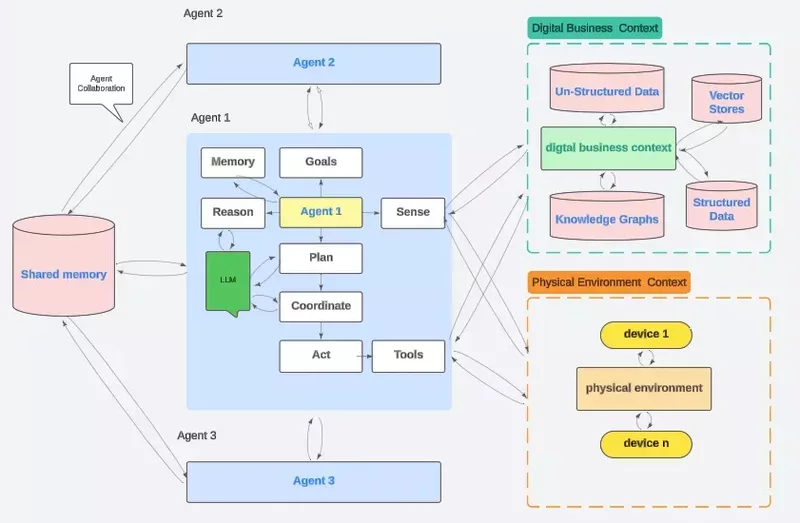

At its core, an agentic system is an orchestrated collection of components working in concert to achieve a goal. Understanding these individual parts is the first step toward designing a cohesive and effective architecture. While frameworks like LangChain and LlamaIndex provide high-level abstractions, a solid grasp of the underlying principles is essential for building custom, production-ready agents.

The Core Components

- The Brain (LLM): This is the central reasoning engine, typically a Large Language Model (LLM) like GPT-4, Claude 3, or an open-source alternative. The brain is responsible for understanding user intent, breaking down complex goals into smaller, manageable steps, and deciding which actions to take next. Its primary function is planning and decision-making, not just text generation.

- Tools and Skills: Agents are only as powerful as the tools they can wield. A “tool” is any function, API, or external service the agent can call to interact with the outside world. This could be anything from a simple calculator function or a web search API to a database query engine or even another AI model. Defining these tools as discrete, reliable, and well-documented functions is a cornerstone of good agent design.

- Memory: For an agent to perform multi-step tasks, it needs a memory. Memory can be categorized into two types:

  - Short-Term Memory: This is the conversational context, holding the history of the current interaction. It allows the agent to remember what was just discussed and maintain a coherent dialogue. This is often managed as a simple buffer of recent messages.

  - Long-Term Memory: This is a more persistent form of storage, allowing the agent to recall information across different sessions. This is crucial for personalization and learning over time. Vector databases like Pinecone, Chroma, or Weaviate are commonly used for this, enabling the agent to retrieve relevant information based on semantic similarity.

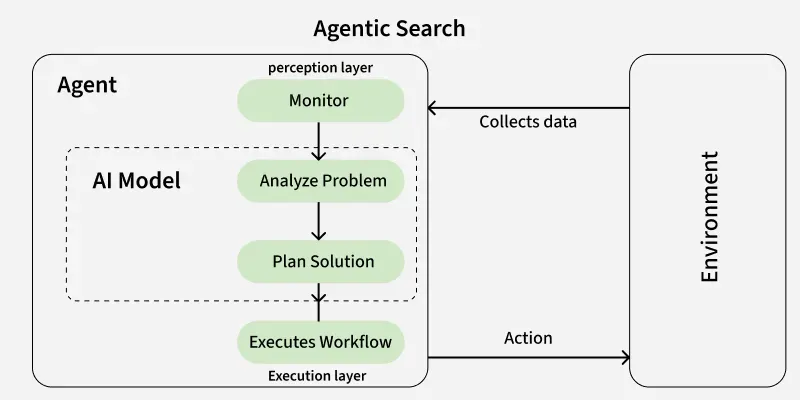

- The Orchestrator (Agentic Loop): This is the heart of the system: the control flow that ties everything together. The orchestrator runs a loop, often based on a pattern like ReAct (Reasoning + Acting). In each cycle, it feeds the current state and goal to the LLM (the brain), which then decides on an action (e.g., use a tool or respond to the user). The orchestrator executes that action, observes the result, updates the state, and repeats the process until the goal is achieved or a turn limit is reached.
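The two memory types described above can be sketched without any external dependencies. In this minimal illustration, `ShortTermMemory` keeps a bounded buffer of recent messages, and `LongTermMemory` stands in for a vector database by ranking stored notes with cosine similarity over simple word-count vectors. Both class names are hypothetical, and the bag-of-words "embedding" is an assumption made for brevity; a real system would use an embedding model and a store such as Chroma or Pinecone.

```python
import math
import re
from collections import Counter, deque


class ShortTermMemory:
    """Bounded buffer of the most recent conversation turns."""

    def __init__(self, max_messages: int = 10):
        # A deque with maxlen silently drops the oldest message when full.
        self.messages = deque(maxlen=max_messages)

    def add(self, role: str, content: str) -> None:
        self.messages.append(f"{role}: {content}")

    def as_context(self) -> str:
        return "\n".join(self.messages)


def _embed(text: str) -> Counter:
    # Stand-in for a real embedding model (assumption): lowercase word counts.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def _cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[token] * b[token] for token in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class LongTermMemory:
    """Toy semantic store: returns the stored notes most similar to a query."""

    def __init__(self):
        self.notes: list[tuple[str, Counter]] = []

    def remember(self, note: str) -> None:
        self.notes.append((note, _embed(note)))

    def recall(self, query: str, k: int = 1) -> list[str]:
        query_vec = _embed(query)
        ranked = sorted(self.notes, key=lambda item: _cosine(query_vec, item[1]), reverse=True)
        return [note for note, _ in ranked[:k]]
```

Bounding the short-term buffer keeps each prompt within the model's context window, while long-term recall brings back only the few notes relevant to the current turn.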

Section 2: Architectural Patterns for Scalable Agentic Systems

As you move from a simple prototype to a production system, the architectural choices you make become critically important. The right pattern can mean the difference between a scalable, maintainable system and a brittle, unmanageable one. Two primary architectural patterns emerge in the context of agentic AI: the monolithic agent and the microservices-based agent.

The Monolithic Agent Architecture

In a monolithic architecture, all the components—the orchestrator, tool definitions, and state management—are tightly coupled within a single application or service. This is a common starting point for many projects due to its simplicity.

Characteristics:

- Single Codebase: All logic resides in one place, making it easy to get started and debug in the early stages.

- Direct Function Calls: The orchestrator calls tools directly as Python functions, which is fast and straightforward.

- Simple Deployment: The entire system can be deployed as a single process.

This approach is excellent for proofs-of-concept, internal tools, or agents with a limited and stable set of tools. However, as the complexity grows, it can become difficult to maintain, scale, and update individual components independently.
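In a monolithic agent, the tool layer can be as simple as a dictionary that maps names to in-process functions. The sketch below assumes a hypothetical `tool` decorator and `TOOL_REGISTRY` dictionary (neither comes from a specific framework): the orchestrator looks up the name the LLM chose and calls the function directly, with no network hop in between.

```python
from typing import Callable

# Hypothetical in-process registry: tool name -> (description, function)
TOOL_REGISTRY: dict[str, tuple[str, Callable[[str], str]]] = {}


def tool(name: str, description: str):
    """Register a plain Python function as an agent tool."""
    def decorator(func: Callable[[str], str]) -> Callable[[str], str]:
        TOOL_REGISTRY[name] = (description, func)
        return func
    return decorator


@tool("word_count", "Counts the words in the input text.")
def word_count(text: str) -> str:
    return str(len(text.split()))


@tool("uppercase", "Converts the input text to upper case.")
def uppercase(text: str) -> str:
    return text.upper()


def dispatch(action: str, action_input: str) -> str:
    """Call the tool the LLM selected as an ordinary function call."""
    if action not in TOOL_REGISTRY:
        raise KeyError(f"Unknown tool: {action}")
    _, func = TOOL_REGISTRY[action]
    return func(action_input)
```

Because `dispatch` is a plain function call, a failing tool produces a stack trace that points straight at the tool's code, which is much of what makes this layout easy to debug early on.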

The Microservices-Based Agent Architecture

For complex, high-stakes applications, a microservices-based architecture offers superior scalability, resilience, and maintainability. In this model, different components of the agentic system are deployed as independent services that communicate over a network (e.g., via REST APIs or gRPC).

Characteristics:

- Decoupled Services: The LLM inference, tool execution, and memory management can each be a separate service. For example, you might have a “Calculator Service,” a “Database Query Service,” and a “Vector Search Service.”

- Independent Scalability: If your database query tool is computationally expensive, you can scale that service independently of the main orchestration service.

- Technology Diversity: Each service can be built with the best technology for its specific job. Your database tool might be in Python, while a high-performance image processing tool could be written in Go or Rust.

- Enhanced Security: Tools can be sandboxed within their own services, limiting the potential blast radius if a tool is compromised or behaves unexpectedly.

This pattern is ideal for enterprise-grade applications where reliability and scalability are paramount. The trade-off is increased operational complexity in terms of deployment, monitoring, and network communication.
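From the agent's point of view, a tool in the microservices layout can keep the same `name`/`description`/`execute` interface; only `execute` becomes a network call. The sketch below uses the standard library's `urllib.request`. The `RemoteTool` class and its JSON contract (`{"input": ...}` in, `{"output": ...}` out) are hypothetical assumptions, not a standard protocol. The explicit timeout and the conversion of transport errors into an observation string keep one slow or failing service from hanging the whole agent.

```python
import json
import urllib.request


class RemoteTool:
    """Agent tool backed by a separate HTTP service (hypothetical JSON contract)."""

    def __init__(self, name: str, description: str, endpoint: str, timeout: float = 5.0):
        self.name = name
        self.description = description
        self.endpoint = endpoint
        self.timeout = timeout

    def execute(self, tool_input: str) -> str:
        # Assumed contract: POST {"input": ...} and receive {"output": ...}.
        payload = json.dumps({"input": tool_input}).encode("utf-8")
        request = urllib.request.Request(
            self.endpoint,
            data=payload,
            headers={"Content-Type": "application/json"},
            method="POST",
        )
        try:
            with urllib.request.urlopen(request, timeout=self.timeout) as response:
                body = json.loads(response.read().decode("utf-8"))
            return str(body["output"])
        except (OSError, KeyError, TypeError, ValueError) as exc:
            # OSError covers URLError, HTTPError, and timeouts; ValueError covers bad JSON.
            # Return the failure as an observation the LLM can reason about.
            return f"ERROR calling {self.name}: {exc}"
```

An instance such as `RemoteTool("calculator", "Evaluates arithmetic expressions.", "http://calculator-service.internal/run")` (a hypothetical internal URL) could sit alongside local tools, since an orchestrator like the one in Section 3 only relies on `name` and `execute`.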

Section 3: Practical Implementation in Python: Building the Core Loop

Let’s translate theory into practice by building a simple, monolithic agent in Python. Our agent will be a research assistant whose goal is to answer a question. It will have two tools: a web search tool and a text summarization tool. This example will illustrate the core concepts of tool definition, state management, and the orchestration loop.

Step 1: Defining the Tools

First, we define our tools as simple Python classes. Each tool should have a clear name, a description (for the LLM to understand its purpose), and an `execute` method.

```python
import time


class WebSearchTool:
    """A tool to search the web for up-to-date information."""

    def __init__(self):
        self.name = "web_search"
        self.description = "Searches the web for information on a given topic. Input should be a search query."

    def execute(self, query: str) -> str:
        print(f"--- Executing Web Search with query: '{query}' ---")
        # In a real application, this would call a search API like Google or Bing.
        # We'll simulate the API call and result.
        time.sleep(1)
        return (
            "Python 3.12 was released in October 2023, introducing improvements like the new "
            "'type' statement, more flexible f-string parsing, and performance enhancements "
            "such as inlined comprehensions (PEP 709)."
        )


class SummarizationTool:
    """A tool to summarize a long piece of text."""

    def __init__(self):
        self.name = "summarize_text"
        self.description = "Summarizes a given block of text into a few key sentences. Input should be the text to summarize."

    def execute(self, text: str) -> str:
        print(f"--- Executing Summarization with text: '{text[:50]}...' ---")
        # This would call an LLM or a library like Hugging Face Transformers.
        # We'll simulate the summarization.
        time.sleep(1)
        summary = "Python 3.12, released in Oct 2023, offers better performance and new features like the 'type' statement."
        return summary
```
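A tool's `description` only helps if the LLM actually sees it. One way to expose it, sketched here as an assumption rather than as part of the example above, is to render every tool's name and description into a catalog that is included in each prompt. The `Tool` protocol and `render_tool_catalog` helper are hypothetical names; the protocol simply matches the `name` and `description` attributes the tool classes already define.

```python
from typing import Iterable, Protocol


class Tool(Protocol):
    """Structural type: anything with a name and a description."""
    name: str
    description: str


def render_tool_catalog(tools: Iterable[Tool]) -> str:
    """Format tool names and descriptions for inclusion in the LLM prompt."""
    lines = ["You can use the following tools:"]
    for t in tools:
        lines.append(f"- {t.name}: {t.description}")
    # The pseudo-action the orchestrator treats as "stop and answer".
    lines.append("- final_answer: Use this when you can answer the user directly.")
    return "\n".join(lines)
```

Generating this text from the tool objects, instead of hand-writing it into a prompt, keeps the prompt in sync whenever a tool is added or its description changes.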

Step 2: The Orchestrator and State Management

Next, we create the orchestrator. This class will manage the agent’s state (the conversation history), hold the available tools, and run the main reasoning loop.

```python
import json

# Assumes WebSearchTool and SummarizationTool from Step 1 are defined in the same file.


# A mock LLM function to simulate the brain's decision-making
def mock_llm_call(prompt: str) -> str:
    """
    Simulates a call to an LLM. In a real system, this would be an API call
    to OpenAI, Anthropic, or a local model.
    It returns a JSON string representing the agent's next thought and action.
    """
    print("\n>>> LLM is thinking...")
    # Simple rule-based logic for this simulation. The full history, including
    # the original question, is resent every turn, so the most advanced state
    # must be checked first; otherwise the agent would repeat the search forever.
    if "OBSERVATION: Python 3.12, released in Oct 2023" in prompt:
        # We have the summary, so the final step is to answer the user
        return json.dumps({
            "thought": "I have the summarized information. I can now provide the final answer to the user.",
            "action": "final_answer",
            "action_input": "According to my research, Python 3.12 was released in October 2023 and includes performance boosts and new features like the 'type' statement.",
        })
    elif "OBSERVATION: Python 3.12 was released" in prompt:
        # Pass the most recent observation (the raw search result) to the summarizer
        last_observation = prompt.rsplit("OBSERVATION: ", 1)[1].split("\n", 1)[0]
        return json.dumps({
            "thought": "I have the search results. The text is a bit long, so I should summarize it to give a concise answer.",
            "action": "summarize_text",
            "action_input": last_observation,
        })
    else:
        return json.dumps({
            "thought": "The user wants to know about the latest Python version. I should use the web_search tool to find this information.",
            "action": "web_search",
            "action_input": "latest version of Python release date and features",
        })


class AgentOrchestrator:
    def __init__(self, tools: list):
        self.history = []
        self.tools = {tool.name: tool for tool in tools}

    def run(self, initial_goal: str):
        print(f"Goal: {initial_goal}\n")
        self.history.append(f"USER: {initial_goal}")
        max_turns = 5

        for _ in range(max_turns):
            # 1. Construct the prompt for the LLM
            prompt = "\n".join(self.history)
            prompt += (
                "\n\nBased on the conversation history, what is your next thought and action? "
                "Respond with a JSON object with 'thought', 'action', and 'action_input'."
            )

            # 2. Get the next action from the LLM (our brain)
            llm_response_str = mock_llm_call(prompt)
            llm_response = json.loads(llm_response_str)
            thought = llm_response["thought"]
            action = llm_response["action"]
            action_input = llm_response["action_input"]
            print(f"Thought: {thought}")
            self.history.append(f"AGENT_THOUGHT: {thought}")

            # 3. Execute the action
            if action == "final_answer":
                print(f"\nFinal Answer: {action_input}")
                break
            elif action in self.tools:
                tool_to_use = self.tools[action]
                observation = tool_to_use.execute(action_input)
                print(f"Observation: {observation}")
                self.history.append(f"OBSERVATION: {observation}")
            else:
                print(f"Error: Unknown action '{action}'")
                break
        else:
            # for/else: runs only if the loop finished without hitting a break
            print("Agent reached max turns without a final answer.")


# --- Main execution ---
if __name__ == "__main__":
    search_tool = WebSearchTool()
    summary_tool = SummarizationTool()
    agent = AgentOrchestrator(tools=[search_tool, summary_tool])
    agent.run("What is the latest version of Python and what are its key features?")
```
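The loop above reports progress with `print`. As a sketch of the observability practice discussed in the next section, each turn could instead be emitted as one JSON record through Python's standard `logging` module, which makes runs searchable and easy to replay. The `log_step` helper and its field names are illustrative assumptions; inside `AgentOrchestrator.run` it would be called once per turn, after the tool executes.

```python
import json
import logging
import time
from typing import Optional

logger = logging.getLogger("agent")


def log_step(turn: int, prompt: str, raw_response: str, action: str,
             action_input: str, observation: Optional[str]) -> dict:
    """Emit one structured (JSON) log record per agent turn and return it."""
    record = {
        "turn": turn,
        "timestamp": time.time(),
        "prompt": prompt,              # exactly what was sent to the LLM
        "raw_response": raw_response,  # unparsed LLM output, useful when parsing fails
        "action": action,
        "action_input": action_input,
        "observation": observation,    # None for final answers
    }
    logger.info(json.dumps(record))
    return record
```

With `logging.basicConfig(level=logging.INFO, format="%(message)s")` configured at startup, every turn appears as a single JSON line that can be shipped to whatever log search or tracing tool you already use.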

Section 4: Best Practices and Common Pitfalls

![AI agent system design - Why AI Agents are Good System Design [System Design Sundays]](https://python-news.com/wp-content/uploads/2025/12/inline_2573fd90.jpg)

Building robust agentic systems requires discipline and foresight. Adhering to best practices can help you avoid common traps that lead to unreliable and unpredictable agent behavior.

Best Practices for Agentic Design

- Observability is Key: You cannot fix what you cannot see. Implement structured logging for every step of the agent’s reasoning loop. Log the prompts sent to the LLM, the raw responses, the tool being called, its input, and its output. Tools like LangSmith or custom logging dashboards are invaluable for debugging.

- Design Atomic Tools: Tools should be simple, reliable, and do one thing well. A tool that tries to do too much is harder for the LLM to use correctly and harder to debug. Make them idempotent where possible (i.e., repeating a call with the same input has the same effect as making it once), so that a retried or duplicated call does no extra harm.

- Implement Guardrails and Validation: Never blindly trust the output of an LLM. Before executing a tool, validate the inputs generated by the LLM. For example, if a tool expects a number, ensure you don’t receive a string. For critical actions (like sending an email or modifying a database), consider adding a human-in-the-loop confirmation step.

- Separate Planning from Execution: The LLM should be responsible for high-level planning, not low-level implementation details. The Python code (the tools) should handle the robust execution. This separation of concerns makes the system more modular and reliable.
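The guardrail and separation-of-concerns practices above can be made concrete by validating the LLM's JSON decision before any tool runs. This minimal sketch assumes the `thought`/`action`/`action_input` format used in Section 3; `Decision`, `parse_decision`, `approved`, and the `CONFIRM_ACTIONS` set (with hypothetical `send_email` and `update_database` tools) are illustrative names, and `input()` stands in for whatever approval channel a real deployment would use.

```python
import json
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Decision:
    thought: str
    action: str
    action_input: str


# Hypothetical side-effecting tools that require human approval.
CONFIRM_ACTIONS = {"send_email", "update_database"}
REQUIRED_KEYS = ("thought", "action", "action_input")


def parse_decision(raw: str, allowed_actions: set[str]) -> Decision:
    """Reject malformed or unexpected LLM output instead of executing it."""
    data = json.loads(raw)  # raises json.JSONDecodeError (a ValueError) on bad JSON
    if not isinstance(data, dict):
        raise ValueError("LLM response must be a JSON object")
    missing = set(REQUIRED_KEYS) - set(data)
    if missing:
        raise ValueError(f"Missing keys: {sorted(missing)}")
    if not all(isinstance(data[key], str) for key in REQUIRED_KEYS):
        raise ValueError("All fields must be strings")
    if data["action"] not in allowed_actions:
        raise ValueError(f"Action not allowed: {data['action']!r}")
    return Decision(data["thought"], data["action"], data["action_input"])


def approved(decision: Decision, ask: Callable[[str], str] = input) -> bool:
    """Human-in-the-loop gate for critical actions; other actions pass through."""
    if decision.action not in CONFIRM_ACTIONS:
        return True
    answer = ask(f"Allow {decision.action}({decision.action_input!r})? [y/N] ")
    return answer.strip().lower() == "y"
```

A `ValueError` from `parse_decision` can itself be fed back to the LLM as an observation, giving it a chance to correct its output on the next turn.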

Common Pitfalls to Avoid

- Brittle Prompting: Relying on a single, complex prompt that tries to control the entire agent’s behavior is a recipe for failure. Instead, use a structured approach where the prompt for each step of the loop is dynamically generated based on the current state and history.

- Ignoring State Management: Forgetting to properly manage the agent’s memory and state is a common mistake. Without a clear history, the agent can get stuck in loops or lose track of the user’s original goal.

- Underestimating Tool-Use Failures: Network APIs fail, databases time out, and functions raise exceptions. Your orchestration loop must have robust error handling. When a tool fails, the agent should be able to observe the error, reason about it, and potentially try a different approach.

- Security Oversights: If an agent can execute code or query databases, it presents a significant security risk. Never let an agent execute arbitrary, unreviewed code directly on your host. Run tools in sandboxed environments (e.g., Docker containers) with only the permissions they need, so that a successful prompt injection attack is contained rather than causing real-world damage.
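For tool-use failures, one common approach is to catch exceptions at the orchestrator boundary, retry transient errors a bounded number of times, and return the final error as an observation instead of crashing the loop. The sketch below assumes that `ConnectionError` and `TimeoutError` are the "transient" cases worth retrying and uses a simple exponential backoff; `run_tool_safely` and its parameters are hypothetical.

```python
import time
from typing import Callable


def run_tool_safely(execute: Callable[[str], str], tool_input: str,
                    retries: int = 2, base_delay: float = 0.5) -> str:
    """Run a tool and always return an observation string; never raise."""
    for attempt in range(retries + 1):
        try:
            return execute(tool_input)
        except (ConnectionError, TimeoutError) as exc:
            # Transient failure: give up after the last attempt, else back off and retry.
            if attempt == retries:
                return f"ERROR: tool failed after {retries + 1} attempts: {exc!r}"
            time.sleep(base_delay * 2 ** attempt)
        except Exception as exc:
            # Non-transient failure (bad input, bug): report immediately, no retry.
            return f"ERROR: {type(exc).__name__}: {exc}"
    return "ERROR: tool was not attempted (retries < 0)"
```

In the Section 3 loop, `observation = run_tool_safely(tool_to_use.execute, action_input)` would replace the direct call, so a failure lands in the history as an `OBSERVATION:` and the LLM can choose a different approach on the next turn.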

Conclusion: Engineering the Future with Python

The rise of agentic AI is one of the most significant developments in the software industry, and it’s a hot topic in current Python news for good reason. Python, with its rich ecosystem of data science libraries, web frameworks, and AI tools, is well positioned to be the language of choice for building these intelligent systems. However, success in this new paradigm requires more than just API calls to an LLM. It demands a disciplined approach to system design, focusing on modularity, observability, and security.

By understanding the core components of an agent, choosing the right architectural pattern for your needs, and adhering to engineering best practices, developers can move beyond simple AI-powered features and begin building truly autonomous systems that can solve complex problems. The journey is challenging, but the potential to create transformative applications makes it one of the most exciting fields in technology today.