The Latest in Python News: Crafting Intelligent Game Worlds with AI and Machine Learning

Introduction

In the ever-evolving landscape of software development, Python continues to solidify its position as a dominant force, not just in data science and web development, but increasingly in the complex and creative realm of game development. The latest python news isn’t just about new library releases; it’s about the innovative applications driving the industry forward. One of the most exciting frontiers is the use of Python to build sophisticated Artificial Intelligence (AI) for non-player characters (NPCs) and game systems. Gone are the days of enemies walking in predictable, repetitive patterns. Today’s players expect dynamic, challenging, and believable virtual worlds, and Python is providing developers with the tools to build them. This article explores the journey from fundamental AI concepts to advanced machine learning models, demonstrating how Python can be used to breathe life into game characters and create truly immersive experiences. We will delve into practical code examples, discuss best practices for integration and performance, and provide a roadmap for developers looking to leverage Python’s power to craft the next generation of intelligent game AI.

Section 1: The Foundations of Game AI with Python

Before diving into complex machine learning, it’s crucial to understand the foundational pillars of game AI. These are the time-tested techniques that power the majority of character behaviors in games today. Python’s clean syntax and extensive standard library make it an ideal environment for prototyping and implementing these core logic systems. Its readability allows developers to express complex behaviors clearly, which is invaluable when debugging why an NPC is running into a wall instead of chasing the player.

Finite State Machines (FSMs)

A Finite State Machine is one of the simplest and most common patterns for controlling AI behavior. An FSM consists of a finite number of states, transitions between those states, and actions performed within each state. For example, a simple guard NPC might have states like IDLE, PATROLLING, CHASING, and ATTACKING. The transitions are triggered by in-game events, such as spotting the player or hearing a noise.

Here’s a practical Python implementation of a simple FSM for a game character. This class manages the character’s state and transitions based on its perception of the game world (e.g., player distance).

import random

class CharacterAI:

def __init__(self, name):

self.name = name

self.state = 'IDLE'

self.patrol_points = [(10, 20), (50, 30), (20, 60)]

self.current_patrol_index = 0

print(f"{self.name} the Adventurer awakens! Current state: {self.state}")

def change_state(self, new_state):

if self.state != new_state:

print(f"{self.name} is transitioning from {self.state} to {new_state}.")

self.state = new_state

def update(self, player_distance, player_in_sight):

"""Main update loop to be called each game tick."""

# State: IDLE

if self.state == 'IDLE':

print(f"{self.name} is standing idle, observing the surroundings.")

if random.random() > 0.8:

self.change_state('PATROLLING')

if player_in_sight and player_distance < 15:

self.change_state('CHASING')

# State: PATROLLING

elif self.state == 'PATROLLING':

target_point = self.patrol_points[self.current_patrol_index]

print(f"{self.name} is patrolling towards {target_point}.")

# In a real game, you'd add movement logic here.

# For this example, we'll simulate reaching the point.

if random.random() > 0.6:

print(f"{self.name} reached patrol point {self.current_patrol_index + 1}.")

self.current_patrol_index = (self.current_patrol_index + 1) % len(self.patrol_points)

self.change_state('IDLE')

if player_in_sight and player_distance < 15:

self.change_state('CHASING')

# State: CHASING

elif self.state == 'CHASING':

print(f"{self.name} is chasing the player! Distance: {player_distance}")

if player_distance < 3:

self.change_state('ATTACKING')

elif not player_in_sight or player_distance >= 20:

self.change_state('IDLE')

# State: ATTACKING

elif self.state == 'ATTACKING':

print(f"{self.name} is attacking the player with a mighty swing!")

if player_distance > 3:

self.change_state('CHASING')

# --- Simulation ---

npc = CharacterAI("Goblin Guard")

for tick in range(10):

print(f"\n--- Game Tick {tick+1} ---")

# Simulate changing game conditions

player_is_visible = random.choice([True, False])

distance_to_player = random.randint(1, 25)

print(f"Player visible: {player_is_visible}, Distance: {distance_to_player}")

npc.update(player_distance=distance_to_player, player_in_sight=player_is_visible)

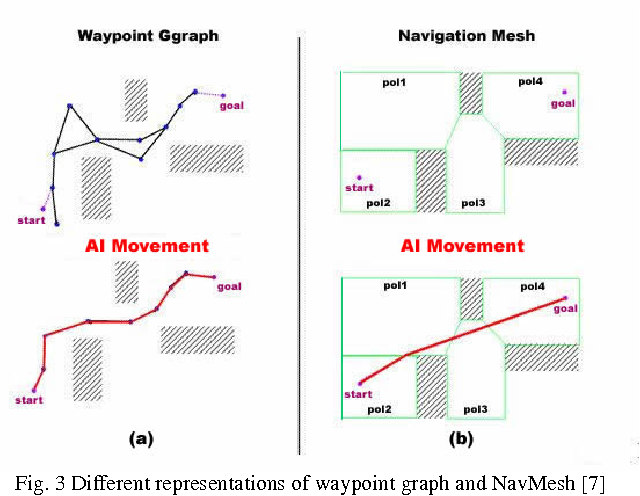

Section 2: Intelligent Movement with Pathfinding Algorithms

An AI that can decide what to do is only half the battle; it also needs to navigate the game world intelligently. This is where pathfinding algorithms come in. A static world with obstacles requires an algorithm that can find an optimal path from point A to point B. The A* (A-star) algorithm is a cornerstone of game development for this very reason. It’s efficient and effective, finding the shortest path by considering both the distance already traveled and a heuristic estimate of the remaining distance.

Implementing A* in Python

Python, with its straightforward data structures like lists and dictionaries, is excellent for implementing algorithms like A*. For better performance in a real-world scenario, a priority queue (available in Python’s `heapq` module) is essential for quickly accessing the node with the lowest cost.

Below is a simplified implementation of A* for a grid-based map. This example demonstrates the core logic of calculating costs, using a heuristic, and reconstructing the final path.

import heapq

class Node:

"""A node class for A* Pathfinding"""

def __init__(self, parent=None, position=None):

self.parent = parent

self.position = position

self.g = 0 # Cost from start to current node

self.h = 0 # Heuristic cost from current node to end

self.f = 0 # Total cost (g + h)

def __eq__(self, other):

return self.position == other.position

def __lt__(self, other):

return self.f < other.f

def astar(grid, start, end):

"""Returns a list of tuples as a path from the given start to the given end in the given grid"""

start_node = Node(None, start)

end_node = Node(None, end)

open_list = []

closed_list = set()

heapq.heappush(open_list, start_node)

while len(open_list) > 0:

current_node = heapq.heappop(open_list)

closed_list.add(current_node.position)

if current_node == end_node:

path = []

current = current_node

while current is not None:

path.append(current.position)

current = current.parent

return path[::-1] # Return reversed path

children = []

for new_position in [(0, -1), (0, 1), (-1, 0), (1, 0)]: # Adjacent squares

node_position = (current_node.position[0] + new_position[0], current_node.position[1] + new_position[1])

# Make sure within range and not an obstacle

if node_position[0] > (len(grid) - 1) or node_position[0] < 0 or \

node_position[1] > (len(grid[0]) - 1) or node_position[1] < 0 or \

grid[node_position[0]][node_position[1]] != 0:

continue

if node_position in closed_list:

continue

new_node = Node(current_node, node_position)

children.append(new_node)

for child in children:

child.g = current_node.g + 1

# Manhattan distance heuristic

child.h = abs(child.position[0] - end_node.position[0]) + abs(child.position[1] - end_node.position[1])

child.f = child.g + child.h

if any(open_node for open_node in open_list if child == open_node and child.g > open_node.g):

continue

heapq.heappush(open_list, child)

# --- Simulation ---

# 0 = walkable, 1 = obstacle

grid_map = [

[0, 0, 0, 0, 1, 0, 0, 0],

[0, 1, 0, 0, 1, 0, 0, 0],

[0, 1, 0, 0, 1, 0, 0, 0],

[0, 0, 0, 0, 0, 0, 0, 0],

[0, 0, 1, 1, 1, 1, 0, 0],

[0, 0, 0, 0, 0, 0, 0, 0]

]

start_pos = (0, 0)

end_pos = (5, 7)

path = astar(grid_map, start_pos, end_pos)

print(f"Path found from {start_pos} to {end_pos}: {path}")

Section 3: The Next Frontier: Machine Learning for Adaptive AI

While scripted AI like FSMs and pathfinding are powerful, they are inherently predictable. Once a player figures out the pattern, the challenge diminishes. The latest trends in python news for AI development focus on creating agents that can learn and adapt. This is where Machine Learning (ML), specifically Reinforcement Learning (RL), comes into play. In RL, an “agent” (our NPC) learns to make optimal decisions by performing actions in an “environment” (the game world) to maximize a cumulative “reward.”

Introduction to Q-Learning

Q-Learning is a popular model-free RL algorithm. It works by learning a quality function (the “Q” function) for state-action pairs. Essentially, it builds a table (the Q-table) that tells the agent the expected future reward for taking a certain action in a certain state. Through trial and error (exploration), the agent gradually learns which actions lead to the best outcomes.

This approach is perfect for creating AI that can learn complex behaviors that would be difficult to script by hand, such as mastering a mini-game, optimizing combat tactics, or even discovering emergent strategies. Python, with its powerful libraries like NumPy for numerical operations and potentially TensorFlow or PyTorch for more complex models, is the language of choice for RL research and implementation.

import numpy as np

class QLearningAgent:

def __init__(self, n_states, n_actions, learning_rate=0.1, discount_factor=0.9, exploration_rate=1.0, exploration_decay=0.99):

self.n_states = n_states

self.n_actions = n_actions

self.lr = learning_rate

self.gamma = discount_factor

self.epsilon = exploration_rate

self.epsilon_decay = exploration_decay

# Initialize Q-table with zeros

self.q_table = np.zeros((n_states, n_actions))

def choose_action(self, state):

# Exploration vs. Exploitation

if np.random.uniform(0, 1) < self.epsilon:

return np.random.choice(self.n_actions) # Explore: choose a random action

else:

return np.argmax(self.q_table[state, :]) # Exploit: choose the best known action

def learn(self, state, action, reward, next_state):

old_value = self.q_table[state, action]

next_max = np.max(self.q_table[next_state, :])

# Q-learning formula

new_value = (1 - self.lr) * old_value + self.lr * (reward + self.gamma * next_max)

self.q_table[state, action] = new_value

def update_exploration_rate(self):

self.epsilon *= self.epsilon_decay

# --- Simplified Simulation ---

# Imagine a simple game with 4 states (e.g., rooms) and 2 actions (left, right)

# State 3 is the goal with a reward.

agent = QLearningAgent(n_states=4, n_actions=2)

episodes = 500

for episode in range(episodes):

state = 0 # Start in room 0

done = False

while not done:

action = agent.choose_action(state)

# Simulate environment response

if state == 0 and action == 1: next_state = 1

elif state == 1 and action == 1: next_state = 2

elif state == 2 and action == 1: next_state = 3; reward = 10; done = True

else: next_state = state; reward = -1 # Penalty for wrong moves

if not done: reward = -0.1 # Small cost for each step

agent.learn(state, action, reward, next_state)

state = next_state

agent.update_exploration_rate()

print("Training finished.")

print("Q-table:")

print(agent.q_table)

After training, the Q-table will show higher values for actions that lead towards the goal state, effectively encoding a learned strategy for the agent.

Section 4: Integration, Performance, and Best Practices

Creating AI logic in Python is one thing; making it work inside a high-performance game engine is another. Most major game engines like Unreal Engine and Unity are built on C++ and C#, respectively. Therefore, integrating Python-based AI requires careful consideration.

Integration Strategies

- APIs and Sockets: The game engine can run the Python AI script as a separate process and communicate with it over a local network socket or through a REST API. This is flexible but can introduce latency.

- Embedding the Interpreter: Some engines allow for embedding the Python interpreter directly. This offers lower latency but can be more complex to set up and may be constrained by the engine's architecture.

- Transpiling or Rewriting: For performance-critical logic, developers often prototype in Python and then rewrite the final, optimized algorithm in the engine's native language (C++ or C#).

Performance and Pitfalls

Python's primary drawback in gaming is performance. The Global Interpreter Lock (GIL) and its interpreted nature mean that pure Python code can be significantly slower than compiled C++. To mitigate this:

- Use NumPy: For any heavy mathematical or array-based computations (common in AI), use NumPy. It performs operations in highly optimized, pre-compiled C code, bypassing Python's interpreter loop for massive speed gains.

- Profile Your Code: Use Python's built-in profilers like `cProfile` to identify bottlenecks. Don't optimize prematurely; find what's actually slow first.

- Consider Cython: Cython allows you to write Python-like code that compiles down to C, offering performance that can approach native speeds for critical functions.

Recommendations and Ethical Considerations

When developing game AI, it's best to start simple. Use an FSM for basic behaviors and only introduce complex pathfinding or ML where it genuinely enhances the gameplay. Furthermore, when developing AI that interacts with live, commercial games (e.g., creating a bot), it is absolutely critical to review and respect the game's Terms of Service (TOS). Unauthorized automation is often prohibited and can result in account suspension. Always prioritize ethical development and focus on projects that enhance, rather than exploit, the gaming experience.

Conclusion

The latest python news for developers is clear: the language's role in game AI is rapidly expanding. From structuring predictable yet robust behaviors with Finite State Machines to navigating complex environments with A* and enabling adaptive learning with Q-tables, Python provides a powerful, readable, and versatile toolkit. While performance and integration with mainstream engines present challenges, the solutions and strategies available make it an unparalleled tool for AI prototyping and development. By starting with the fundamentals and gradually incorporating more advanced machine learning techniques, developers can leverage Python to create NPCs and game systems that are not just functional, but truly intelligent, dynamic, and memorable. The quest to build the ultimate AI adventurer has never been more accessible, and Python is the perfect language to begin that journey.