PyTorch Monarch: Revolutionizing Distributed Training with a Single-Controller Architecture

The landscape of deep learning infrastructure is undergoing a seismic shift. For years, distributed training has relied heavily on the Single Program, Multiple Data (SPMD) paradigm. While effective, this approach often introduces significant complexity, requiring developers to manage multi-controller setups that can be brittle and difficult to debug. Enter PyTorch Monarch, a groundbreaking framework designed to dismantle these barriers by introducing a single-controller model for distributed programming. It promises to streamline machine learning workflows, making the management of thousands of GPUs feel as intuitive as running a script on a local laptop.

As demand grows for local LLM deployment and large-scale edge AI applications, the need for efficient, scalable, and user-friendly distributed systems has never been more critical. Monarch addresses friction points in traditional torch.distributed workflows, such as managing per-process environment variables and interpreting stack traces scattered across many processes. By centralizing control, Monarch lets developers write code that retains the feel of single-machine execution while scaling across large hardware meshes.

In this guide, we explore the architecture of PyTorch Monarch, walk through practical implementation strategies, and discuss how the framework fits into the broader Python ecosystem. That ecosystem runs from data tools and LLM frameworks such as LangChain to modern tooling like the uv installer and the Ruff linter.

Section 1: The Paradigm Shift to Single-Controller Architectures

Understanding the Mesh Concept

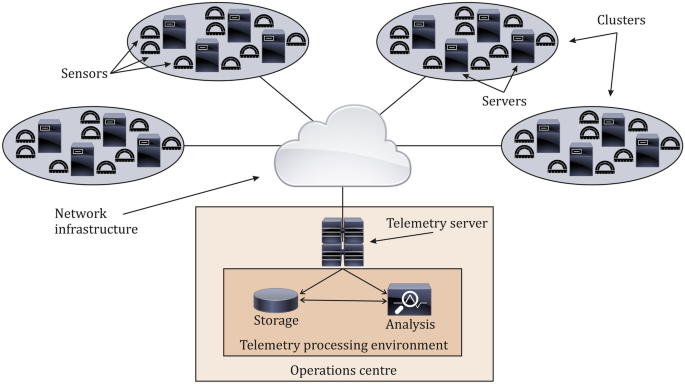

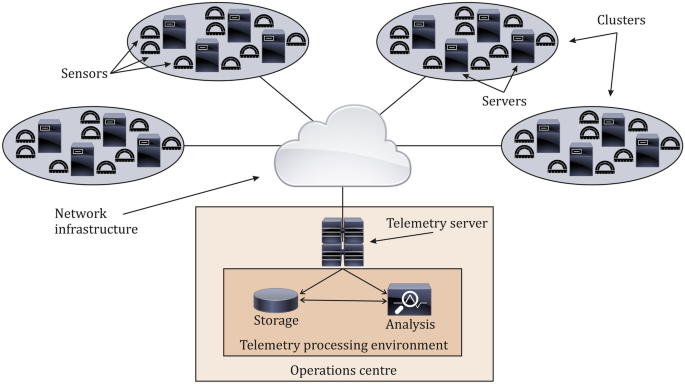

At the heart of Monarch lies the concept of the “Mesh.” In traditional distributed training (for example, with DDP or FSDP), every GPU runs its own Python process. This often leads to synchronization headaches and makes standard Python debuggers and logging cumbersome to use. Monarch flips the script: a single Python process (the controller) orchestrates operations across a logical mesh of devices.
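To make the single-controller idea concrete, here is a toy sketch in plain Python. It is not Monarch code. The ToyController class is hypothetical, and threads stand in for the remote worker processes a real mesh would manage. One controller submits the same function to every simulated worker and gathers the results in rank order, so the calling code never branches on its own rank.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Any, Callable, List


class ToyController:
    """Hypothetical single controller; threads simulate per-GPU workers."""

    def __init__(self, num_workers: int) -> None:
        self.num_workers = num_workers
        self._pool = ThreadPoolExecutor(max_workers=num_workers)

    def broadcast(self, fn: Callable[..., Any], *args: Any) -> List[Any]:
        # Send the same function to every worker, passing each one its rank
        futures = [self._pool.submit(fn, rank, *args) for rank in range(self.num_workers)]
        # Gather results in rank order before handing them back to the caller
        return [f.result() for f in futures]

    def shutdown(self) -> None:
        self._pool.shutdown(wait=True)


def scaled_rank(rank: int, factor: int) -> int:
    # Stand-in for per-device work
    return rank * factor


controller = ToyController(num_workers=4)
print(controller.broadcast(scaled_rank, 10))  # [0, 10, 20, 30]
controller.shutdown()
```

The user-facing code is one sequential script. Fan-out and gather live inside the controller, which is the property the single-controller model is built around.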

This architecture abstracts the physical hardware into a scalable grid. Whether you are running on a single node with 8 GPUs or a cluster with thousands, the code remains largely agnostic to the underlying topology. There is a loose parallel with CPython's work on making the GIL optional and enabling free threading (PEP 703): both aim to make concurrency less of a burden on the programmer. In Monarch's case, the controller coordinates the workers, so the developer does not have to spawn or manage processes by hand.
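One way to picture how a mesh hides topology: every device has a coordinate in a grid, and a flat rank is derived from that coordinate in row-major order. The helpers below are a hypothetical sketch, not Monarch's API. They show that the same code handles an 8-device line or a 4x2 grid just by changing the shape tuple.

```python
from math import prod
from typing import Tuple


def coords_to_rank(coords: Tuple[int, ...], shape: Tuple[int, ...]) -> int:
    """Row-major flattening of a mesh coordinate into a flat device rank."""
    if len(coords) != len(shape):
        raise ValueError("coords and shape must have the same number of dimensions")
    rank = 0
    for c, size in zip(coords, shape):
        if not 0 <= c < size:
            raise ValueError(f"coordinate {c} out of range for a dimension of size {size}")
        rank = rank * size + c
    return rank


def rank_to_coords(rank: int, shape: Tuple[int, ...]) -> Tuple[int, ...]:
    """Inverse of coords_to_rank."""
    if not 0 <= rank < prod(shape):
        raise ValueError("rank out of range for this mesh")
    coords = []
    for size in reversed(shape):
        coords.append(rank % size)
        rank //= size
    return tuple(reversed(coords))


# Same code, two topologies: one node with 8 GPUs, or a 4x2 grid
print(rank_to_coords(5, (8,)))         # (5,)
print(rank_to_coords(5, (4, 2)))       # (2, 1)
print(coords_to_rank((2, 1), (4, 2)))  # 5
```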

Simplicity in Tensor Operations

One of the most compelling features of Monarch is its ability to perform efficient tensor operations across these meshes through a global view of data. A tensor created in Monarch may be sharded across multiple devices, but to the developer it appears as a single object. The experience resembles NumPy's intuitive array manipulation, with distributed execution added on top.
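The "one object, many shards" idea can be sketched without any GPUs. The hypothetical shard_bounds helper below splits one dimension across a number of devices in contiguous blocks. When the split is uneven, the first shards each get one extra row; that is only one convention, and others, such as ceil-sized chunks, are also common. A nested list stands in for a tensor so the shards can be reassembled into the original.

```python
from typing import List, Tuple


def shard_bounds(dim_size: int, num_shards: int) -> List[Tuple[int, int]]:
    """Contiguous [start, stop) ranges; earlier shards absorb any remainder."""
    base, extra = divmod(dim_size, num_shards)
    bounds = []
    start = 0
    for i in range(num_shards):
        stop = start + base + (1 if i < extra else 0)
        bounds.append((start, stop))
        start = stop
    return bounds


# A 10x3 "tensor" as nested lists; row r is [r, r, r]
global_rows = [[r] * 3 for r in range(10)]
bounds = shard_bounds(len(global_rows), 4)
shards = [global_rows[a:b] for a, b in bounds]
print(bounds)  # [(0, 3), (3, 6), (6, 8), (8, 10)]

# The logical view: concatenating the shards recovers the global tensor
reassembled = [row for shard in shards for row in shard]
assert reassembled == global_rows
```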

Let’s look at how one might initialize a Monarch environment and define a device mesh. Unlike the verbose setup of traditional MPI-style launchers, Monarch aims for Pythonic simplicity.

```python
import torch

import monarch
from monarch.distributed import Mesh  # illustrative import path; check Monarch's docs


def initialize_distributed_environment():
    # Define a mesh of devices.
    # In a real scenario, this could discover available GPUs automatically.
    # This abstraction hides the complexity of rank assignment.
    device_mesh = Mesh(
        shape=(4, 2),  # A 4x2 grid of GPUs
        mesh_dim_names=("data_parallel", "model_parallel"),
    )
    print(f"Mesh initialized with {device_mesh.size()} devices.")

    # Create a tensor on the controller; it is sharded across the mesh
    # according to the sharding spec below.
    local_tensor = torch.randn(1024, 1024)

    # Distribute the tensor across the mesh (monarch.distribute is an illustrative name)
    distributed_tensor = monarch.distribute(
        local_tensor,
        device_mesh,
        sharding_spec=["data_parallel", None],  # Shard along the first dimension
    )
    return distributed_tensor


if __name__ == "__main__":
    dt = initialize_distributed_environment()
    print(f"Distributed Tensor Shape: {dt.shape}")

    # Operations look local but execute across the mesh
    result = dt @ dt.T
    print("Matrix multiplication complete.")
```

In the code above, notice the absence of rank checks and dist.init_process_group boilerplate. Annotating code like this with type hints and checking it with MyPy can help ensure that sharding specifications line up with the mesh dimensions.
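The hypothetical local_shard_shape helper below makes the sharding spec concrete. It is plain Python, not part of Monarch, and it computes the per-device block shape under simple assumptions. Each tensor dimension is either mapped to one mesh dimension, in which case it is split evenly along that mesh axis, or marked None, in which case it is replicated. The helper also rejects spec entries that do not name a mesh dimension.

```python
from typing import Optional, Sequence, Tuple


def local_shard_shape(
    global_shape: Tuple[int, ...],
    mesh_shape: Tuple[int, ...],
    mesh_dim_names: Sequence[str],
    sharding_spec: Sequence[Optional[str]],
) -> Tuple[int, ...]:
    """Per-device shape, assuming exact even splits and None meaning replicated."""
    if len(sharding_spec) != len(global_shape):
        raise ValueError("sharding_spec needs one entry per tensor dimension")
    sizes = dict(zip(mesh_dim_names, mesh_shape))
    local = []
    for dim_size, mesh_dim in zip(global_shape, sharding_spec):
        if mesh_dim is None:
            local.append(dim_size)  # replicated: every device keeps the full extent
            continue
        if mesh_dim not in sizes:
            raise ValueError(f"unknown mesh dimension: {mesh_dim!r}")
        parts = sizes[mesh_dim]
        if dim_size % parts:
            raise ValueError(f"{dim_size} is not divisible by {parts}")
        local.append(dim_size // parts)
    return tuple(local)


shape = local_shard_shape(
    (1024, 1024), (4, 2), ("data_parallel", "model_parallel"), ["data_parallel", None]
)
print(shape)  # (256, 1024)
```

Take the 4x2 mesh and sharding_spec=["data_parallel", None] from the snippet above. Under these assumptions, each of the 8 devices would hold a 256x1024 block. The two devices that share a data_parallel coordinate would hold identical copies, because the spec leaves the model_parallel axis unused.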

Section 2: Implementation Details and Ecosystem Integration

Data Loading and Preprocessing

A distributed training framework is only as good as its data pipeline. In the era of large datasets, tools like Polars, DuckDB, and Ibis have dramatically improved data loading speed. Monarch fits naturally alongside these tools because the controller process can ingest data with standard Python libraries before dispatching batches to the device mesh.

The single-controller view also simplifies using PyArrow for zero-copy data handling. Instead of manually sharding dataset files on disk for each rank, the controller can stream data and distribute it dynamically. This is particularly useful for web-scale datasets collected with Scrapy or harvested with browser-automation tools such as Playwright and Selenium.
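Here is a minimal sketch of the controller-side streaming pattern described above, in plain Python with no Monarch calls. The controller reads records from any iterable, which stands in for a Polars, DuckDB, or PyArrow reader. It groups them into global batches and slices each batch into contiguous per-rank micro-batches. The function name and the policy of dropping a ragged final batch are assumptions made for this example.

```python
from itertools import islice
from typing import Iterable, Iterator, List, TypeVar

T = TypeVar("T")


def dispatch_batches(
    records: Iterable[T], global_batch: int, world_size: int
) -> Iterator[List[List[T]]]:
    """Yield, per global batch, one list of micro-batches indexed by rank."""
    if global_batch % world_size:
        raise ValueError("global_batch must be divisible by world_size")
    per_rank = global_batch // world_size
    it = iter(records)
    while True:
        batch = list(islice(it, global_batch))
        if len(batch) < global_batch:
            return  # drop the ragged final batch (one possible policy)
        yield [batch[r * per_rank:(r + 1) * per_rank] for r in range(world_size)]


for step, per_rank_batches in enumerate(dispatch_batches(range(10), global_batch=4, world_size=2)):
    print(step, per_rank_batches)
# 0 [[0, 1], [2, 3]]
# 1 [[4, 5], [6, 7]]
```

Because the controller reads the stream, no per-rank file layout is needed on disk. Changing world_size only changes how each batch is sliced.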

Building a Training Loop

Writing a training loop in Monarch feels remarkably similar to writing one for a single GPU. The complexity of gradient synchronization and optimizer stepping is handled by the framework’s backend. Below is an example of a training step. It borrows the straightforward functional style that Keras users will recognize, applied within the PyTorch Monarch context.

```python
import torch.nn as nn
import torch.optim as optim

import monarch  # module_to_mesh below is an illustrative name


class SimpleTransformer(nn.Module):
    # A single Linear + GELU layer standing in for a real transformer block
    def __init__(self):
        super().__init__()
        self.fc = nn.Linear(1024, 1024)
        self.act = nn.GELU()

    def forward(self, x):
        return self.act(self.fc(x))


def build_training_state(mesh):
    # Instantiate the model on the mesh once, outside the step function,
    # so parameters and optimizer state persist across steps.
    # Monarch handles the parameter sharding automatically.
    model = monarch.module_to_mesh(SimpleTransformer(), mesh)
    optimizer = optim.AdamW(model.parameters(), lr=1e-4)
    loss_fn = nn.MSELoss()
    return model, optimizer, loss_fn


def train_step(model, optimizer, loss_fn, input_data, target):
    # The training step looks standard
    optimizer.zero_grad()

    # Forward pass: operations are dispatched to the mesh
    output = model(input_data)
    loss = loss_fn(output, target)

    # Backward pass: gradients are synchronized across the mesh automatically
    loss.backward()
    optimizer.step()
    return loss.item()


# Mock usage:
# Ideally, input_data is already a distributed tensor
# derived from a high-performance loader like DuckDB or Polars.
# model, optimizer, loss_fn = build_training_state(mesh)
# for input_data, target in loader:
#     loss = train_step(model, optimizer, loss_fn, input_data, target)
```

This approach significantly lowers the barrier to entry. Developers familiar with scikit-learn or standard PyTorch can move to distributed training without needing a PhD in high-performance computing. Centralized control also makes it easier to integrate LlamaIndex for retrieving context in RAG applications during training, since the retrieval logic can live on the controller.
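In the data-parallel case, the gradient synchronization that the backend handles in the training step above is typically an all-reduce that averages gradients across replicas. The sketch below simulates that step in plain Python for a one-parameter linear model with a mean-squared-error loss. It then checks that averaging per-shard gradients from equal-sized shards gives the same result as the full-batch gradient. It illustrates the math only and makes no claim about how Monarch implements the collective.

```python
from typing import List, Sequence


def mse_grad(w: float, xs: Sequence[float], ys: Sequence[float]) -> float:
    """d/dw of mean((w*x - y)^2) over one batch."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


def all_reduce_mean(values: List[float]) -> float:
    """Simulated all-reduce: every replica ends up with the average."""
    return sum(values) / len(values)


w = 0.5
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]

# Two data-parallel replicas, each holding half of the batch
local_grads = [mse_grad(w, xs[:2], ys[:2]), mse_grad(w, xs[2:], ys[2:])]
synced = all_reduce_mean(local_grads)

full_batch = mse_grad(w, xs, ys)
print(synced, full_batch)  # -22.5 -22.5
assert abs(synced - full_batch) < 1e-12
```

The equality holds because each replica's loss is a mean over an equal number of samples. With unequal shard sizes, a plain average would need to be replaced by a sample-weighted one.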

Section 3: Advanced Techniques: Fault Tolerance and Scaling

Robust Fault Handling

One of the most painful aspects of distributed training is node failure. In a traditional MPI setup, if one GPU fails, the entire job often crashes, requiring a restart from the last checkpoint. Monarch’s single-controller model introduces robust fault handling. Since the controller monitors the mesh, it can detect failures and dynamically reconfigure the mesh resources.
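One common way for a controller to notice failures is a heartbeat timeout. Each worker reports in periodically, and any worker silent for longer than a threshold is treated as failed. The sketch below is a hypothetical illustration of that idea, not Monarch's actual mechanism. Timestamps are passed in explicitly so the behavior is deterministic; real code would typically use time.monotonic().

```python
from typing import Dict, List


class HeartbeatMonitor:
    """Hypothetical controller-side tracker of worker liveness."""

    def __init__(self, timeout_s: float) -> None:
        self.timeout_s = timeout_s
        self.last_seen: Dict[str, float] = {}

    def heartbeat(self, worker: str, now: float) -> None:
        self.last_seen[worker] = now

    def failed(self, now: float) -> List[str]:
        return sorted(w for w, t in self.last_seen.items() if now - t > self.timeout_s)

    def healthy(self, now: float) -> List[str]:
        return sorted(w for w, t in self.last_seen.items() if now - t <= self.timeout_s)


monitor = HeartbeatMonitor(timeout_s=5.0)
for worker in ["gpu0", "gpu1", "gpu2"]:
    monitor.heartbeat(worker, now=0.0)
monitor.heartbeat("gpu0", now=4.0)
monitor.heartbeat("gpu2", now=4.0)

# At t=7, gpu1 has been silent for 7 s (> 5 s); a controller could now
# reconfigure the mesh around the remaining healthy workers.
print(monitor.failed(now=7.0))   # ['gpu1']
print(monitor.healthy(now=7.0))  # ['gpu0', 'gpu2']
```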

This resilience is crucial for algorithmic trading and financial models in Python, where uptime and continuous learning are vital. It also benefits automation workflows that run on preemptible cloud instances. Monarch allows for “elastic” training, in which the number of devices can change during execution.
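Elastic training raises a practical question: what happens to the batch size when the device count changes? The sketch below implements one possible policy, an assumption rather than documented Monarch behavior. It keeps the global batch size fixed and recomputes the per-device micro-batch and the number of gradient-accumulation steps whenever the mesh grows or shrinks. The function name is hypothetical.

```python
from typing import Tuple


def plan_elastic_batch(global_batch: int, num_devices: int, max_per_device: int) -> Tuple[int, int]:
    """Return (micro_batch, accumulation_steps) such that
    micro_batch * accumulation_steps * num_devices == global_batch."""
    if global_batch <= 0 or num_devices <= 0 or max_per_device <= 0:
        raise ValueError("all arguments must be positive")
    if global_batch % num_devices:
        raise ValueError("global_batch must be divisible by num_devices")
    per_device = global_batch // num_devices
    # Fewest accumulation steps whose micro-batch fits the per-device limit;
    # terminates because steps == per_device gives a micro-batch of 1.
    steps = 1
    while per_device % steps or per_device // steps > max_per_device:
        steps += 1
    return per_device // steps, steps


print(plan_elastic_batch(512, num_devices=8, max_per_device=32))  # (32, 2)
# After a failure shrinks the mesh to 4 devices, accumulate longer instead
print(plan_elastic_batch(512, num_devices=4, max_per_device=32))  # (32, 4)
```

Holding the global batch constant keeps optimizer hyperparameters such as the learning rate meaningful across resizes. The cost is more accumulation steps per optimizer update on a smaller mesh.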

Scalable Meshes and Heterogeneous Compute

Monarch isn’t limited to homogeneous clusters. The mesh abstraction allows for advanced scheduling strategies: in principle, you could dedicate part of your mesh to data processing (perhaps using Mojo for speed) and another part to tensor computation. This flexibility matters as MicroPython and CircuitPython push Python-based AI toward the edge, and Monarch could eventually orchestrate hybrid workflows spanning cloud GPUs and edge devices.
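Splitting one mesh by role can be sketched as a simple partition of device IDs into sub-meshes. The split_by_role helper and the role names are hypothetical. A real scheduler would also weigh device type, memory, and network locality rather than taking contiguous blocks.

```python
from typing import Dict, List, Sequence


def split_by_role(devices: Sequence[str], roles: Dict[str, int]) -> Dict[str, List[str]]:
    """Assign contiguous blocks of devices to each role, in the dict's order."""
    if any(count < 0 for count in roles.values()):
        raise ValueError("role sizes must be non-negative")
    if sum(roles.values()) != len(devices):
        raise ValueError("role sizes must add up to the number of devices")
    groups: Dict[str, List[str]] = {}
    start = 0
    for role, count in roles.items():
        groups[role] = list(devices[start:start + count])
        start += count
    return groups


devices = [f"gpu{i}" for i in range(8)]
groups = split_by_role(devices, {"data_processing": 2, "compute": 6})
print(groups["data_processing"])  # ['gpu0', 'gpu1']
print(groups["compute"])          # ['gpu2', 'gpu3', 'gpu4', 'gpu5', 'gpu6', 'gpu7']
```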

Here is an example demonstrating how to handle a potential fault and re-dispatch computation, a pattern essential for long-running jobs.

```python
import monarch
from monarch.exceptions import DeviceMeshError  # illustrative exception class


def resilient_execution(mesh, data_loader):
    chk_point = -1  # index of the last batch that completed successfully
    for i, batch in enumerate(data_loader):
        try:
            # Attempt to execute the distributed operation
            perform_heavy_computation(mesh, batch)
        except DeviceMeshError as e:
            print(f"Node failure detected at step {i}: {e}")
            # Heal the mesh (illustrative call): this might involve
            # requesting a new node or shrinking the mesh
            mesh = monarch.heal_mesh(mesh)
            print("Mesh healed. Resuming computation...")
            # Re-run the failed batch once on the healed mesh
            perform_heavy_computation(mesh, batch)
        chk_point = i  # a real job would persist a checkpoint here
    print("Job Complete")
    return chk_point


def perform_heavy_computation(mesh, batch):
    # Placeholder for an actual distributed matmul or model forward pass.
    # This simulates work that might time out or fail on bad hardware.
    return mesh.execute(lambda x: x * 2, batch)
```

This level of control lets developers build systems that are as reliable as they are powerful, bringing the resilience expected of async web services built with Django or FastAPI into high-performance computing.
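The Monarch-specific calls above cannot run without the framework, so here is a self-contained simulation of the same retry-and-heal pattern. Everything in it is hypothetical: FakeMesh, a device that is permanently broken, and a heal step that simply drops failed devices. Unlike the snippet above, it bounds the number of retries per batch, so a mesh that keeps failing raises instead of looping forever.

```python
from typing import Callable, Iterable, List, Set


class DeviceFailure(Exception):
    """Stand-in for a framework-specific mesh error."""


class FakeMesh:
    """Hypothetical mesh: a list of device names plus a set of broken ones."""

    def __init__(self, devices: List[str], broken: Set[str]) -> None:
        self.devices = devices
        self.broken = broken

    def execute(self, fn: Callable[[int], int], batch: int) -> List[int]:
        if not self.devices:
            raise DeviceFailure("no devices left in the mesh")
        bad = [d for d in self.devices if d in self.broken]
        if bad:
            raise DeviceFailure(f"devices down: {bad}")
        # Every device applies fn to the batch
        return [fn(batch) for _ in self.devices]

    def heal(self) -> "FakeMesh":
        # Shrink the mesh to the surviving devices
        return FakeMesh([d for d in self.devices if d not in self.broken], self.broken)


def resilient_run(mesh: FakeMesh, batches: Iterable[int], max_retries: int = 2) -> List[List[int]]:
    results = []
    for step, batch in enumerate(batches):
        for attempt in range(max_retries + 1):
            try:
                results.append(mesh.execute(lambda x: x * 2, batch))
                break
            except DeviceFailure as err:
                if attempt == max_retries:
                    raise  # give up after the retry budget is spent
                print(f"step {step}: {err}; healing mesh")
                mesh = mesh.heal()
    return results


mesh = FakeMesh(["gpu0", "gpu1", "gpu2"], broken={"gpu1"})
print(resilient_run(mesh, [1, 2]))  # [[2, 2], [4, 4]]
```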

Section 4: Best Practices and Optimization

Tooling and Environment Management

To get the most out of PyTorch Monarch, your development environment must be pristine. The Python ecosystem has seen a surge in better package managers: uv or Rye can drastically speed up installing PyTorch and its dependencies, and PDM and Hatch are solid alternatives for dependency and project management.

Security is also paramount. When pulling dependencies onto a distributed cluster, watch PyPI security advisories and check malware-analysis reports for the packages you install. IDE tools such as SonarLint can catch code smells before they propagate to the cluster.

Code Quality and Monitoring

Because the controller code is standard Python, you should enforce strict code quality standards. Use the Ruff linter for fast linting and the Black formatter for consistent style. For type safety, which is critical when defining mesh shapes and tensor dimensions, run MyPy and keep it up to date.
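As an illustration of the type-safety point, here is a hypothetical MeshSpec written with standard typing tools; none of these names come from Monarch. Literal restricts dimension names, so a static checker such as MyPy can flag a misspelled name before the code runs. __post_init__ adds runtime checks that a type checker cannot express, such as requiring positive sizes.

```python
from dataclasses import dataclass
from math import prod
from typing import Literal, Optional, Tuple

DimName = Literal["data_parallel", "model_parallel"]


@dataclass(frozen=True)
class MeshSpec:
    shape: Tuple[int, ...]
    dim_names: Tuple[DimName, ...]

    def __post_init__(self) -> None:
        # Runtime checks that static typing alone cannot enforce
        if len(self.shape) != len(self.dim_names):
            raise ValueError("shape and dim_names must have the same length")
        if any(size <= 0 for size in self.shape):
            raise ValueError("mesh dimensions must be positive")
        if len(set(self.dim_names)) != len(self.dim_names):
            raise ValueError("dimension names must be unique")

    @property
    def size(self) -> int:
        return prod(self.shape)


def check_spec(mesh: MeshSpec, sharding_spec: Tuple[Optional[DimName], ...]) -> None:
    """Reject sharding specs that reference a dimension this mesh lacks."""
    for name in sharding_spec:
        if name is not None and name not in mesh.dim_names:
            raise ValueError(f"mesh has no dimension {name!r}")


mesh = MeshSpec(shape=(4, 2), dim_names=("data_parallel", "model_parallel"))
check_spec(mesh, ("data_parallel", None))
print(mesh.size)  # 8
# A static checker would flag MeshSpec((4, 2), ("data_paralel", "model_parallel")),
# because "data_paralel" is not a valid DimName.
```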

For monitoring, consider Marimo notebooks or Taipy dashboards. These tools can visualize the state of the mesh, memory usage, and loss curves in real time. Unlike static logs, a dashboard connected to the Monarch controller can support interactive debugging.
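A dashboard needs something to poll. In this minimal, hypothetical sketch, the controller keeps a rolling window of recent losses and the latest memory reading per device. It exposes them as a JSON-serializable snapshot that a Marimo or Taipy app could read. The class name, field names, and window size are assumptions for illustration.

```python
import json
from collections import deque
from typing import Deque, Dict


class MeshMetrics:
    """Hypothetical controller-side metrics store for a live dashboard."""

    def __init__(self, window: int = 100) -> None:
        self.losses: Deque[float] = deque(maxlen=window)  # oldest values drop off
        self.memory_gb: Dict[str, float] = {}

    def record_step(self, loss: float, memory_gb: Dict[str, float]) -> None:
        self.losses.append(loss)
        self.memory_gb.update(memory_gb)

    def snapshot(self) -> Dict[str, object]:
        avg = sum(self.losses) / len(self.losses) if self.losses else None
        peak = max(self.memory_gb, key=self.memory_gb.__getitem__) if self.memory_gb else None
        return {
            "steps_in_window": len(self.losses),
            "rolling_loss": avg,
            "peak_memory_device": peak,
            "memory_gb": dict(self.memory_gb),
        }


metrics = MeshMetrics(window=3)
for loss in [1.0, 0.8, 0.6, 0.4]:
    metrics.record_step(loss, {"gpu0": 30.5, "gpu1": 31.0})
print(json.dumps(metrics.snapshot()))
```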

Future-Proofing with Emerging Tech

As you adopt Monarch, keep an eye on adjacent technologies. Rust-based extensions are making performance-critical Python tooling faster. Quantum computing libraries for Python, such as Qiskit, are beginning to explore distributed quantum-classical workloads, where a framework like Monarch could serve as the bridge. For web-facing AI applications, frameworks like Reflex, Flet, and PyScript can be used to build front-ends that communicate with the Monarch controller to trigger training jobs or inference requests.
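How a front-end talks to the controller is up to you. One simple, hypothetical shape is a JSON job request that the controller validates and queues before anything touches the mesh. The schema (job_type, num_devices, config) and the JobRequest class are assumptions for illustration. A Reflex, Flet, or PyScript app would only need to produce the JSON.

```python
import json
import queue
from dataclasses import dataclass, field
from typing import Any, Dict

ALLOWED_JOB_TYPES = {"train", "inference"}


@dataclass
class JobRequest:
    job_type: str
    num_devices: int
    config: Dict[str, Any] = field(default_factory=dict)


def parse_job_request(payload: str) -> JobRequest:
    """Validate a JSON request from a front-end before it reaches the mesh."""
    data = json.loads(payload)
    if not isinstance(data, dict):
        raise ValueError("request must be a JSON object")
    job_type = data.get("job_type")
    if job_type not in ALLOWED_JOB_TYPES:
        raise ValueError(f"unsupported job_type: {job_type!r}")
    num_devices = data.get("num_devices")
    # bool is a subclass of int in Python, so exclude it explicitly
    if not isinstance(num_devices, int) or isinstance(num_devices, bool) or num_devices <= 0:
        raise ValueError("num_devices must be a positive integer")
    return JobRequest(job_type, num_devices, data.get("config", {}))


job_queue: "queue.Queue[JobRequest]" = queue.Queue()
job_queue.put(parse_job_request('{"job_type": "train", "num_devices": 8, "config": {"lr": 0.0001}}'))
print(job_queue.get_nowait())
```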

```python
# Example: integrating linter and type-checker checks in a CI/CD pipeline.
# While not Monarch code specifically, this helps keep your distributed scripts safe.
import subprocess
import sys


def check_code_quality():
    files = ["train_monarch.py", "model_def.py"]

    print("Running Ruff linter...")
    # Ruff is incredibly fast, essential for large codebases
    result_ruff = subprocess.run(["ruff", "check", *files], capture_output=True, text=True)
    if result_ruff.returncode != 0:
        print("Ruff found issues:", result_ruff.stdout)
        sys.exit(1)

    print("Running MyPy type checker...")
    # Type hints save lives in distributed systems
    result_mypy = subprocess.run(["mypy", *files], capture_output=True, text=True)
    if result_mypy.returncode != 0:
        print("Type errors detected:", result_mypy.stdout)
        sys.exit(1)

    print("Code quality checks passed. Ready for deployment to Mesh.")


if __name__ == "__main__":
    check_code_quality()
```

Conclusion

PyTorch Monarch represents a significant leap forward in the democratization of distributed computing. By moving away from complex multi-controller setups to a unified single-controller model, it reduces the cognitive load on developers and opens the door for more robust, scalable, and maintainable machine learning workflows. The ability to manage resources dynamically, coupled with features like scalable meshes and advanced fault handling, ensures that efficient tensor operations are no longer the exclusive domain of tech giants.

As the ecosystem continues to evolve, with LangChain extending LLM capabilities, pandas improving data handling, and Python security tools maturing, Monarch sits at the intersection of usability and performance. Whether you are building local LLM solutions, running Python tests at massive scale, or exploring the Litestar framework for serving models, adopting Monarch could be the key to unlocking the next level of AI productivity.

The future of AI infrastructure is not just about raw speed; it is about the developer experience. With PyTorch Monarch, the community is one step closer to a world where distributed training is as simple as import monarch.