The Evolution of Python Automation: Building Intelligent Agents and High-Performance Workflows

Introduction

Automation has long been the cornerstone of Python’s popularity. From simple file manipulation scripts to complex cron jobs, the language’s readability and vast standard library made it the default choice for system administrators and developers alike. However, the landscape of Python automation is undergoing a radical transformation. We are moving away from brittle, hard-coded scripts toward intelligent, resilient agents capable of reasoning and adapting to change.

The convergence of modern web frameworks, high-performance data tools, and Large Language Models (LLMs) has birthed a new era. Developers are no longer just writing instructions; they are architecting autonomous workflows. With the advent of Edge AI and Local LLM integration, automation can now handle unstructured data and dynamic web environments that previously broke standard scrapers. Furthermore, the ecosystem itself is evolving rapidly with tools like the Uv installer and Rye manager revolutionizing package management, while the impending GIL removal (Global Interpreter Lock) and Free threading capabilities in upcoming Python versions promise to unlock true parallelism.

This article explores the cutting edge of Python automation. We will delve into browser orchestration with Playwright python, high-speed data processing with Polars dataframe, and the integration of AI agents using LangChain updates. Whether you are interested in Algo trading, Python finance, or simply optimizing your daily workflows, mastering these modern tools is essential.

Section 1: Next-Generation Web Automation

For years, Selenium was the de facto standard for web automation. While still relevant (check recent Selenium news), the community is rapidly shifting toward more modern, asynchronous tools. Playwright python has emerged as a powerhouse, offering better reliability, native async support, and the ability to handle modern Single Page Applications (SPAs) with ease. Unlike older tools, Playwright can intercept network requests, handle multiple contexts, and execute faster, making it ideal for complex scraping tasks or testing.

When combined with Scrapy updates, developers can build hybrid architectures: using Scrapy for broad crawling and Playwright for rendering JavaScript-heavy pages. However, the real game-changer is the application of AI to web interaction. Instead of relying on fragile CSS selectors that break whenever a website updates its UI, we can now use visual AI agents to interpret the page structure.

Implementing Robust Browser Automation

Below is an example of using Playwright asynchronously to navigate a dynamic page, wait for specific network conditions, and extract data. This approach is significantly more stable than sleep-based waits.

import asyncio

from playwright.async_api import async_playwright

from typing import List, Dict

async def scrape_dynamic_content(url: str) -> List[Dict[str, str]]:

"""

Navigates to a URL and extracts product data using Playwright.

Demonstrates async/await patterns and robust selector handling.

"""

data_points = []

async with async_playwright() as p:

# Launch browser in headless mode for automation efficiency

browser = await p.chromium.launch(headless=True)

context = await browser.new_context(

user_agent="Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36"

)

page = await context.new_page()

try:

# Go to page and wait for network to be idle (ensures JS is loaded)

await page.goto(url, wait_until="networkidle")

# Wait for a specific element to appear before scraping

await page.wait_for_selector(".product-card", timeout=5000)

products = await page.query_selector_all(".product-card")

for product in products:

title = await product.query_selector(".title")

price = await product.query_selector(".price")

if title and price:

data_points.append({

"title": await title.inner_text(),

"price": await price.inner_text()

})

except Exception as e:

print(f"Error during automation: {e}")

# In a real scenario, integrate with logging or Sentry

finally:

await browser.close()

return data_points

if __name__ == "__main__":

# Run the async loop

result = asyncio.run(scrape_dynamic_content("https://example.com/products"))

print(f"Extracted {len(result)} items.")This script demonstrates the basics, but modern automation often requires bypassing anti-bot measures. Techniques involving Python security research and Malware analysis sandboxes often utilize similar headless browser technologies to inspect malicious URLs safely. By integrating Ruff linter and Black formatter into your development pipeline, you ensure these scripts remain maintainable as they grow in complexity.

Section 2: High-Performance Data Processing

Once your automation script retrieves data, processing it efficiently is the next bottleneck. While Pandas updates continue to improve performance (especially with PyArrow backends), the emergence of Polars dataframe and DuckDB python has redefined what is possible on a single machine. Polars, written in Rust (highlighting the Rust Python trend), offers lazy evaluation and multi-threaded execution that can be orders of magnitude faster than traditional Pandas for large datasets.

For those building data pipelines that need to be backend-agnostic, the Ibis framework allows you to write Python code that compiles to SQL, executing on DuckDB, PostgreSQL, or BigQuery without changing your logic. This is crucial for Algo trading and Python finance applications where latency and data volume are critical factors.

Polars vs. Pandas for Automation Pipelines

Here is how you might process a large dataset scraped from the web using Polars to perform aggregations instantly. This utilizes the PyArrow updates under the hood for zero-copy memory handling.

import polars as pl

import numpy as np

from datetime import datetime, timedelta

def process_financial_data(file_path: str):

"""

Demonstrates high-performance data processing using Polars.

Uses lazy evaluation to optimize query planning before execution.

"""

# Create a LazyFrame - no data is read until .collect() is called

q = (

pl.scan_csv(file_path)

.filter(pl.col("transaction_date") > datetime(2023, 1, 1))

.with_columns([

# vectorized string manipulation

pl.col("ticker").str.to_uppercase().alias("clean_ticker"),

# Calculate volatility using window functions

pl.col("price")

.std()

.over("ticker")

.alias("volatility")

])

.group_by("clean_ticker")

.agg([

pl.col("price").mean().alias("avg_price"),

pl.col("volume").sum().alias("total_volume"),

pl.col("volatility").first().alias("risk_factor")

])

.sort("total_volume", descending=True)

)

# Execute the query graph

# Polars optimizes this plan (predicate pushdown, projection pushdown)

df_result = q.collect()

return df_result

# Example usage with mock data generation

if __name__ == "__main__":

# Generate dummy data for the example

data = {

"transaction_date": [datetime.now() - timedelta(days=i) for i in range(1000)],

"ticker": ["aapl", "googl", "msft", "aapl"] * 250,

"price": np.random.uniform(100, 200, 1000),

"volume": np.random.randint(1000, 5000, 1000)

}

# Save to CSV to simulate an input file

pl.DataFrame(data).write_csv("trades.csv")

# Process

summary = process_financial_data("trades.csv")

print(summary)This approach is vital when automating reports involving millions of rows. The integration of NumPy news and vectorization ensures that Python remains the glue code while optimized C/Rust libraries handle the heavy lifting.

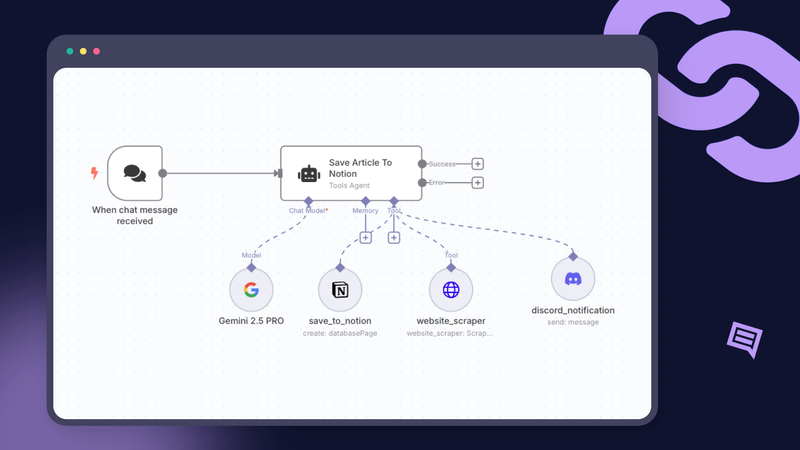

Section 3: The AI-Driven Automation Agent

The most exciting development in Python automation is the integration of Generative AI. We are moving from “scripts” to “agents.” Using LangChain updates or LlamaIndex news, developers can create automation tools that understand natural language. This is particularly useful for parsing unstructured text, summarizing emails, or making decisions based on fuzzy logic.

Imagine an automation workflow that reads customer support tickets. Traditional Regex would fail on typos or slang. An agent powered by a Local LLM (like Llama 3 running via Ollama) can understand sentiment and intent. This brings us closer to the concept of autonomous agents that can browse the web, similar to recent innovations in the open-source community.

Building a Simple Decision Agent

The following example uses a mock LLM interface (representative of LangChain or direct API calls) to parse unstructured log data, a common task in DevOps and Scikit-learn updates pipelines where data cleaning is required.

import json

from typing import Optional

# Mocking an LLM client for demonstration purposes

# In production, you would use: from langchain_openai import ChatOpenAI

class MockLLM:

def predict(self, prompt: str) -> str:

# Simulating an AI response extracting JSON from text

return """

{

"severity": "CRITICAL",

"service": "payment_gateway",

"error_code": "503",

"suggested_action": "restart_service"

}

"""

def analyze_log_entry(log_text: str) -> Optional[dict]:

"""

Uses an LLM to parse unstructured log messages into structured JSON.

This enables automation to react to fuzzy inputs.

"""

llm = MockLLM()

prompt = f"""

You are a DevOps automation agent. Analyze the following log entry.

Extract the severity, service name, error code, and a suggested action.

Return ONLY valid JSON.

Log Entry: "{log_text}"

"""

try:

response = llm.predict(prompt)

structured_data = json.loads(response.strip())

return structured_data

except json.JSONDecodeError:

print("Failed to parse LLM response")

return None

def execute_remediation(action_plan: dict):

"""

Takes the structured decision from the AI and executes Python logic.

"""

if action_plan['severity'] == "CRITICAL":

print(f"⚠️ ALERT: {action_plan['service']} is down!")

print(f"🤖 AI Agent initiating: {action_plan['suggested_action']}...")

# Here you would call subprocess or an API to restart the service

else:

print("Log entry is nominal. No action required.")

if __name__ == "__main__":

raw_log = "Error at 14:00: The payment_gateway refused connection with 503. It seems overloaded."

decision = analyze_log_entry(raw_log)

if decision:

execute_remediation(decision)This pattern is fundamental to modern automation. By decoupling the “understanding” logic (handled by AI) from the “execution” logic (handled by Python), you create systems that are incredibly resilient to change.

Section 4: Modern Tooling, UI, and Best Practices

To build these advanced systems, your development environment must be top-tier. The Python packaging landscape has seen massive improvements. Uv installer is gaining traction for its incredible speed in resolving dependencies, challenging PDM manager and Hatch build. Meanwhile, Rye manager offers an all-in-one experience for managing Python versions and dependencies.

For code quality, Type hints are no longer optional for serious automation projects. Tools like MyPy updates and SonarLint python help catch bugs before runtime. When combined with Ruff linter, your CI/CD pipelines become lightning fast.

User Interfaces for Automation

Automation doesn’t always have to be a headless script. New frameworks allow Python developers to build web UIs for their tools without writing JavaScript. Reflex app, Flet ui, and Taipy news are excellent for creating dashboards to monitor your bots. PyScript web even allows Python to run directly in the browser via WebAssembly.

Advanced Async and Type Safety

Below is an example of a modern, type-safe automation structure using FastAPI news concepts (Pydantic models) and asynchronous execution, which prepares your code for the future of Django async and Free threading.

import asyncio

from pydantic import BaseModel, Field

from typing import List

import random

# Define data models for strict type validation

class AutomationTask(BaseModel):

task_id: str

priority: int = Field(default=1, ge=1, le=5)

payload: dict

class TaskResult(BaseModel):

task_id: str

status: str

processed_data: str

async def process_task(task: AutomationTask) -> TaskResult:

"""

Simulates a heavy I/O bound automation task (e.g., API calls, DB writes).

"""

print(f"Starting task {task.task_id} with priority {task.priority}")

# Simulate network delay

await asyncio.sleep(random.uniform(0.5, 2.0))

# Logic mimicking complex processing

result_str = f"Processed {len(task.payload)} keys"

return TaskResult(

task_id=task.task_id,

status="SUCCESS",

processed_data=result_str

)

async def main_scheduler():

"""

Orchestrates multiple automation tasks concurrently.

"""

tasks = [

AutomationTask(task_id="A1", priority=5, payload={"data": "raw"}),

AutomationTask(task_id="B2", priority=3, payload={"user": "admin"}),

AutomationTask(task_id="C3", priority=1, payload={"config": "update"}),

]

# Run tasks concurrently using asyncio.gather

# This is much more efficient than sequential execution

results = await asyncio.gather(*(process_task(t) for t in tasks))

for res in results:

print(f"Task {res.task_id}: {res.status} -> {res.processed_data}")

if __name__ == "__main__":

asyncio.run(main_scheduler())Section 5: Specialized Domains and Future Tech

Python automation extends far beyond web scraping. in the realm of IoT, MicroPython updates and CircuitPython news allow automation logic to run on microcontrollers, bridging the physical and digital worlds. In the scientific domain, PyTorch news and Keras updates are enabling automated model training pipelines (MLOps), while Qiskit news hints at a future where Python quantum computing automates complex optimization problems.

Furthermore, the development of the Mojo language (a superset of Python) promises C-level performance with Python syntax, which could revolutionize how we write high-frequency automation scripts. Developers using Marimo notebooks are already experiencing a more reactive, reproducible way to prototype these automation flows compared to traditional Jupyter environments.

Conclusion

The landscape of Python automation has matured significantly. It is no longer enough to write a linear script that clicks buttons. Today’s automation engineers must leverage Playwright for robust browser interaction, Polars for high-speed data manipulation, and LangChain to inject intelligence into their workflows.

As we look toward Python 3.13+ and the removal of the GIL, the performance gap between Python and compiled languages narrows, making Python an even more attractive choice for high-concurrency automation. Whether you are automating Python finance models, building Edge AI agents, or simply organizing your files, adopting these modern tools and practices will future-proof your skills and your systems. Start integrating Type hints, explore Async patterns, and don’t be afraid to let AI agents handle the complexity of the modern web.