Beyond Standard Pandas: Scaling Data Workflows with Modin, Polars, and PyArrow

For over a decade, Pandas has been the undisputed king of data manipulation in the Python ecosystem. It provided the intuitive DataFrame abstraction that bridged the gap between academic research and production-grade data science. However, as datasets have grown rapidly, driven by edge AI, local LLM training, and massive IoT sensor networks, the limitations of standard Pandas have become increasingly apparent. Historically bound to a single core and to eager execution, the library often struggles with datasets that exceed available RAM or require complex, iterative transformations.

Fortunately, Pandas itself and the broader Python data ecosystem have evolved dramatically. Heavy computation no longer has to run in pure CPython: with Rust-based libraries exposed to Python, memory-efficient backends, and distributed computing frameworks, developers can now scale their workflows without rewriting their entire codebase. This article explores how to move beyond the limits of vanilla Pandas using modern tools like Modin, the PyArrow backend, and Polars, while also touching on the tooling required to maintain these high-performance environments.

The Evolution of the DataFrame: PyArrow and Pandas 2.0

Before diving into distributed computing, it is essential to understand the architectural shift occurring within Pandas itself. Starting with Pandas 2.0, you can use Apache Arrow, via PyArrow, as the backend memory format. Historically, Pandas relied on NumPy arrays. NumPy remains central to numerical computing, but the Arrow format offers significant advantages for mixed-type DataFrames, particularly for string processing and missing-value handling.

Memory Efficiency and Speed

Arrow-backed columns can significantly lower memory footprints and allow zero-copy data exchange with other Arrow-based tools. This is a crucial step before attempting to parallelize code, because optimization is often more effective than parallelization. Arrow's compute kernels are also built to take advantage of SIMD instructions on modern CPUs.

This shift also fits the broader move toward stricter typing in Python. Type hints checked with mypy help keep data pipelines robust, and combined with the Ruff linter or the Black formatter, they give you a codebase that is not only fast but also maintainable.

```python
import time

import numpy as np
import pandas as pd

# Generate a large dataset to demonstrate backend differences
rows = 1_000_000
data = {
    "text_col": ["string_value"] * rows,
    "int_col": np.random.randint(0, 100, rows),
    "float_col": np.random.rand(rows),
}

# Traditional NumPy-backed DataFrame (strings stored as Python objects)
df_numpy = pd.DataFrame(data)
print(f"NumPy Backend Memory: {df_numpy.memory_usage(deep=True).sum() / 1024**2:.2f} MB")

# PyArrow-backed DataFrame (Pandas 2.0+, requires pyarrow)
# dtype_backend="pyarrow" converts every column to an Arrow-backed dtype
df_arrow = pd.DataFrame(data).convert_dtypes(dtype_backend="pyarrow")
print(f"PyArrow Backend Memory: {df_arrow.memory_usage(deep=True).sum() / 1024**2:.2f} MB")

# Performance comparison on string operations
# perf_counter() is a monotonic, high-resolution clock suited to timing
start = time.perf_counter()
df_numpy["text_col"].str.upper()
print(f"NumPy String Op: {time.perf_counter() - start:.4f}s")

start = time.perf_counter()
df_arrow["text_col"].str.upper()
print(f"PyArrow String Op: {time.perf_counter() - start:.4f}s")
```

In the example above, the Arrow backend typically reduces memory usage for string columns substantially and speeds up string operations, which benefits preprocessing pipelines such as those built with scikit-learn.

Scaling Instantly with Modin

While backend optimizations are powerful, they don’t solve the single-core limitation of standard Pandas. This is where Modin enters the picture. Modin is designed to be a drop-in replacement for Pandas that parallelizes your workflows across all available CPU cores. It acts as a high-level abstraction layer, utilizing distributed compute engines like Ray or Dask underneath.
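To give a feel for partition-based parallelism, here is a toy map-reduce sketch of a grouped mean in plain Pandas. It is not Modin's implementation. The partition count, the column names, and the use of a thread pool in place of Ray or Dask workers are all assumptions made for illustration.

```python
from concurrent.futures import ThreadPoolExecutor

import numpy as np
import pandas as pd


def partial_agg(part: pd.DataFrame) -> pd.DataFrame:
    # Map step: per-partition sum and count of "sales" for each category
    return part.groupby("category")["sales"].agg(["sum", "count"])


def partitioned_mean(df: pd.DataFrame, n_partitions: int = 4) -> pd.Series:
    # Split the rows into contiguous partitions of roughly equal size
    bounds = np.linspace(0, len(df), n_partitions + 1, dtype=int)
    parts = [df.iloc[start:stop] for start, stop in zip(bounds[:-1], bounds[1:])]

    # Run the map step on a worker pool (threads here, to keep the sketch simple)
    with ThreadPoolExecutor() as pool:
        partials = list(pool.map(partial_agg, parts))

    # Reduce step: add up partial sums and counts per category, then divide
    combined = pd.concat(partials).groupby(level=0).sum()
    return combined["sum"] / combined["count"]


df = pd.DataFrame({"category": list("abcab" * 2), "sales": list(range(10))})
print(partitioned_mean(df))
```

Carrying sums and counts rather than per-partition means is the key design choice: averaging partial means would give the wrong answer whenever partitions hold different numbers of rows for a category.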

The “One-Line” Change

The philosophy of Modin is simple: you shouldn't have to learn a new API to process big data. If you are working on Python automation scripts or algorithmic trading backtests, rewriting logic for Spark is often overkill. Modin implements the Pandas API on top of a partitioned DataFrame and distributes those partitions across your cores.

This is particularly relevant given the ongoing work on removing the GIL: Python 3.13 introduced an experimental free-threaded build (PEP 703). While the Global Interpreter Lock has historically hindered thread-based parallelism, Modin sidesteps it by running work in separate worker processes managed by its engine. This makes it an excellent tool for heavy ETL jobs, malware analysis on large logs, or preparing data for quantum computing experiments with Qiskit.
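Whether thread-based parallelism can help at all depends on the interpreter build. The sketch below uses only the standard library to report which build you are running. Note that `sys._is_gil_enabled()` is a private helper added in Python 3.13, so the code checks for it before calling it.

```python
import sys
import sysconfig


def gil_status() -> str:
    # Py_GIL_DISABLED is 1 on free-threaded (PEP 703) builds, and 0 or None otherwise
    if not sysconfig.get_config_var("Py_GIL_DISABLED"):
        return "standard build: GIL enabled"
    # sys._is_gil_enabled() is a private helper added in Python 3.13; even on a
    # free-threaded build the GIL can be switched back on at runtime
    checker = getattr(sys, "_is_gil_enabled", None)
    gil_on = checker() if checker is not None else False
    return "free-threaded build: GIL " + ("enabled" if gil_on else "disabled")


print(gil_status())
```

On a standard build, process-based engines such as the ones Modin uses remain the practical route to using every core.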

```python
import modin.config as cfg

# Optional: select the execution engine ("ray" or "dask") before the first Modin operation
cfg.Engine.put("ray")

# Standard Pandas import:
# import pandas as pd
# Modin import - the only change required in the rest of the code
import modin.pandas as pd
from sklearn.model_selection import train_test_split

# Load a massive CSV; Modin reads it in parallel, unlike standard Pandas
df = pd.read_csv("large_dataset.csv")

# Perform heavy aggregations; this operation uses all CPU cores
result = df.groupby("category_column").agg({
    "sales": "sum",
    "profit": "mean",
    "inventory": "max",
})
print(result.head())

# Integration with scikit-learn
# Modin implements the Pandas API, so train_test_split usually accepts these
# objects directly; call .to_numpy() if a library needs plain NumPy arrays
X = df.drop("target", axis=1)
y = df["target"]
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2)
```

Modin shines on powerful workstations (e.g., 16+ cores) where standard Pandas would leave most cores idle. It can also be used inside marimo notebooks, a reactive alternative to Jupyter that is gaining traction for reproducible research.
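If the same script has to run on machines without Modin, a guarded import keeps it portable. This is a sketch: the column names are hypothetical, and it assumes that whenever Modin is installed, one of its engines is installed too.

```python
try:
    # Parallel execution when Modin (and one of its engines) is installed
    import modin.pandas as pd

    BACKEND = "modin"
except ImportError:
    # Fall back to standard, single-core Pandas with the same API
    import pandas as pd

    BACKEND = "pandas"

# Hypothetical columns, for illustration only
df = pd.DataFrame({"category": ["a", "b", "a"], "sales": [1, 2, 3]})
totals = df.groupby("category")["sales"].sum()
print(BACKEND, totals.to_dict())
```

Because Modin mirrors the Pandas API, everything after the import stays identical on both paths.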

Beyond Pandas: Polars and The Rust Revolution

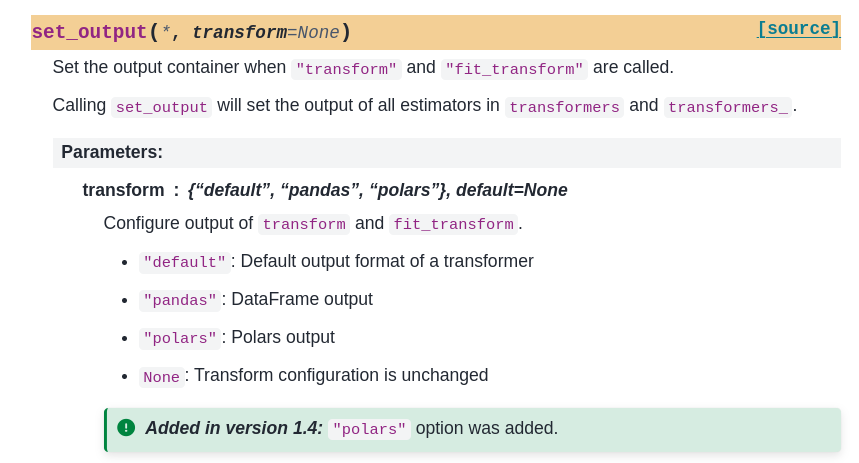

Sometimes, wrapping Pandas is not enough. The Polars DataFrame library represents a fundamental rethink of data manipulation. Polars is written in Rust and exposed to Python through bindings, so its core engine runs outside the Python interpreter. Unlike Pandas (even with Modin), Polars includes a query optimizer. In lazy mode, when you chain operations, Polars doesn't execute them immediately. Instead, it builds a query plan, optimizes it (for example, with predicate pushdown and projection pushdown), and then executes it in parallel.
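Predicate and projection pushdown are easier to grasp with a toy version. The standard-library sketch below is purely conceptual and is not how Polars works internally. It applies the filter and the column selection while rows are being scanned, rather than after the whole file has been loaded. The function name `scan_rows` is hypothetical. With CSV, every row still has to be parsed; columnar formats like Parquet let a real engine skip unneeded columns on disk entirely.

```python
import csv
import io
from typing import Callable, Dict, Iterable, Iterator, List

Row = Dict[str, str]


def scan_rows(
    lines: Iterable[str], columns: List[str], predicate: Callable[[Row], bool]
) -> Iterator[Row]:
    # Rows are parsed one at a time. The predicate runs during the scan
    # ("predicate pushdown") and unneeded columns are dropped immediately
    # ("projection pushdown"), so later steps only ever see matching rows
    # restricted to the requested columns.
    for row in csv.DictReader(lines):
        if predicate(row):
            yield {col: row[col] for col in columns}


log = io.StringIO(
    "endpoint,status_code,response_time,ip_address\n"
    "/a,200,10,1.1.1.1\n"
    "/b,500,99,2.2.2.2\n"
    "/a,200,30,3.3.3.3\n"
)
rows = list(scan_rows(log, ["endpoint", "response_time"], lambda r: r["status_code"] == "200"))
print(rows)
```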

Lazy Execution and Streaming

For developers dealing with datasets larger than RAM, which are common when preparing training data for PyTorch or ingesting documents into vector databases with LangChain, Polars offers a streaming mode. It processes data in batches, so a single laptop can handle datasets far larger than its memory, a task that traditionally required a cluster.
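Polars handles the batching internally in streaming mode. For comparison, the same out-of-core idea can be sketched in plain Pandas with `read_csv(chunksize=...)`. This is a swapped-in technique, not Polars' engine: each chunk is filtered and reduced to partial sums and counts, so only one chunk sits in memory at a time. The column names mirror the log example in this section and are assumptions.

```python
import io

import pandas as pd


def mean_latency_by_endpoint(source, chunksize: int = 100_000) -> pd.Series:
    # Running totals across chunks; only one chunk is held in memory at a time
    sums = pd.Series(dtype="float64")
    counts = pd.Series(dtype="float64")
    columns = ["endpoint", "status_code", "response_time"]
    with pd.read_csv(source, chunksize=chunksize, usecols=columns) as reader:
        for chunk in reader:
            ok = chunk[chunk["status_code"] == 200]
            grouped = ok.groupby("endpoint")["response_time"]
            # Keep sums and counts (not means) so chunks combine exactly
            sums = sums.add(grouped.sum(), fill_value=0)
            counts = counts.add(grouped.count(), fill_value=0)
    return (sums / counts).sort_values(ascending=False)


sample = io.StringIO(
    "endpoint,status_code,response_time\n"
    "/a,200,10\n/b,200,40\n/a,500,99\n/a,200,30\n/b,200,20\n"
)
print(mean_latency_by_endpoint(sample, chunksize=2))
```

The Polars version expresses the same computation declaratively and leaves batching, parallelism, and memory management to the engine.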

Polars' lazy, streaming approach is similar to DuckDB, whose Python API brings OLAP SQL capabilities to local files. The Ibis framework goes a step further: it is emerging as a common interface that lets you write code once and run it on Polars, DuckDB, or even BigQuery, decoupling the syntax from the execution engine.

```python
import polars as pl

# Create a LazyFrame: scan_csv loads nothing yet, it only builds a query plan
# (ideal for files larger than RAM)
q = (
    pl.scan_csv("massive_log_data.csv")
    .filter(pl.col("status_code") == 200)
    .group_by("endpoint")
    .agg([
        pl.col("response_time").mean().alias("avg_latency"),
        pl.col("ip_address").n_unique().alias("unique_visitors"),
    ])
    .sort("avg_latency", descending=True)
)

# Print the optimized query plan (shows the optimizations applied)
print(q.explain())

# Execute the query and collect the result into memory.
# streaming=True enables out-of-core processing; recent Polars releases
# deprecate this flag in favor of q.collect(engine="streaming")
df_result = q.collect(streaming=True)
print(df_result)

# Convert to Pandas with Arrow-backed columns if needed for legacy compatibility
pandas_df = df_result.to_pandas(use_pyarrow_extension_array=True)
```

Modern Tooling for High-Performance Data Stacks

Scaling your code is only half the battle; scaling your development environment is the other. The Python packaging landscape has seen massive improvements. To effectively manage libraries like Modin, Ray, and Polars, relying on the standard pip can be slow and prone to dependency hell.

Package Management: Uv, Rye, and PDM

The uv installer, written in Rust, installs packages far faster than pip, which speeds up CI/CD pipelines for FastAPI applications or async Django backends. Similarly, Rye and PDM offer modern project management experiences comparable to Rust's Cargo or Node's npm, with lockfiles and deterministic builds. This matters for Python security: pinning exact versions (ideally with hashes) reduces the risk of supply-chain attacks through unexpected or compromised dependency releases, although no tool can rule them out entirely.
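Hash pinning is what lets a lockfile detect a tampered artifact: the installer compares the downloaded file's digest against the recorded one. The sketch below shows that check with the standard library. It is illustrative only, since installers such as pip with `--require-hashes` perform this verification for you, and the file name and contents are hypothetical.

```python
import hashlib
import hmac
import tempfile
from pathlib import Path


def sha256_of(path: Path, block_size: int = 65536) -> str:
    # Stream the file in blocks so large wheels need not fit in memory
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for block in iter(lambda: fh.read(block_size), b""):
            digest.update(block)
    return digest.hexdigest()


def verify_artifact(path: Path, expected_sha256: str) -> bool:
    # Constant-time comparison against the digest recorded in the lockfile
    return hmac.compare_digest(sha256_of(path), expected_sha256.lower())


# Demo: a throwaway file stands in for a downloaded package (hypothetical name)
with tempfile.TemporaryDirectory() as tmp:
    artifact = Path(tmp) / "example_pkg-1.0-py3-none-any.whl"
    artifact.write_bytes(b"pretend wheel contents")
    recorded = hashlib.sha256(b"pretend wheel contents").hexdigest()
    ok = verify_artifact(artifact, recorded)
    tampered = verify_artifact(artifact, "0" * 64)

print(ok, tampered)  # True False
```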

Quality Assurance and UI

When building data applications, perhaps using Taipy, Flet, or the Reflex framework for the frontend, code quality is paramount. Integrating SonarLint into your IDE, along with pytest plugins for data validation, improves reliability. For web-based data visualization, PyScript lets you run Python, including Pandas via Pyodide, directly in the browser. It can also run on MicroPython, which opens up browser-based IoT dashboards to MicroPython and CircuitPython developers.
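A data-validation test can be as simple as a plain pytest function: pytest collects functions whose names start with `test_` (in files named like `test_*.py`) and reports any failing `assert`. In this sketch, `load_readings` is a hypothetical stand-in for your real loader, and the 0 to 100 range mirrors the sensor example in this section.

```python
import pandas as pd


def load_readings() -> pd.DataFrame:
    # Hypothetical loader; in a real project this would read your actual data
    return pd.DataFrame({"sensor_id": ["A1", "A2"], "value": [45.5, 12.0]})


def test_readings_are_valid() -> None:
    df = load_readings()
    # Schema: exactly the expected columns, in order
    assert list(df.columns) == ["sensor_id", "value"]
    # No missing identifiers
    assert df["sensor_id"].notna().all()
    # Domain rule: readings must fall in the inclusive 0 to 100 range
    assert df["value"].between(0.0, 100.0).all()
```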

```python
# Example of a modern data validation pattern using Pydantic (v2 API)
# Useful for validating data before processing with Pandas/Modin
from typing import Optional

import pandas as pd
from pydantic import BaseModel, Field, ValidationError


class SensorReading(BaseModel):
    sensor_id: str
    timestamp: int
    value: float = Field(..., ge=0.0, le=100.0)  # Value must be between 0 and 100
    location: Optional[str] = None


data_batch = [
    {"sensor_id": "A1", "timestamp": 162515, "value": 45.5, "location": "Roof"},
    {"sensor_id": "A2", "timestamp": 162516, "value": 150.0, "location": "Basement"},  # Invalid
]

valid_records = []
for record in data_batch:
    try:
        reading = SensorReading(**record)
        # model_dump() is the Pydantic v2 replacement for .dict()
        valid_records.append(reading.model_dump())
    except ValidationError as e:
        print(f"Skipping invalid record: {e}")

# Convert validated data to a DataFrame
df_clean = pd.DataFrame(valid_records)
print(df_clean)
```

Best Practices and Optimization Strategies

Adopting these advanced tools requires a shift in mindset. Here are key strategies to ensure success:

- Profile Before You Scale: Don't jump to Modin or Polars immediately. Use profiling tools to find out whether your bottleneck is I/O or CPU. Sometimes optimizing a data loader (for example, a Keras input pipeline) or compiling a hot loop as a C extension through a Hatch build hook is enough.

- Format Awareness: Prefer Parquet or Feather (Arrow) over CSV. These binary, typed formats are usually much faster to read and write than CSV, and they suit the efficient data retrieval that RAG systems built with tools like LlamaIndex depend on.

- Environment Isolation: Use Rye or uv to keep your data science environments clean. Dependency conflicts between TensorFlow, PyTorch, and Ray are common and painful without strict management.

- Testing: Use property-based testing with Hypothesis, which integrates with pytest through its plugin, to verify that your parallelized Modin logic returns the same results as standard Pandas.
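The testing advice can be sketched without extra dependencies: generate many random inputs, run the parallel-style logic and plain Pandas on each, and require identical results. This hand-rolled loop is a stand-in for Hypothesis, which would also shrink a failing input to a minimal example. `chunked_sum` is a hypothetical function under test, not Modin code.

```python
import numpy as np
import pandas as pd


def chunked_sum(df: pd.DataFrame, n_chunks: int) -> pd.Series:
    # Hypothetical "parallel-style" logic under test: aggregate chunks, then combine
    bounds = np.linspace(0, len(df), n_chunks + 1, dtype=int)
    partials = [
        df.iloc[start:stop].groupby("key")["value"].sum()
        for start, stop in zip(bounds[:-1], bounds[1:])
    ]
    return pd.concat(partials).groupby(level=0).sum()


def check_matches_pandas(trials: int = 200, seed: int = 0) -> None:
    # Random inputs stand in for Hypothesis strategies; a fixed seed keeps runs reproducible
    rng = np.random.default_rng(seed)
    for _ in range(trials):
        n_rows = int(rng.integers(1, 50))
        df = pd.DataFrame({
            "key": rng.choice(["a", "b", "c"], size=n_rows),
            "value": rng.integers(-100, 100, size=n_rows),
        })
        n_chunks = int(rng.integers(1, n_rows + 1))
        expected = df.groupby("key")["value"].sum()
        pd.testing.assert_series_equal(chunked_sum(df, n_chunks), expected, check_names=False)


check_matches_pandas()
```

The same loop works for a real Modin pipeline: run the Modin and Pandas versions on each generated input and compare the converted results.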

Conclusion

The era of single-threaded data analysis is drawing to a close. With Pandas embracing PyArrow and tools like Modin providing seamless scaling, Python remains the dominant force in data engineering. However, the ecosystem is expanding: competitors like Polars and the emergence of the Mojo language suggest a future where performance is the default, not an afterthought.

Whether you are scraping data with Scrapy and Playwright, automating browser tasks with Selenium, or building complex financial models in Python, the key to scalability lies in choosing the right abstraction. By leveraging Modin for immediate gains and Polars for architectural efficiency, you can handle the data demands of the AI age with confidence. As free-threaded builds mature in future Python versions, these tools may become even more efficient, further cementing Python's role in high-performance computing.