Stop Rewriting Your Pandas Code for Spark. Seriously.

I looked at my terminal yesterday and saw the one error message that has haunted my entire career in data engineering.

MemoryError: Unable to allocate 64.0 GiB for an array with shape...

We’ve all been there. You write a beautiful, clean analysis in Pandas on a sample dataset. It works perfectly. The logic is sound, the vectorization is tight, and the results make sense. Then you try to run it on the full production dataset, and your laptop—or even your beefy EC2 instance—just chokes. Dead.

Historically, this was the moment I’d sigh, crack my knuckles, and start the “Spark Tax” process. You know the drill. Rewrite everything in PySpark. Deal with lazy evaluation weirdness. Debug cryptic JVM stack traces that have no business appearing in a Python script. Spin up a cluster that costs more per hour than my first car.

But something shifted this week. If you haven’t been watching the open source channels closely, you might have missed it, but Bodo just dropped a massive update that effectively makes their compiler technology open source. And honestly? It’s about time.

Why I’m Tired of “Distributed” Frameworks

Look, I respect Dask. I respect Ray. They’re good tools. But they usually require you to learn a new API or at least think about partitions. “Here’s a Dask DataFrame, it acts like a Pandas DataFrame, except when it doesn’t, and also apply is expensive, and good luck with that shuffle.”

The promise of Bodo has always been different: Don’t rewrite anything.

The idea is that you take your standard, imperative NumPy/Pandas code, and their compiler (based on Numba/LLVM) analyzes it, infers the types, and turns it into highly optimized MPI binaries. It’s basically bringing High Performance Computing (HPC) tech—the stuff supercomputers use—to those of us who just want to group by a column without crashing our RAM.
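To make the “MPI under the hood” idea concrete, here is a toy sketch of the pattern a distributed group-by sum typically follows: every worker aggregates its own slice of the rows, then the partial results are merged. This is not Bodo’s implementation and there is no real MPI here. The function names (`split_rows`, `partial_sums`, `merge`) and the sample data are made up for illustration, and the “workers” are simulated in a single process.

```python
from collections import defaultdict


def split_rows(rows, n_workers):
    """Deal rows out round-robin, one chunk per simulated worker."""
    return [rows[i::n_workers] for i in range(n_workers)]


def partial_sums(chunk):
    """Local step: a worker sums revenue per category on its own rows only."""
    totals = defaultdict(float)
    for category, revenue in chunk:
        totals[category] += revenue
    return dict(totals)


def merge(partials):
    """Communication step: combine the per-worker results into one answer."""
    merged = defaultdict(float)
    for part in partials:
        for category, value in part.items():
            merged[category] += value
    return dict(merged)


# Hypothetical sample data: (category, revenue) pairs
rows = [
    ("books", 10.0),
    ("games", 5.0),
    ("books", 2.5),
    ("games", 1.0),
    ("toys", 4.0),
]

chunks = split_rows(rows, n_workers=2)
result = merge(partial_sums(chunk) for chunk in chunks)
print(result)
```

The point of the sketch is that no single worker ever needs the whole dataset in memory. Only the small per-category totals have to cross the wire.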

With the news dropping this week (Jan 2026) that the core engine is opening up, the barrier to entry just vanished. No sales calls. No enterprise licenses just to test if it works. You just pip install and go.

The Speed Claims are… Aggressive

I’m naturally skeptical when I see benchmarks. Everyone’s benchmark is tailored to make their tool look like a Ferrari and the competitor look like a unicycle. But the numbers floating around right now are claiming 20x to 200x speedups over Spark and Dask.

Two hundred times? That sounds fake. But having messed around with it for a few hours last night, I’m starting to see where that number comes from. It’s not magic; it’s compilation.

Spark is great, but overhead is its middle name. Serialization, the JVM bridge, the scheduler latency—it adds up. Bodo compiles your Python directly to machine code. It manages memory manually (well, the compiler does). It uses MPI for communication, which is brutally efficient compared to the HTTP/RPC chatter other frameworks use.

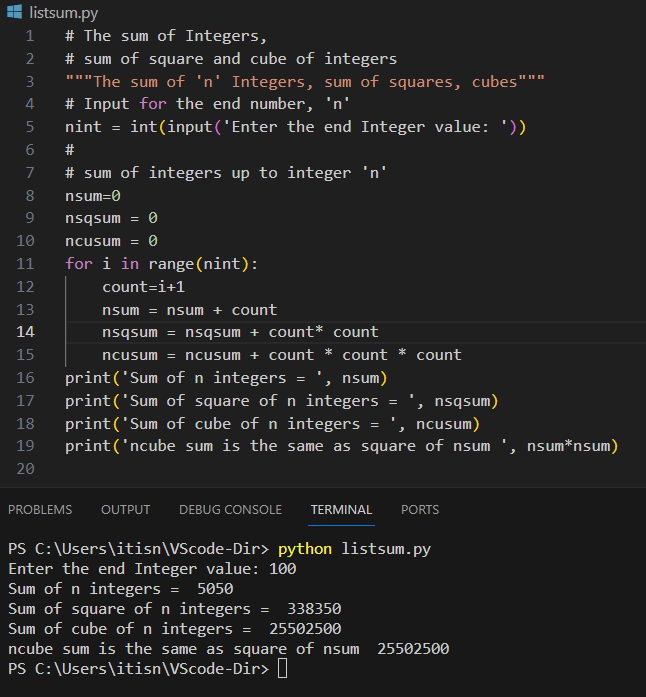

Here is what the workflow actually looks like now. No spark.read... or dd.read_csv.

    import pandas as pd
    import bodo
    import time

    # This decorator is where the heavy lifting happens
    @bodo.jit
    def process_heavy_data(filename):
        # Just read it. No lazy execution.
        df = pd.read_parquet(filename)

        # A heavy aggregation that usually kills memory
        df['date'] = pd.to_datetime(df['timestamp'])
        monthly_stats = df.groupby([df['date'].dt.month, 'category']).agg(
            total_revenue=('revenue', 'sum'),
            avg_latency=('latency', 'mean'),
            unique_users=('user_id', 'nunique')
        )

        # Complex filtering
        high_value = monthly_stats[monthly_stats['total_revenue'] > 10000]
        return high_value

    start = time.time()

    # Bodo automatically parallelizes this across cores (or nodes)
    result = process_heavy_data("s3://my-massive-bucket/data_2025.parquet")

    print(f"Execution time: {time.time() - start} seconds")

That’s it. The code inside the function is just… Python. If you run this without the decorator, it runs as standard Pandas (and probably crashes). With the decorator, Bodo compiles it and distributes the workload across cores automatically.
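Because the function body is plain Pandas, the logic can be sanity-checked on a tiny in-memory frame before anything gets compiled or pointed at S3. The sketch below does that with Pandas only, no Bodo required. The helper name `monthly_high_value`, the `threshold` parameter, and the sample rows are my own inventions for illustration. The column names match the example above.

```python
import pandas as pd


def monthly_high_value(df, threshold=10000):
    """Same aggregation as the example above, but on an in-memory frame."""
    df = df.copy()  # avoid mutating the caller's frame
    df["date"] = pd.to_datetime(df["timestamp"])
    monthly_stats = df.groupby([df["date"].dt.month, "category"]).agg(
        total_revenue=("revenue", "sum"),
        avg_latency=("latency", "mean"),
        unique_users=("user_id", "nunique"),
    )
    return monthly_stats[monthly_stats["total_revenue"] > threshold]


# Hypothetical three-row sample: two January rows in "a", one February row in "b"
sample = pd.DataFrame(
    {
        "timestamp": ["2025-01-05", "2025-01-20", "2025-02-03"],
        "category": ["a", "a", "b"],
        "revenue": [6000.0, 7000.0, 500.0],
        "latency": [10.0, 20.0, 30.0],
        "user_id": [1, 1, 2],
    }
)

high_value = monthly_high_value(sample)
print(high_value)
```

Only the January/“a” group clears the 10,000 threshold here (6,000 + 7,000), so a single row comes back. If the small case behaves, the same body is what you would hand to the decorator.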

It’s Not All Sunshine and Rainbows

I don’t want to sound like a fanboy here. There are rough edges. There always are.

First off, compilation time is real. The first time you run that function, you’re going to sit there for a few seconds (or more) while LLVM does its thing. If you’re iterating quickly on a script, that lag can get annoying. It’s not an interpreter anymore; it’s a build step disguised as a decorator.
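If you want to know how much of your wall-clock time is compilation, time the first call separately from the calls after it. Here is a minimal, library-agnostic sketch. `time_first_and_warm` is a helper I made up, and `fake_jitted` is a stand-in that only pretends to compile (it sleeps once) so the example runs without Bodo installed.

```python
import time


def time_first_and_warm(fn, *args, warm_runs=3):
    """Return (first_call_seconds, best_warm_seconds) for fn(*args)."""
    start = time.perf_counter()
    fn(*args)
    first = time.perf_counter() - start

    warm = []
    for _ in range(warm_runs):
        start = time.perf_counter()
        fn(*args)
        warm.append(time.perf_counter() - start)
    return first, min(warm)


# Stand-in for a JIT-compiled function: slow on the first call, fast afterwards.
_compiled = {}


def fake_jitted(n):
    if "done" not in _compiled:
        time.sleep(0.05)  # pretend this is the one-time compile cost
        _compiled["done"] = True
    return sum(range(n))


first, warm = time_first_and_warm(fake_jitted, 1000)
print(f"first call: {first:.3f}s, best warm call: {warm:.6f}s")
```

Swap `fake_jitted` for your real decorated function and the gap between the two numbers is roughly what the build step costs you per fresh process.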

Second, type stability is mandatory. Python developers (myself included) are used to playing fast and loose with types. “Oh, this column is integers, but I’ll stick a string in there for the missing values.”

Try that with Bodo and the compiler will scream at you. It needs to know exactly what data types are going where so it can optimize the memory layout. You have to write disciplined Python. Honestly? That’s probably a good thing, but it takes adjustment.
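In practice, “disciplined” mostly means giving every column one dtype before the compiler sees it. Here is a small Pandas-only sketch of cleaning up the integers-plus-a-string situation described above. The column name and values are invented, and I’m assuming a nullable integer column is what you want downstream. Check the Bodo docs for the dtypes it actually accepts.

```python
import pandas as pd

# Hypothetical messy column: integers with a string standing in for "missing"
raw = pd.DataFrame({"user_id": [101, 102, "missing", 104]})
print(raw["user_id"].dtype)  # object

clean = raw.copy()
# Coerce anything non-numeric to NaN, then store as a nullable integer column
clean["user_id"] = pd.to_numeric(clean["user_id"], errors="coerce").astype("Int64")
print(clean["user_id"].dtype)  # Int64
```

The mixed column starts life as `object`, which tells a compiler nothing. After the coercion it is a single integer type with one explicit missing value.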

Also, not 100% of the Pandas API is supported yet. It covers the vast majority of what you actually use—read/write, groupbys, joins, filtering, strings—but if you’re using some obscure method from Pandas 0.14 that nobody remembers, it might not compile.

The “Open Source” Factor

This is the part that actually matters to me. Proprietary compilers are cool, but I can’t build a company’s infrastructure on a black box that requires a contract negotiation to scale. By opening this up, Bodo is positioning itself as a legitimate default for data processing.

It reminds me of when Dask first started gaining traction. There was a moment where we realized, “Wait, I don’t need Hadoop?” Now, the realization is, “Wait, I don’t need a cluster manager?”

If I can run a 500GB job on a single massive workstation (or a small cluster) using native MPI speeds, without rewriting my code, why would I touch Spark? Spark was built for a world where big-memory machines were expensive and the practical answer was to spread data across a fleet of commodity nodes. In 2026, I can rent an instance with 2TB of RAM for a few bucks an hour. The hardware changed, and our software stack is finally catching up.

My Takeaway

I’m going to spend the weekend porting a few of my sluggish ETL pipelines to this. The promise of keeping my Pandas syntax while getting bare-metal performance is too good to ignore.

Will it replace everything? No. Spark has a massive ecosystem. But for pure data processing tasks where you just want to crunch numbers fast and get out? This might be the new standard. And frankly, any tool that saves me from debugging Java stack traces is a winner in my book.