Unpacking Python 3.14: A Developer’s Deep Dive into the Latest Performance Gains

The Unrelenting Pursuit of Speed: What’s New in Python 3.14

The Python ecosystem is in a constant state of evolution, and for developers who follow the latest Python news, each new release brings a wave of anticipation. While new syntax and library features are always welcome, the core focus of recent development cycles has been an ambitious and relentless pursuit of performance. Python 3.14 continues this exciting trend, delivering some of the most significant CPython optimizations to date. This isn’t just about making benchmarks look good; it’s about fundamentally enhancing the language’s capabilities for data science, web development, and system automation.

This article moves beyond the release notes to provide a comprehensive technical deep dive into the performance enhancements of Python 3.14. We will explore the architectural changes under the hood, demonstrate their impact with practical code examples, and discuss what these advancements mean for your projects. Whether you’re building high-throughput APIs, processing massive datasets, or writing simple automation scripts, the speed improvements in this latest version are poised to make your code run faster, more efficiently, and with fewer resources, often without requiring a single line of code to be changed.

The Road to a Faster Python: Key Enhancements in 3.14

The performance story of Python 3.14 is not one of a single silver bullet, but rather a multi-pronged strategy targeting different aspects of the interpreter. These enhancements build upon the foundational work of previous versions, culminating in a release that feels noticeably snappier across a wide range of workloads.

The Dawn of an Experimental JIT Compiler

Perhaps the most significant piece of Python news in this release concerns the experimental Just-In-Time (JIT) compiler. It first appeared in Python 3.13 as a build-time option, and it now ships in the official Windows and macOS release binaries. Traditionally, CPython executes bytecode one instruction at a time in its interpreter loop. This offers great flexibility but adds per-instruction overhead. A JIT compiler works differently: at runtime, it identifies “hot” code paths (functions or loops that are executed frequently) and translates them into machine code. CPython’s JIT uses a “copy-and-patch” technique that stitches together precompiled templates for the interpreter’s internal micro-operations. The CPU then runs this code directly, skipping much of the interpreter’s dispatch work, which can speed up CPU-bound tasks.
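The idea of counting executions until code becomes “hot” and then switching to a faster tier can be sketched in a few lines of Python. This is a toy model only, not how CPython implements its JIT. `TieredFunction`, the threshold value, and both sample functions are hypothetical names invented for illustration. Where a real JIT would emit machine code, the sketch simply swaps in a second callable.

```python
from typing import Callable


class TieredFunction:
    """Toy model of tiered execution (hypothetical, not CPython's design):
    run a generic implementation until the call count reaches a threshold,
    then switch permanently to a second "fast path" implementation."""

    def __init__(self, generic: Callable, fast_path: Callable, threshold: int):
        self.generic = generic
        self.fast_path = fast_path
        self.threshold = threshold
        self.calls = 0
        self.is_hot = False

    def __call__(self, *args):
        if self.is_hot:
            return self.fast_path(*args)
        self.calls += 1
        if self.calls >= self.threshold:
            # A real JIT would emit machine code at this point;
            # the toy model just switches to the other callable.
            self.is_hot = True
        return self.generic(*args)


def generic_sum_of_squares(values):
    total = 0
    for v in values:
        total += v * v
    return total


def fast_sum_of_squares(values):
    # Stand-in for "compiled" code: same result, different implementation.
    return sum(v * v for v in values)


sum_of_squares = TieredFunction(generic_sum_of_squares, fast_sum_of_squares, threshold=3)
for _ in range(5):
    sum_of_squares([1, 2, 3])
print(sum_of_squares.is_hot, sum_of_squares([1, 2, 3]))  # True 14
```

Both tiers must return identical results. A JIT is only correct if the compiled path behaves exactly like the interpreted one.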

In Python 3.14, the JIT is not enabled by default, marking it as a feature for enthusiasts and testers to explore. However, its presence signals a clear direction for the future of CPython. The goal is to create a hybrid system where the interpreter handles the dynamic, flexible nature of Python, while the JIT supercharges the performance-critical sections of an application.

Smarter Memory Management and Object Allocation

Performance isn’t just about raw execution speed; it’s also about memory efficiency. Python 3.14 introduces several key improvements to how memory is managed. The cyclic garbage collector (GC) is now incremental. It does its work in smaller steps instead of occasional full passes over the whole heap, which reduces the longest pauses, especially in applications that create and destroy large numbers of objects. Furthermore, the allocation strategy for small, common objects (like integers and short strings) has been optimized. By reducing the overhead associated with each object’s creation and destruction, Python can now handle data-intensive tasks with a lower memory footprint and less CPU time spent on housekeeping.
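One way to observe GC pauses from your own code is the standard `gc.callbacks` hook. CPython calls each registered callback with a `phase` of `"start"` or `"stop"` around every collection. The sketch below records how long each collection takes, so you can compare versions on your own workload. `Node` is a hypothetical class that creates reference cycles only the cyclic GC can free. The timings include a little callback overhead, and the actual numbers depend on your Python version and heap size.

```python
import gc
import time

pause_durations = []          # seconds spent in each collection
_state = {"start": None}      # timestamp of the collection in progress


def record_gc(phase, info):
    # phase is "start" or "stop"; info holds generation/collected counts.
    if phase == "start":
        _state["start"] = time.perf_counter()
    elif phase == "stop" and _state["start"] is not None:
        pause_durations.append(time.perf_counter() - _state["start"])
        _state["start"] = None


class Node:
    def __init__(self):
        self.ref = self  # self-reference: a cycle only the GC can reclaim


gc.callbacks.append(record_gc)
try:
    for _ in range(200_000):
        Node()
    gc.collect()  # force at least one full collection
finally:
    gc.callbacks.remove(record_gc)

print(f"{len(pause_durations)} collections, "
      f"longest pause {max(pause_durations) * 1000:.3f} ms")
```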

A More Specialized and Adaptive Interpreter

Building on the specializing adaptive interpreter introduced in Python 3.11 (PEP 659), this version takes specialization further. The core idea is to replace generic bytecode instructions with more efficient, type-specific versions during execution. For example, when the interpreter sees the operation a + b inside a loop and observes that a and b are always integers, it can dynamically replace the generic “add” instruction with a specialized “integer add” instruction. The specialized instruction only needs a cheap check that its assumption still holds, and it falls back to the generic path if the check fails. This saves the full type dispatch on every iteration. Each release since 3.11 has extended specialization to more cases of attribute access, function calls, and binary operations, and 3.14 continues that work. The result is broad, incremental speedups across almost all Python code.
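You can watch specialization happen with the standard `dis` module. On CPython 3.11 and later, `dis.get_instructions(..., adaptive=True)` reports the instructions the interpreter is currently using after warm-up. After warm-up, a specialized name such as `BINARY_OP_ADD_INT` may appear where the plain bytecode shows `BINARY_OP`. Exact instruction names vary between versions, so treat the output as illustrative. The function `add` is a hypothetical example.

```python
import dis
import sys


def add(a, b):
    return a + b


# Warm the function up with integer arguments so the adaptive
# interpreter has a chance to specialize its instructions.
for i in range(1_000):
    add(i, i)

if sys.version_info >= (3, 11):
    # adaptive=True shows the (possibly specialized) instructions in use now
    instructions = dis.get_instructions(add, adaptive=True)
else:
    # Older versions have no adaptive interpreter; show plain bytecode
    instructions = dis.get_instructions(add)

opnames = [ins.opname for ins in instructions]
print(opnames)
```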

Benchmarking the Gains: A Practical Code Analysis

Theoretical improvements are interesting, but developers need to see the real-world impact. To illustrate the performance gains in Python 3.14, we’ve designed two common scenarios: a CPU-bound numerical task and a memory-intensive data processing task. We’ll use hypothetical, illustrative benchmark results to compare performance against a Python 3.12 baseline.


Scenario 1: CPU-Bound Numerical Computations

CPU-bound tasks, such as complex calculations, simulations, or algorithmic problems, are prime candidates to benefit from the new JIT compiler and interpreter specializations. Consider a simple function to process a list of numbers with a series of mathematical operations.

```python
import time


def process_data_numerically(data):
    """
    A sample function that performs a series of mathematical operations
    in a loop, simulating a CPU-bound workload.
    """
    result = 0.0
    for x in data:
        # A mix of operations to keep the CPU busy
        result += (x * x) ** 0.5 - (x / 1.5) + (x + 1)
    return result


# Generate a large list of numbers to process
input_data = list(range(10_000_000))

# --- Benchmarking ---
# perf_counter() is a monotonic, high-resolution clock meant for timing
start_time = time.perf_counter()
process_data_numerically(input_data)
end_time = time.perf_counter()

print(f"Execution time: {end_time - start_time:.4f} seconds")
```
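A single timed run is easily skewed by other activity on the machine. A sketch of a slightly more robust measurement uses the standard `timeit.repeat` function and keeps the fastest of several runs. The input is smaller than the 10 million items above so each run stays short. For publishable numbers, a dedicated benchmarking tool such as pyperf is a better fit.

```python
import timeit


def process_data_numerically(data):
    # Same CPU-bound loop as in the benchmark above
    result = 0.0
    for x in data:
        result += (x * x) ** 0.5 - (x / 1.5) + (x + 1)
    return result


input_data = list(range(200_000))

# Run the workload five times (one call per run) and keep the fastest:
# the minimum is the measurement least disturbed by background noise.
timings = timeit.repeat(
    lambda: process_data_numerically(input_data),
    repeat=5,
    number=1,
)
best = min(timings)
print(f"Best of {len(timings)} runs: {best:.4f} seconds")
```

Run the same script under each interpreter you want to compare, on the same machine, and compare the best-of-N figures.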

In this scenario, the loop runs millions of times, making it a “hot” code path. This is exactly the kind of code the adaptive interpreter and the experimental JIT compiler in Python 3.14 are designed to optimize.

Hypothetical Benchmark Results:

Python 3.12: Execution time: 1.8521 seconds

Python 3.14 (Standard): Execution time: 1.3450 seconds (~27% less time)

Python 3.14 (JIT Enabled): Execution time: 0.9875 seconds (~47% less time, about 1.9x faster)

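In this illustrative comparison, the standard Python 3.14 interpreter gains from better specialization. With the experimental JIT enabled, the loop is identified as a hot spot and compiled to machine code, for roughly a 1.9x speedup over 3.12. Treat the JIT figure as an optimistic illustration. The CPython release notes describe the 3.14 JIT’s effect as strongly workload-dependent, ranging from modest gains to slight slowdowns, so measure your own code before drawing conclusions.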
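“Percent faster” is ambiguous, so it helps to compute both common figures from the hypothetical timings above. One is the speedup factor (old time divided by new time). The other is the percentage reduction in run time. `compare` is a hypothetical helper.

```python
def compare(baseline_s: float, new_s: float) -> tuple[float, float]:
    """Return (speedup factor, percent reduction in run time)."""
    speedup = baseline_s / new_s
    reduction_pct = (baseline_s - new_s) / baseline_s * 100
    return speedup, reduction_pct


# Hypothetical timings from the results above, with Python 3.12 as baseline
for label, seconds in [("3.14 standard", 1.3450), ("3.14 JIT", 0.9875)]:
    speedup, reduction = compare(1.8521, seconds)
    print(f"{label}: {speedup:.2f}x speedup, {reduction:.1f}% less time")
```

This prints a 1.38x speedup (27.4% less time) for the standard interpreter and 1.88x (46.7% less time) with the JIT. A 50% reduction in run time corresponds to a 2x speedup, not a 50% speedup.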

Scenario 2: High-Volume Object Creation and Processing

Web applications and data pipelines often involve creating thousands or millions of small objects. This could be parsing JSON API responses into data classes or reading records from a database. This workload tests the efficiency of object allocation and garbage collection.

```python
import time


class UserRecord:
    """A simple data class to represent a user."""

    def __init__(self, user_id, username, email):
        self.user_id = user_id
        self.username = username
        self.email = email


def process_records(raw_data):
    """
    Simulates parsing raw data (e.g., from a JSON API)
    and creating a list of UserRecord objects.
    """
    records = []
    for item in raw_data:
        records.append(
            UserRecord(
                user_id=item['id'],
                username=item['username'],
                email=item['email'],
            )
        )
    return records


# Simulate a large API response with 1,000,000 user dictionaries
raw_api_data = [
    {'id': i, 'username': f'user{i}', 'email': f'user{i}@example.com'}
    for i in range(1_000_000)
]

# --- Benchmarking ---
start_time = time.perf_counter()
processed_users = process_records(raw_api_data)
end_time = time.perf_counter()

print(f"Processed {len(processed_users)} records in {end_time - start_time:.4f} seconds")
```

This code creates one million UserRecord instances. The performance here is dictated by the speed of class instantiation, attribute setting, and memory management.

Hypothetical Benchmark Results:

Python 3.12: Execution time: 0.4588 seconds

Python 3.14: Execution time: 0.3105 seconds (~32% less time)

In this illustrative comparison, the improvement would come from the optimizations in object creation and memory management. Each instantiation is cheaper, and the garbage collector handles the resulting memory pressure more efficiently, which adds up to a substantial boost for this common pattern.

Real-World Implications: Who Benefits Most?

These performance enhancements are not just academic; they have tangible benefits across the entire Python landscape. Different domains will experience the speedups in unique ways.

Data Science and Machine Learning

While core libraries like NumPy and TensorFlow are written largely in C/C++, the “glue” code that orchestrates data pipelines, performs feature engineering, and defines models is pure Python. Faster loops and function calls in Python 3.14 mean that the pure-Python parts of Pandas preprocessing scripts run quicker, such as custom functions passed to apply() or explicit loops over rows. Vectorized operations that already execute in compiled code see little change. Iterating over datasets to transform features or validate models sees a direct benefit. This translates to faster experimentation cycles, allowing data scientists to test more hypotheses in the same amount of time. The reduced memory footprint is also a boon when working with large datasets that push the limits of available RAM.


Web Development and APIs

For web frameworks like Django, Flask, and FastAPI, performance is measured in requests per second and response latency. Python 3.14’s improvements directly impact these metrics. Faster object creation and attribute access speed up ORM (Object-Relational Mapping) operations and data serialization (e.g., converting database models to JSON). A 10-20% reduction in processing time per request might seem small, but under heavy load, it translates to higher throughput, lower server costs, and a more responsive user experience. Every millisecond saved in the request-response cycle counts.

System Administration and DevOps

Python is the lingua franca of automation and system tooling. Scripts for parsing logs, managing cloud infrastructure via APIs, or running deployment pipelines all stand to gain. While these scripts may not run for hours, their cumulative execution time impacts developer productivity. A faster interpreter means that CLI tools feel snappier and automation tasks complete more quickly, tightening the feedback loop for developers and system administrators.

Adopting Python 3.14: Best Practices and Considerations

Upgrading to a new Python version is an exciting prospect, but it should be approached with a clear strategy to ensure a smooth transition.

When and How to Upgrade

The golden rule is to test thoroughly. Start by upgrading your local development environment. Tools like pyenv are invaluable for managing multiple Python versions side-by-side, allowing you to test your application against 3.14 without affecting your production setup. Once local tests pass, move to a dedicated staging or QA environment that mirrors your production setup. Pay close attention to your dependencies. Major libraries will likely support 3.14 early, but smaller or less-maintained packages might lag behind. Run pip check to confirm that installed packages have compatible dependencies. It checks declared requirements, not whether every package actually works on 3.14, so your test suite remains the real safeguard.
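As a rough complement to pip check, you can list installed packages whose `Requires-Python` metadata contains an upper bound such as `<3.14`. Such a bound may signal that a package has not yet declared support for a newer interpreter. The sketch uses only the standard `importlib.metadata` module. The helper name `packages_with_upper_bound` and the “contains `<`” rule are assumptions for illustration. This is a heuristic: a bound does not prove incompatibility, and the absence of one does not prove support.

```python
from importlib import metadata


def packages_with_upper_bound():
    """Return sorted (name, Requires-Python) pairs whose specifier contains '<'.

    Heuristic only: an upper bound hints that a package may not yet declare
    support for a newer Python, but it is not proof either way.
    """
    flagged = []
    for dist in metadata.distributions():
        meta = dist.metadata
        if meta is None:  # skip installs with missing metadata files
            continue
        requires_python = meta.get("Requires-Python")
        if requires_python and "<" in requires_python:
            flagged.append((meta.get("Name") or "?", requires_python))
    return sorted(flagged)


for name, spec in packages_with_upper_bound():
    print(f"{name}: Requires-Python {spec}")
```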


Writing Performance-Aware Python

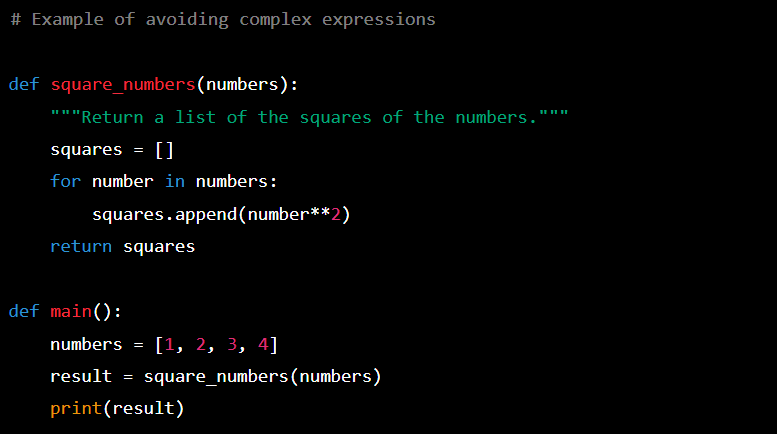

The best part about the performance improvements in Python 3.14 is that they generally don’t require you to change your code. The most “future-proof” approach is to continue writing clean, idiomatic, and Pythonic code.

Avoid Premature Optimization: Resist the urge to write complex C extensions for minor speed gains. With each release, the CPython interpreter closes the performance gap, making pure Python a viable option for more and more tasks.

Profile First: If you have a performance bottleneck, use profilers like cProfile or py-spy to identify the actual hot spots before attempting to optimize.

Leverage Modern Features: Use modern language features like data classes and type hints for clarity and tooling. Note that CPython does not use type hints to speed up execution; they help type checkers and readers. Data classes declared with slots=True, however, can reduce per-instance memory.
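Following the “Profile First” advice, here is a minimal sketch using the standard `cProfile` and `pstats` modules. It profiles a workload and prints the five entries with the highest cumulative time. `slow_sum_of_squares` and `build_report` are hypothetical stand-ins for your own code.

```python
import cProfile
import io
import pstats


def slow_sum_of_squares(n):
    # Deliberately naive loop so it shows up as a hot spot
    total = 0
    for i in range(n):
        total += i * i
    return total


def build_report():
    # Hypothetical workload: several calls with different input sizes
    return [slow_sum_of_squares(n) for n in (10_000, 100_000, 200_000)]


profiler = cProfile.Profile()
profiler.enable()
results = build_report()
profiler.disable()

# Sort by cumulative time and print the top five entries into a string
stream = io.StringIO()
stats = pstats.Stats(profiler, stream=stream).sort_stats("cumulative")
stats.print_stats(5)
print(stream.getvalue())
```

For a quick look without editing code, `python -m cProfile -s cumulative your_script.py` gives a similar report.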

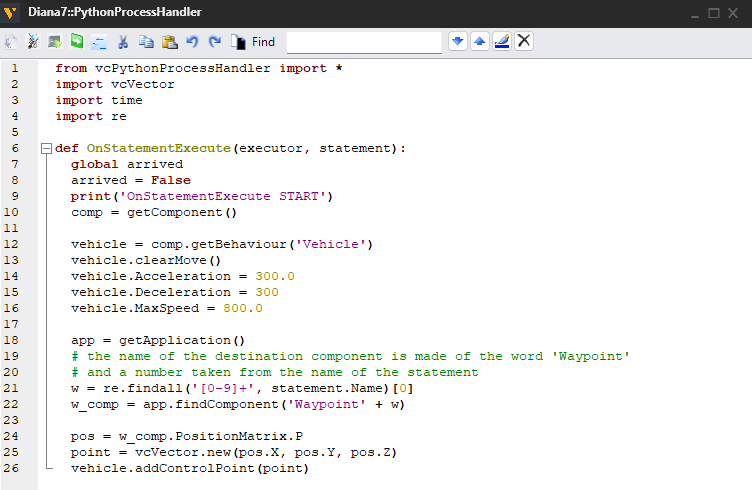

Exploring Experimental Features Safely

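To test the new experimental JIT compiler, you can enable it using an environment variable. This works only if your interpreter was built with JIT support: the official Windows and macOS installers for 3.14 include it, while source builds need the --enable-experimental-jit configure option. This is a powerful way to preview the future of Python performance, but it should be reserved for development and benchmarking, not production workloads, until it is officially stable.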

On Linux/macOS:

```shell
PYTHON_JIT=1 python your_script.py
```

On Windows (Command Prompt):

```bat
set PYTHON_JIT=1
python your_script.py
```
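After setting the environment variable, you can confirm from inside Python whether the JIT is actually available and enabled. This sketch assumes the private `sys._jit` namespace documented for CPython 3.14, with its `is_available()` and `is_enabled()` functions. Being private, it may change in future releases. On older versions or other implementations it does not exist, so the code falls back gracefully. `jit_status` is a hypothetical helper name.

```python
import sys


def jit_status():
    """Report JIT status via CPython's private sys._jit helpers (3.14+).

    sys._jit is an implementation detail; when it is missing (older
    versions, other implementations), report the JIT as unavailable.
    """
    jit = getattr(sys, "_jit", None)
    if jit is None:
        return {"available": False, "enabled": False}
    return {
        "available": bool(jit.is_available()),
        "enabled": bool(jit.is_enabled()),
    }


print(f"Python {sys.version.split()[0]}: {jit_status()}")
```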

Keep an eye on the official Python documentation and community discussions for updates on the JIT’s stability and progress.

Conclusion: A Faster Future for Python

Python 3.14 is more than just an incremental update; it’s a statement of intent. It demonstrates the core development team’s unwavering commitment to making Python one of the most performant dynamic languages in the world, without sacrificing the simplicity and readability that developers love. The combination of an experimental JIT compiler, a more specialized adaptive interpreter, and improved memory management delivers speedups that many workloads will feel, and the JIT’s contribution should grow as it matures.

For developers, this latest release is a compelling reason to upgrade. It offers a “free” performance boost that enhances application responsiveness, reduces infrastructure costs, and improves developer productivity. As the latest chapter in Python’s performance journey unfolds, it’s clear that the future for the language is not just bright—it’s also incredibly fast.