Keras in Late 2025: Why Transfer Learning Is Finally Boring

I spent yesterday afternoon trying to squeeze a Vision Transformer (ViT) onto a consumer-grade GPU. A few years ago, this would have been a three-coffee problem involving obscure CUDA errors, shape mismatches, and probably a rage-quit or two. But yesterday? It took about twenty minutes.

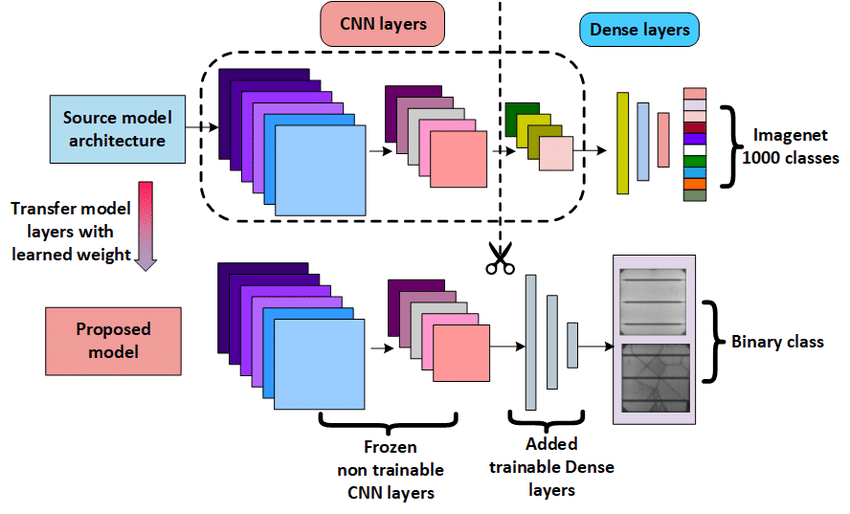

Transfer learning used to be the “hello world” of deep learning tutorials, but in production, it was often a mess of glue code and framework lock-in. If you found a great pre-trained model in PyTorch but your deployment pipeline was TensorFlow-based, you were in for a bad time.

Fast forward to the end of 2025, and Keras has quietly fixed the plumbing. The updates over the last year—specifically the maturity of Keras 3 and its ecosystem—have turned what used to be a headache into something almost boring. And in engineering, boring is exactly what we want.

The Backend Wars Are Over (For Us, Anyway)

The biggest shift I’ve felt this year isn’t a specific new layer or optimizer—it’s the backend agnosticism actually working as advertised. When Keras 3 launched, it promised we could run the same code on JAX, TensorFlow, or PyTorch. I was skeptical. I expected edge cases everywhere.

But after using the latest releases for a few months, I’m eating my words. I can now grab a pre-trained model, write my training loop in Keras, and swap the backend based on what hardware I have available.
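
If you want the swap to follow whatever is installed on the machine, one option is a tiny helper that runs before Keras is imported. This is a sketch, not something Keras ships: `pick_backend`, `configure_backend`, and the preference order are hypothetical names of my own. It only checks that the framework package is importable, not that it can actually see a GPU or TPU.

```python
import importlib.util
import os

# Backend name as Keras expects it in KERAS_BACKEND -> package that must be importable.
BACKEND_PACKAGES = {"jax": "jax", "torch": "torch", "tensorflow": "tensorflow"}


def _is_installed(package):
    """Return True if the package can be located without importing it."""
    return importlib.util.find_spec(package) is not None


def pick_backend(preferred=("jax", "torch", "tensorflow"), is_installed=_is_installed):
    """Return the first preferred backend whose framework is installed."""
    for name in preferred:
        if name not in BACKEND_PACKAGES:
            raise ValueError(f"Unknown backend: {name!r}")
        if is_installed(BACKEND_PACKAGES[name]):
            return name
    raise RuntimeError("None of the preferred backends are installed.")


def configure_backend(preferred=("jax", "torch", "tensorflow"), is_installed=_is_installed):
    """Set KERAS_BACKEND. Call this before the first `import keras`."""
    backend = pick_backend(preferred, is_installed)
    os.environ["KERAS_BACKEND"] = backend
    return backend
```

Calling `configure_backend()` at the very top of a training script picks JAX when it is installed and falls back to the next entry otherwise. The `is_installed` parameter exists only so the logic can be exercised without the frameworks present.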

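Here is the kicker: I recently needed to fine-tune a model using JAX (because XLA compilation is still king for speed on TPUs) but deploy it using TensorFlow Lite (now LiteRT). In the past, this was a nightmare. Now? The training side is just a config change, and the saved .keras file is backend-neutral, so it loads under whichever backend the export step needs.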

```python
import os

# This is the magic switch. It has to be set before Keras is imported.
# In 2025, switching this actually works without breaking your data pipeline.
os.environ["KERAS_BACKEND"] = "jax"

import keras

# Load a pre-trained model (EfficientNet, ViT, etc.)
# Note: No 'tensorflow.keras' imports. Just 'keras'.
# keras.applications ships with core Keras, so no extra package is needed.
base_model = keras.applications.EfficientNetV2B0(
    include_top=False,
    weights="imagenet",
    include_preprocessing=True,  # rescaling happens inside the model
)

# Freeze the base
base_model.trainable = False

# Functional API is still the best way to stitch things together
inputs = keras.Input(shape=(224, 224, 3))
x = base_model(inputs, training=False)
x = keras.layers.GlobalAveragePooling2D()(x)
outputs = keras.layers.Dense(10, activation="softmax")(x)
model = keras.Model(inputs, outputs)

# This compiles to XLA if you're on JAX, or standard graphs on TF
model.compile(
    optimizer="adam",
    loss="sparse_categorical_crossentropy",
    metrics=["accuracy"],
)
```

Native PEFT: The 2025 Quality of Life Update

If you were doing transfer learning in 2023 or 2024, you probably just froze the backbone and trained the head. That works, but it’s crude. We all knew Parameter-Efficient Fine-Tuning (PEFT) methods like LoRA (Low-Rank Adaptation) were better, but implementing them meant wrestling with third-party libraries or writing custom wrapper classes.

The most underrated update in the recent Keras releases is how native LoRA has become. It’s no longer a hack; it’s a first-class citizen in the API.

I’ve mostly stopped doing head-only fine-tuning for image classification. Instead, I inject low-rank adapters: the original kernels stay frozen, but every adapted layer gets a small trainable update. The memory footprint stays tiny (I can fine-tune massive models on my laptop) without the accuracy penalty of training only the head.

Here is how I’m handling it these days:

```python
# The 'modern' way to fine-tune in late 2025
# Instead of model.trainable = False, we enable LoRA on specific layers

# Assume 'base_model' is loaded as before
# We can target dense layers or attention projections automatically
# Simplified: enable_lora() lives on KerasHub backbones and on individual
# layers such as Dense and EinsumDense; on other models, call it per layer.
base_model.enable_lora(rank=4)

# Verify trainable weights - it should be a tiny fraction of the total
base_model.summary()

# Now when you fit, you're only updating the adapter weights.
# It's faster, uses less VRAM, and often converges to a better loss
# than head-only training.
model.fit(train_dataset, epochs=5)
```

This snippet simplifies things, but the logic holds. The API abstraction hides the nasty matrix algebra we used to write manually. It just works.
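
For reference, the matrix algebra being hidden is small. Here is a NumPy sketch of a LoRA-adapted dense layer, assuming the common formulation where a frozen kernel gets a low-rank update `A @ B`. The `alpha / rank` scaling and the names (`lora_forward`, `w_frozen`) are illustrative choices on my part, not Keras internals.

```python
import numpy as np


def lora_forward(x, w_frozen, a, b, alpha=None):
    """Dense forward pass with a low-rank update: x @ (W + scale * A @ B)."""
    rank = a.shape[1]
    # Assumption: alpha defaults to rank, so the scale is 1.0 unless overridden.
    scale = (alpha if alpha is not None else rank) / rank
    # Multiply through the narrow rank dimension first; it is cheaper than
    # materializing the full (d_in, d_out) update matrix.
    return x @ w_frozen + scale * (x @ a) @ b


rng = np.random.default_rng(0)
d_in, d_out, rank = 768, 768, 4

w_frozen = rng.normal(size=(d_in, d_out))    # pre-trained kernel, never updated
a = rng.normal(size=(d_in, rank)) * 0.01     # trainable down-projection
b = np.zeros((rank, d_out))                  # trainable up-projection, zero init

x = rng.normal(size=(2, d_in))
y = lora_forward(x, w_frozen, a, b)

full_params = w_frozen.size                  # 768 * 768 = 589824
lora_params = a.size + b.size                # 768 * 4 + 4 * 768 = 6144
trainable_fraction = lora_params / full_params
```

Because `b` starts at zero, the adapted layer initially behaves exactly like the pre-trained one, and only about 1% of the parameters in this example need gradients or optimizer state.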

Quantization Aware Training (QAT) Finally Clicked

Deployment is where my enthusiasm usually dies. You train a beautiful model, get 98% accuracy, and then the quantization process destroys it. You end up with a 40MB file that thinks every picture of a cat is a toaster.

The keras.quantizers namespace has seen some serious love recently. Post-training quantization is fine, but Quantization Aware Training (QAT) is necessary if you care about precision on edge devices.

I tried the new QAT workflow last week on a project for a client who needed a flower classifier running on a low-power embedded board. The API now allows you to wrap the model in a quantization scheme before that final fine-tuning epoch. It simulates the precision loss during training so the weights adjust accordingly.

The result? I kept 99% of the float32 accuracy in int8 format. No magic, just better tooling.
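
For intuition, the "simulate the precision loss" step is commonly implemented as fake quantization: weights are rounded onto the int8 grid and immediately converted back to float during the forward pass. The NumPy sketch below shows only that round trip, with a symmetric abs-max scale. It is not the Keras API, and `fake_quantize_int8` is a hypothetical helper. A real QAT setup also needs a straight-through gradient so the rounding does not block training.

```python
import numpy as np


def fake_quantize_int8(w):
    """Round weights to a symmetric int8 grid, then dequantize back to float."""
    max_abs = np.max(np.abs(w))
    # One scale for the whole tensor; guard against an all-zero tensor.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = np.clip(np.round(w / scale), -127, 127)  # integer levels stored as floats
    return q * scale, scale


rng = np.random.default_rng(0)
w = rng.normal(scale=0.05, size=(64, 10)).astype(np.float32)

w_fq, scale = fake_quantize_int8(w)

# The forward pass would use w_fq instead of w, so the loss "sees" int8 precision.
max_error = float(np.max(np.abs(w - w_fq)))
```

The rounding error per weight is bounded by half a quantization step, which is why a few epochs of training against `w_fq` are usually enough for the weights to settle onto values that survive the real int8 conversion.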

It’s Not All Sunshine

I don’t want to sound like a fanboy here. There are still rough edges.

Debugging errors when using the JAX backend can still be cryptic. You get a stack trace that looks like it went through a blender. And while the ecosystem is unifying, finding tutorials that use the new Keras 3 syntax specifically for 2025 features is hit-or-miss. Google search results are still clogged with code from 2019 using tf.keras and outdated methods.

Also, the keras.export functionality, while promising, sometimes fights with custom layers if you aren’t careful about defining your get_config methods properly. I lost an hour yesterday because I forgot to serialize a custom dropout parameter.

![Transfer learning diagram - What Is Transfer Learning? [Examples & Newbie-Friendly Guide]](https://python-news.com/wp-content/uploads/2025/12/inline_d34ce1e7.webp)

Why This Matters Now

We are past the hype cycle of “AI can do anything” and deep into the “how do we actually ship this cheaply?” phase. The updates to Keras in 2025 aren’t about flashy new architectures; they are about efficiency and portability.

If you haven’t looked at your transfer learning pipeline in a year, you’re probably writing too much code. The ability to swap backends means you aren’t locked into NVIDIA hardware anymore—you can develop on your MacBook (Metal/MPS support is solid now) and deploy to a TPU pod or a generic server CPU without rewriting your model definition.

My advice? Go delete your old helper scripts. The framework handles it now.