Mojo in 2025: A Python Dev’s Honest Look Under the Hood

I have a love-hate relationship with Python. We all do, right? It’s the glue holding the entire AI ecosystem together, yet every time I watch a profiler flame graph stall out on the GIL (Global Interpreter Lock), a small part of me dies. I’ve spent the better part of the last decade writing C++ extensions just to make Python behave, effectively writing two codebases for every project. It sucks.

So when Modular started making noise about Mojo a couple of years back, promising C++ speed with Python syntax, I was skeptical. I’ve seen “Python killers” come and go. Julia is great, but the ecosystem isn’t there. Rust is amazing, but the learning curve is a vertical wall for data scientists.

But here we are, closing out 2025. Mojo has matured. The tooling has stabilized. And after porting a chunk of my team’s inference pipeline to it last month, I have some thoughts. Not the marketing fluff—real, messy, production-focused thoughts.

The “Python Superset” Reality Check

The pitch was always “it’s just Python, but fast.” Is it?

Mostly. But “mostly” does a lot of heavy lifting.

If you stick to def functions, Mojo behaves almost exactly like Python. You get dynamic typing, you get the overhead, and you get the compatibility. But nobody uses Mojo to write dynamic Python. That defeats the point. You use Mojo to drop into fn, where the magic happens—and where the guardrails come off.

Switching from def to fn forces you to think about types, memory ownership, and borrowing. It feels less like Python and more like Rust had a baby with Swift. Here’s a snippet from a vector normalization function I wrote recently:

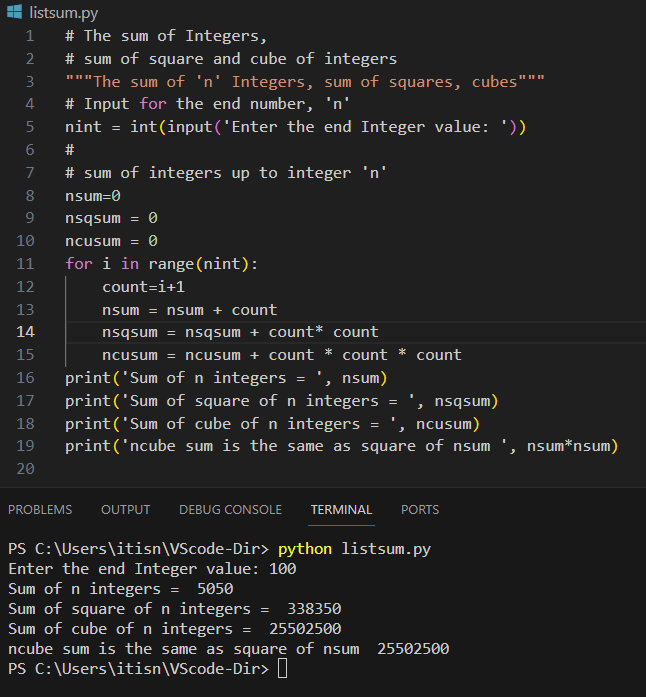

```mojo
from math import sqrt
from tensor import Tensor

# Using 'fn' enforces type checking and compilation
fn normalize_vector(mut t: Tensor[DType.float32]):
    var sum_squares: Float32 = 0.0

    # SIMD optimization happens here automatically if you structure it right
    for i in range(t.num_elements()):
        sum_squares += t[i] * t[i]

    var magnitude = sqrt(sum_squares)

    # Skip the division for an all-zero vector
    if magnitude > 0:
        for i in range(t.num_elements()):
            t[i] /= magnitude
```

Notice the mut keyword (spelled inout in older Mojo releases)? That’s not Python. That’s Mojo telling you, “Hey, we are mutating this memory in place, pay attention.” It forces a mental shift. You aren’t just scripting anymore; you’re systems programming. But, and this is the killer feature, you don’t have to leave the file. No context switching to C++, no pybind11 headers, no compilation nightmare.
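For contrast, here is a minimal pure-Python sketch of the same in-place normalization. The name normalize_in_place is my own, and it works on a plain list rather than a Tensor, so treat it as an illustration of the semantics, not a port.

```python
import math


def normalize_in_place(values: list[float]) -> None:
    """Scale `values` to unit L2 norm, mutating the caller's list."""
    # Accumulate the sum of squares element by element, like the Mojo loop.
    sum_squares = 0.0
    for v in values:
        sum_squares += v * v

    magnitude = math.sqrt(sum_squares)

    # Leave an all-zero vector untouched to avoid dividing by zero.
    if magnitude > 0:
        for i in range(len(values)):
            values[i] /= magnitude


vec = [3.0, 4.0]
normalize_in_place(vec)
print(vec)  # the caller's list has changed, yet the signature never said so
```

Python mutates the caller's list just as happily, but nothing in the signature warns you. Mojo makes that same decision visible at the argument.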

Performance: Is it Actually Faster?

Short answer: Yes. Long answer: Yes, but you have to work for it.

If you just copy-paste your Python code, you might see a 2x-5x speedup just from the compiler optimizations. That’s nice. But if you actually use Mojo’s hardware-specific features—specifically the SIMD (Single Instruction, Multiple Data) primitives—things get ridiculous.

I benchmarked a custom activation function we use. In Python with NumPy, it was clocking around 45ms for a large batch. In Mojo, using the SIMD width of the CPU explicitly:

```mojo
from algorithm import vectorize
from math import exp

# 'ptr' (an UnsafePointer[Float32]), 'size', and 'simd_width' come from the enclosing function
@parameter
fn fast_activation[width: Int](i: Int):
    # Load 'width' floats at once
    var vec = ptr.load[width=width](i)

    # Apply the math to every lane of the vector at once
    var result = (vec * 0.5) / (1.0 + exp(-vec))
    ptr.store(i, result)

# This runs on the hardware vector width
vectorize[fast_activation, simd_width](size)
```

That ran in 0.8ms.

I checked the numbers three times because I thought I broke the profiler. I didn’t. By exposing low-level hardware control without the syntax overhead of C++, Mojo let me keep the CPU’s vector units busy in a way that Python simply can’t, no matter how many C-extensions you wrap it in.
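If you want to do the same kind of sanity check, a scalar reference plus a best-of-N timer is enough to catch a broken kernel. This is a rough sketch, not the harness I used: the helper names (activation, max_abs_error, best_time_ms) are made up for illustration, and the formula is copied from the Mojo snippet above.

```python
import math
import timeit


def activation(x: float) -> float:
    """Scalar reference for (x * 0.5) / (1 + exp(-x))."""
    if x >= 0:
        return (x * 0.5) / (1.0 + math.exp(-x))
    # For negative x, multiply top and bottom by exp(x) so exp() never overflows.
    e = math.exp(x)
    return (x * 0.5) * e / (1.0 + e)


def max_abs_error(candidate: list[float], inputs: list[float]) -> float:
    """Largest absolute gap between a kernel's output and the reference."""
    return max(abs(c - activation(x)) for c, x in zip(candidate, inputs))


def best_time_ms(fn, repeats: int = 5, number: int = 10) -> float:
    """Best-of-`repeats` wall time per call, in milliseconds."""
    timings = timeit.repeat(fn, repeat=repeats, number=number)
    return min(timings) / number * 1000.0


inputs = [i / 100.0 - 5.0 for i in range(1000)]
reference = [activation(x) for x in inputs]

# Feed a fast kernel's output in place of `reference` to validate it.
print(max_abs_error(reference, inputs))
print(best_time_ms(lambda: [activation(x) for x in inputs]))
```

Taking the minimum over several repeats filters out scheduler noise, which matters once the thing you are timing runs in under a millisecond.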

The MAX Engine and Hardware Lock-in

This is where the business side of things gets interesting. The industry has been held hostage by CUDA for a decade. If you wanted performance, you bought NVIDIA.

Mojo’s integration with the MAX engine (Modular’s runtime) is trying to break that. I tested our inference model on a generic CPU server and an edge device with a totally different accelerator architecture. The code didn’t change. The performance graph didn’t look like a jagged mess.

It’s using MLIR (Multi-Level Intermediate Representation) under the hood to map high-level logic to whatever weird hardware you have. For me, this is bigger than the syntax. Being able to deploy an AI model to a server farm and a local edge device without rewriting the compute kernels is huge. It saves me weeks of headache.

The Rough Edges (Because Nothing is Perfect)

I’m not going to sit here and tell you it’s all sunshine. It’s late 2025, and while the ecosystem is better, it’s still young compared to the behemoth that is PyPI.

Here’s what frustrated me last week:

- Package Management: The magic tool is way better than pip ever was, but I still ran into dependency hell trying to link a legacy C library. It works, but the error messages can be cryptic.

- Docs vs. Reality: The documentation moves fast. Sometimes too fast. I found examples in forums from early 2025 that simply don’t compile anymore because the syntax for traits shifted slightly.

- The “Uncanny Valley”: Because it looks like Python, you expect it to behave like Python. When the compiler screams at you about memory lifetimes because you tried to pass a variable into a closure the wrong way, it’s jarring. You have to unlearn some bad Python habits.

Python Interop: The Bridge

The saving grace for the rough edges is the interop. You don’t have to rewrite everything. I have a massive library of data processing utilities in Pandas. Rewriting them in Mojo would take months.

So I didn’t. I just imported them.

```mojo
from python import Python

fn process_data() raises:
    # This imports standard Python modules!
    var pd = Python.import_module("pandas")
    var df = pd.read_csv("data.csv")

    # Do the slow IO stuff in Python, then convert to Mojo tensor for math
    print(df.head())
```

This works seamlessly. It’s not fast, since you’re still paying the Python tax for those lines, but it lets you migrate incrementally. You can keep your slow data loading code in Python and just rewrite the heavy compute loop in Mojo. That incremental adoption path is why I think this language actually has a shot at sticking around.
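One way to prepare for that kind of migration is to keep the I/O and the hot loop behind separate functions on the Python side, so the compute piece can be swapped later. A minimal sketch, assuming a CSV with numeric columns; load_column, sum_of_squares, and run are hypothetical names, and the in-memory sample stands in for a real file.

```python
import csv
import io


def load_column(source, column: str) -> list[float]:
    """Slow, I/O-bound part: this stays in Python."""
    reader = csv.DictReader(source)
    return [float(row[column]) for row in reader]


def sum_of_squares(values: list[float]) -> float:
    """Hot loop: flat floats in, one float out. This is the piece to port."""
    total = 0.0
    for v in values:
        total += v * v
    return total


def run(source, column: str, kernel=sum_of_squares) -> float:
    """Glue code: swap `kernel` for a compiled version without touching I/O."""
    return kernel(load_column(source, column))


# In-memory stand-in for a CSV file so the sketch runs anywhere.
sample = io.StringIO("x,y\n1.0,2.0\n3.0,4.0\n")
print(run(sample, "x"))
```

The narrower the kernel's interface (flat numbers in, numbers out), the less conversion work there is at the language boundary.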

Should You Switch?

If you are building standard CRUD apps or simple scripts, stay with Python. Mojo is overkill. You don’t need SIMD optimizations to parse a JSON file.

But if you are in AI engineering, high-frequency trading, or backend infrastructure where every millisecond costs money? You need to look at this. The ability to write high-performance systems code without the cognitive load of C++ is liberating.

I’m not deleting my Python files yet. But for the first time in years, I’m writing new high-performance modules without reaching for a C++ compiler. And that, honestly, feels pretty good.