Building a Lightweight Semantic Layer with DuckDB Python and Ibis

Introduction: The Evolution of Local Analytics

The landscape of data engineering has shifted dramatically in recent years. We are moving away from the paradigm where every byte of data, regardless of size, must be shipped to a cloud data warehouse. With the advent of powerful local hardware and optimized software, “local-first” analytics has become a viable, and often superior, strategy for datasets ranging from mere megabytes to hundreds of gigabytes. At the center of this revolution is **DuckDB Python**, an in-process SQL OLAP database management system that acts as the “SQLite for analytics.”

While tools like the **Polars dataframe** library have redefined in-memory processing speed, DuckDB offers a unique proposition: it provides a full SQL engine that runs within your Python process, capable of handling data larger than RAM through efficient disk spilling. However, raw SQL queries embedded in Python strings can quickly become unmanageable “spaghetti code.” This brings us to the concept of a semantic layer—a method to define metrics and business logic in a structured way, decoupling definitions from execution.

In this comprehensive guide, we will explore how to combine DuckDB with the **Ibis framework** to build a lightweight, portable semantic layer. We will discuss how to define metrics using configuration files (like YAML), execute them efficiently over millions of records (such as NYC Taxi data), and integrate modern Python tooling like the **Uv installer** and **Ruff linter** to maintain code quality. Whether you are interested in **algo trading**, **edge AI**, or simply automating reports, this architecture provides a robust foundation.

Section 1: The Core Stack – DuckDB, Ibis, and Modern Python

Before diving into the semantic layer, it is crucial to understand the components. DuckDB provides the engine, but interacting with it via raw SQL strings is error-prone. This is where **Ibis** comes in. Ibis is a portable Python dataframe library that allows you to write Pythonic code that compiles down to SQL. It supports multiple backends, including DuckDB, BigQuery, and Snowflake, allowing you to write your logic once and run it anywhere.

To get started, we need a modern Python environment. The Python packaging ecosystem has evolved; tools like **Rye**, **PDM**, and especially **uv** have made dependency management significantly faster.

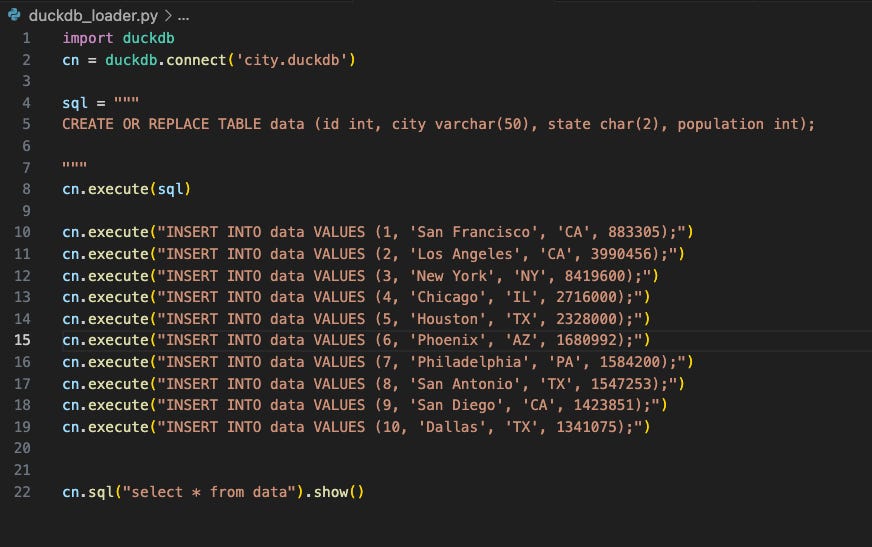

Let’s look at a basic setup where we load data into DuckDB and manipulate it using Ibis. This approach is often superior to eager **pandas** operations for larger-than-memory datasets because Ibis evaluates lazily: nothing runs until you call `execute()`.

    import ibis
    import pandas as pd
    from datetime import datetime
    from ibis import _

    # Open an in-memory DuckDB database through the Ibis DuckDB backend.
    # ibis.duckdb.connect() expects a database path (or nothing), not an
    # existing duckdb connection object; pass a file path for a persistent DB.
    conn = ibis.duckdb.connect()

    # Generate some dummy data using pandas
    # In a real scenario, this could be Parquet files, CSVs, or Arrow streams
    df = pd.DataFrame({
        'pickup_datetime': [datetime(2024, 1, 1, 10, 0, 0), datetime(2024, 1, 1, 11, 0, 0)],
        'dropoff_datetime': [datetime(2024, 1, 1, 10, 30, 0), datetime(2024, 1, 1, 11, 45, 0)],
        'passenger_count': [1, 2],
        'trip_distance': [5.5, 12.2],
        'total_amount': [25.0, 55.0]
    })

    # Load the dataframe into DuckDB and get back an Ibis table expression
    trips = conn.create_table('taxi_trips', df)

    # A Pythonic query compiling to SQL
    # We compute the trip duration in minutes and keep trips with passengers
    query = (
        trips
        .mutate(
            duration_minutes=(
                _.dropoff_datetime.epoch_seconds() - _.pickup_datetime.epoch_seconds()
            ) / 60
        )
        .filter(_.passenger_count > 0)
        .select('trip_distance', 'total_amount', 'duration_minutes')
    )

    # Execute the query; execute() returns a pandas DataFrame
    result = query.execute()
    print(result)

    # Inspect the generated SQL
    print("\nGenerated SQL:")
    print(ibis.to_sql(query))

This snippet demonstrates the power of the ecosystem. We aren’t just writing SQL; we are building expression trees. This is critical for **Python automation** and **Python finance** workflows where auditability and debugging are paramount.

Section 2: Implementing a Semantic Layer with YAML


A semantic layer serves as a translator between business language (Revenue, Churn, Active Users) and data language (SUM(amount), COUNT(DISTINCT user_id)). Hardcoding these definitions in Python scripts makes them difficult for non-engineers to read or modify. A popular pattern is to externalize these definitions into YAML files.

This approach also helps with **Python testing** and **Python security**. By isolating logic in configuration files and parsing it with a restricted grammar rather than `eval()`, you reduce the surface area for injection attacks and make it easier to validate logic without running the entire pipeline.

Below is a practical implementation of a simple semantic layer. We will define metrics in a YAML string (simulating a file) and dynamically generate Ibis expressions to query DuckDB.

    import yaml
    import ibis
    from ibis.backends import BaseBackend

    # 1. The Configuration (The Semantic Definition)
    # In a real app, this would be loaded from a 'metrics.yaml' file.
    yaml_config = """
    sources:
      - name: taxi_data
        table_name: parquet_scan('taxi_data.parquet')
    metrics:
      - name: total_revenue
        description: "Sum of all trip amounts"
        expression: "sum(total_amount)"
      - name: avg_trip_distance
        description: "Average distance per trip"
        expression: "mean(trip_distance)"
      - name: high_value_ratio
        description: "Ratio of trips over $50"
        expression: "mean(total_amount > 50)"
    """

    class SemanticLayer:
        def __init__(self, config_str: str, con: BaseBackend):
            self.config = yaml.safe_load(config_str)
            self.con = con
            self.metrics_map = {m['name']: m for m in self.config['metrics']}

        def get_metric_expression(self, table_expr, metric_name: str):
            """
            Dynamically builds an Ibis expression from the string definition.
            Note: this toy parser only understands sum(col), mean(col) and
            mean(col > number). For production, use a proper expression parser
            or map specific keywords to Ibis functions; never eval() config strings.
            """
            metric_def = self.metrics_map.get(metric_name)
            if not metric_def:
                raise ValueError(f"Metric {metric_name} not found")

            expr_str = metric_def['expression'].strip()
            # Split "sum(total_amount)" into the function name and its argument
            func, _, rest = expr_str.partition("(")
            arg = rest.rstrip(")").strip()

            if func == "sum":
                return table_expr[arg].sum().name(metric_name)
            if func == "mean":
                if ">" in arg:
                    # "mean(total_amount > 50)": share of rows above the threshold.
                    # The boolean is cast to an integer so it can be averaged.
                    col, threshold = arg.split(">")
                    flag = (table_expr[col.strip()] > float(threshold)).cast("int64")
                    return flag.mean().name(metric_name)
                return table_expr[arg].mean().name(metric_name)
            raise ValueError(f"Unsupported expression: {expr_str}")

    # Mocking the data context: an in-memory DuckDB database opened through Ibis
    ibis_con = ibis.duckdb.connect()

    # Create a dummy table to represent the parquet scan
    ibis_con.raw_sql("CREATE TABLE taxi_data (total_amount DOUBLE, trip_distance DOUBLE)")
    ibis_con.raw_sql("INSERT INTO taxi_data VALUES (10.0, 2.5), (60.0, 15.0), (25.0, 5.0)")
    table = ibis_con.table('taxi_data')

    # Initialize Layer
    layer = SemanticLayer(yaml_config, ibis_con)

    # Calculate Metrics
    metrics_to_compute = ['total_revenue', 'high_value_ratio']
    exprs = [layer.get_metric_expression(table, m) for m in metrics_to_compute]

    # Execute aggregation
    # Ibis compiles all metrics into a single aggregate query
    result = table.aggregate(exprs).execute()
    print("Semantic Layer Results:")
    print(result)

This pattern allows data analysts to modify YAML files to change business logic without touching the underlying Python code. Because the parser is ordinary Python, you can annotate it with **type hints** and check it with **MyPy**, which helps keep it robust.
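As one possible way to type the parser strictly, the sketch below validates each metric string against a small grammar and returns a frozen dataclass. The names `MetricSpec`, `parse_metric`, and `ALLOWED_FUNCS` are hypothetical, and the grammar covers only the three expression shapes used in the YAML above.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Hypothetical allowlist: only these aggregation names are accepted.
ALLOWED_FUNCS = {"sum", "mean"}

# Matches "func(column)" or "func(column > number)" and nothing else.
_PATTERN = re.compile(
    r"^\s*(\w+)\(\s*(\w+)\s*(?:>\s*(-?\d+(?:\.\d+)?)\s*)?\)\s*$"
)


@dataclass(frozen=True)
class MetricSpec:
    func: str
    column: str
    threshold: Optional[float] = None


def parse_metric(expression: str) -> MetricSpec:
    """Parse a metric string into a typed spec, rejecting anything unexpected."""
    match = _PATTERN.match(expression)
    if match is None:
        raise ValueError(f"Cannot parse metric expression: {expression!r}")
    func, column, threshold = match.groups()
    if func not in ALLOWED_FUNCS:
        raise ValueError(f"Unsupported function: {func!r}")
    return MetricSpec(
        func=func,
        column=column,
        threshold=float(threshold) if threshold is not None else None,
    )


print(parse_metric("sum(total_amount)"))
print(parse_metric("mean(total_amount > 50)"))
```

A spec like this can then be mapped to Ibis calls in one place, so malformed or unexpected config entries fail at load time instead of at query time.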

Section 3: Advanced Techniques – Interoperability and Performance

When dealing with 20M+ records, performance tuning becomes essential. While DuckDB is fast, data movement between Python and the database engine can be a bottleneck. This is where **PyArrow** shines: DuckDB can read and produce Arrow data with zero-copy transfer.

Furthermore, the Python ecosystem is undergoing a major shift with the optional removal of the **GIL** (Global Interpreter Lock): CPython 3.13 introduced an experimental free-threaded build. DuckDB already releases the GIL for heavy lifting, but CPU-bound Python code and libraries running alongside it stand to benefit from these CPython changes.

Integration with Vector Search and AI

With the rise of **Local LLM** and **Edge AI**, DuckDB is increasingly used as a vector store or a pre-processing engine for RAG (Retrieval-Augmented Generation) pipelines built with **LangChain** or **LlamaIndex**. You can use DuckDB to filter massive datasets before passing them to a transformer model.

Here is an example of advanced interoperability: using DuckDB to process data, converting it to Arrow, and passing it to a mock machine learning processing step (simulating a **Scikit-learn** or **PyTorch** workflow).

    import duckdb
    import numpy as np

    # pyarrow must be installed: DuckDB hands results back as Arrow batches

    # Simulate a large dataset creation
    # In production, this might be read via 'read_parquet'
    con = duckdb.connect()
    con.execute("CREATE TABLE sensor_data AS SELECT range AS id, random() AS value, random() * 100 AS temperature FROM range(100000)")

    def process_batch(arrow_batch):
        """
        Simulate a CPU-intensive ML task or complex validation.
        This could be a custom Rust Python extension or a NumPy operation.
        """
        # Zero-copy conversion to NumPy (possible because the column has no NULLs)
        temps = arrow_batch.column('temperature').to_numpy()

        # Vectorized operation (much faster than Python loops)
        # Simulating anomaly detection
        anomalies = temps > 95.0
        return int(np.sum(anomalies))

    # Query DuckDB and output as an Arrow RecordBatchReader
    # This avoids materializing the entire dataset in Python RAM
    arrow_reader = con.execute("SELECT * FROM sensor_data").fetch_record_batch()

    total_anomalies = 0

    # Process in chunks (streaming); the reader yields one RecordBatch at a time
    for batch in arrow_reader:
        total_anomalies += process_batch(batch)

    print(f"Total anomalies detected: {total_anomalies}")

    # Advanced: Exporting to Parquet for other tools
    # DuckDB handles this natively and efficiently
    con.execute("COPY (SELECT * FROM sensor_data WHERE temperature > 90) TO 'high_temp.parquet' (FORMAT PARQUET)")

This streaming approach is vital for **malware analysis** or **log analysis** where datasets exceed memory limits. It lets **NumPy** apply its SIMD-optimized vectorized operations to each batch while DuckDB handles the I/O.

Section 4: Best Practices and Productionizing

Building the script is only half the battle. To make your DuckDB semantic layer production-ready, you must adopt modern software engineering practices.

1. Linting and Formatting

The days of messy Python scripts are over. Enforce code quality with the **Ruff linter**, an extremely fast replacement for Flake8 that is written in Rust. Combine it with the **Black formatter** (or Ruff's own built-in formatter) to ensure consistent style.

2. Testing

Use **pytest** to validate your semantic definitions. You can write tests that load your YAML configuration, run queries against a small in-memory DuckDB instance with known data, and assert that the metrics return the expected values. This prevents regressions when business logic changes.

3. Serving the Data


Once your semantic layer computes the metrics, you often need to serve them via an API. **FastAPI** is often paired with async database drivers, but DuckDB's Python client is synchronous. Running it inside a **Litestar** or FastAPI application, with queries offloaded to a thread pool, still allows you to build high-performance data microservices.

4. Visualization

For interactive exploration, move beyond standard Jupyter notebooks. **Marimo notebooks** offer a reactive programming environment that is reproducible and Git-friendly, solving many of the hidden state issues common in data science. Alternatively, for building web UIs around your data, **Flet** and **Taipy** offer pure Python ways to create dashboards without learning JavaScript.

Below is a snippet showing how to wrap our logic in a simple API endpoint using FastAPI:

    from fastapi import FastAPI, HTTPException
    from pydantic import BaseModel
    import duckdb

    app = FastAPI(title="DuckDB Semantic Layer API")

    # Initialize DB once at startup. An in-memory database is always writable;
    # when serving from a database file, pass read_only=True to duckdb.connect().
    con = duckdb.connect(database=':memory:')
    con.execute("CREATE TABLE sales (id INT, amount DOUBLE, region VARCHAR)")
    con.execute("INSERT INTO sales VALUES (1, 100.0, 'US'), (2, 150.0, 'EU'), (3, 200.0, 'US')")

    class QueryRequest(BaseModel):
        region: str
        min_amount: float

    @app.post("/query-metrics")
    def get_metrics(q: QueryRequest):
        """
        Endpoint to retrieve aggregated metrics based on filters.
        Declared with plain `def` so FastAPI runs the blocking DuckDB call
        in its thread pool instead of on the event loop.
        """
        try:
            # Parameterized queries prevent SQL injection: CRITICAL for security
            query = """
                SELECT
                    count(*) as tx_count,
                    sum(amount) as total_vol
                FROM sales
                WHERE region = ? AND amount > ?
            """
            # DuckDB's Python client handles parameter binding safely.
            # Each request uses its own cursor, since a single connection
            # object should not be shared across threads.
            result = con.cursor().execute(query, [q.region, q.min_amount]).fetchall()
            return {
                "region": q.region,
                "transaction_count": result[0][0],
                "total_volume": result[0][1]
            }
        except Exception as e:
            raise HTTPException(status_code=500, detail=str(e))

    # To run: uvicorn main:app --reload

Conclusion

The combination of **DuckDB Python**, the **Ibis framework**, and structured configuration via YAML offers a powerful paradigm for modern data engineering. It allows developers to build a “semantic layer” that is lightweight, runs locally or in the cloud, and scales effortlessly to millions of rows without the overhead of enterprise data warehouses.

By integrating this stack with modern tooling like **Uv**, **Ruff**, and **PyArrow**, you ensure your data pipelines are not only fast but also maintainable and robust. Whether you are performing **algo trading** analysis, preparing data for quantum computing experiments with **Qiskit**, or simply summarizing business metrics, this architecture provides the flexibility and performance required for 2024 and beyond.

As the Python ecosystem continues to evolve with **free threading** and Rust-based Python extensions, tools like DuckDB will only become more integral to the high-performance data stack. Start building your semantic layer today, and experience the efficiency of local-first analytics.