Mastering Modern Keras: Multi-Backend Workflows and Ecosystem Integration

Introduction: The Evolution of Deep Learning Frameworks

The landscape of deep learning has undergone a seismic shift in recent years. While the early days were defined by the rivalry between static graphs and eager execution, the modern era is characterized by interoperability and ecosystem maturity. At the forefront of this evolution is the reimagining of Keras. No longer strictly bound to TensorFlow, modern Keras (specifically Keras 3.0 and beyond) has emerged as a multi-backend high-level API that unifies the strengths of JAX, PyTorch, and TensorFlow.

For data scientists and machine learning engineers, this update is transformative. It brings the “write once, run anywhere” paradigm to deep learning model architectures. You can debug your model using the intuitive stack traces of PyTorch, train it using the massive parallelization capabilities of JAX, and deploy it using the robust serving infrastructure of TensorFlow. This flexibility matters as the Python ecosystem continues to specialize, with projects such as Mojo and Rust-backed Python extensions reshaping performance expectations.

In this guide, we will explore the technical details of these Keras updates. We will cover backend-agnostic coding, data pipelines built on modern tools like Polars, and deployment strategies involving FastAPI and Edge AI considerations. We will also touch on how these updates fit into the broader Python landscape, including LangChain for LLM orchestration and code quality standards enforced by the Ruff linter.

Section 1: Core Concepts of Multi-Backend Keras

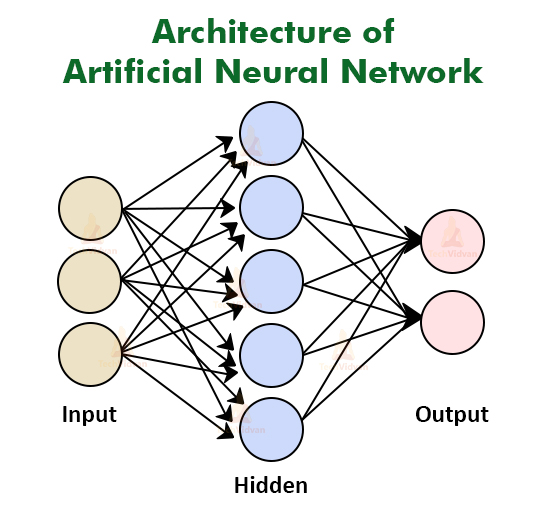

The most significant technical update in the Keras ecosystem is the decoupling of the API from the execution engine. In the TensorFlow 2 era, Keras was effectively synonymous with tf.keras. Now, Keras is a standalone library that delegates computation to a backend of your choice. This is achieved through the keras.ops namespace, which provides a NumPy-like interface for mathematical operations and dispatches each call to the corresponding tensor operation of the active backend.

The Backend Switch

Switching backends requires minimal configuration. It is typically handled via an environment variable or a configuration file before the library is imported. This flexibility is vital for benchmarking. For instance, you might want to test whether PyTorch's torch.compile offers better training throughput than XLA under JAX for your specific model topology.
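Because the backend is read when Keras is imported, it can help to guard the selection step. The following is a minimal sketch, not part of Keras itself: `select_backend` and `SUPPORTED_BACKENDS` are hypothetical names, and the set of valid values is taken from the three backends this article discusses.

```python
import os
import sys

# Backend names taken from this article; treat the set as an assumption
SUPPORTED_BACKENDS = {"jax", "tensorflow", "torch"}


def select_backend(name: str) -> str:
    """Set KERAS_BACKEND, refusing to do so once keras is already imported."""
    if name not in SUPPORTED_BACKENDS:
        raise ValueError(
            f"Unknown backend {name!r}; expected one of {sorted(SUPPORTED_BACKENDS)}"
        )
    if "keras" in sys.modules:
        # The variable is read at import time, so changing it now has no effect
        raise RuntimeError("keras is already imported; set the backend earlier")
    os.environ["KERAS_BACKEND"] = name
    return name


select_backend("jax")
print(os.environ["KERAS_BACKEND"])
```

Calling a helper like this at the very top of an entry-point script makes a late or misspelled backend choice fail loudly instead of silently falling back to whatever backend was already configured.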

Here is how you structure a modern Keras script to remain backend-agnostic:

```python
import os

# Select backend: 'jax', 'tensorflow', or 'torch'
# This must be done before importing keras
os.environ["KERAS_BACKEND"] = "jax"

import keras
import keras.ops as ops


# A simple custom layer using backend-agnostic operations
class MyDense(keras.layers.Layer):
    def __init__(self, units, activation=None, **kwargs):
        super().__init__(**kwargs)
        self.units = units
        self.activation = keras.activations.get(activation)

    def build(self, input_shape):
        # Initialize weights using a backend-agnostic initializer
        self.kernel = self.add_weight(
            shape=(input_shape[-1], self.units),
            initializer="glorot_uniform",
            trainable=True,
            name="kernel",
        )
        self.bias = self.add_weight(
            shape=(self.units,),
            initializer="zeros",
            trainable=True,
            name="bias",
        )

    def call(self, inputs):
        # Use keras.ops instead of tf.matmul or torch.matmul
        output = ops.matmul(inputs, self.kernel) + self.bias
        if self.activation:
            output = self.activation(output)
        return output


# Define a model using the functional API
inputs = keras.Input(shape=(784,))
x = MyDense(64, activation="relu")(inputs)
x = MyDense(64, activation="relu")(x)
outputs = MyDense(10)(x)

model = keras.Model(inputs=inputs, outputs=outputs)
model.summary()
```

In the example above, the MyDense layer uses keras.ops.matmul. If the backend is set to JAX, the call is dispatched to jax.numpy.matmul. If switched to PyTorch, it uses torch.matmul. This abstraction allows developers to maintain a single codebase while still benefiting from backend-specific strengths, such as PyTorch's dynamic graphs or TensorFlow's TFX ecosystem.

Data Loading and Compatibility

Modern Keras pipelines must often interact with diverse data sources. While tf.data remains a powerful tool, the ecosystem has expanded, and data preparation is increasingly moving towards high-performance libraries. Recent pandas releases have improved performance, but the Polars dataframe library (written in Rust) and DuckDB's Python integration allow very fast preprocessing before data ever reaches the GPU.

When working with Keras, you can feed data in the form of NumPy arrays, TensorFlow tensors, PyTorch DataLoaders, or JAX arrays. This interoperability means that if you use scikit-learn for feature engineering, its NumPy output can be passed straight to the neural network.

Section 2: Implementation Details and Modern Workflows

Implementing a robust deep learning system goes beyond model definition. It involves environment management, data ingestion, and rigorous testing. The Python tooling landscape has matured significantly in this regard.

Environment Management and Build Tools

Managing dependencies for deep learning is notoriously difficult due to CUDA version mismatches and backend conflicts. Many Python developers are moving away from plain pip/venv setups toward more robust managers. uv and Rye are gaining traction for their speed and strict dependency locking. Similarly, Hatch and PDM offer modern, PEP 621 compliant project management.

Checking dependencies for known vulnerabilities and guarding against unsafe PyPI packages is now a standard part of the CI/CD pipeline, often complemented by static analysis tools such as SonarLint.

Advanced Data Preprocessing with Polars

Let’s look at a practical example of preparing data for a Keras model using Polars, which is often significantly faster than pandas on large datasets. This matters especially in algorithmic trading and other finance applications, where time-series data can be massive.

```python
import polars as pl
import keras


def load_and_prep_data(csv_path):
    # Lazy evaluation with Polars for memory efficiency
    df = (
        pl.scan_csv(csv_path)
        .filter(pl.col("value") > 0)
        .with_columns(
            # Parenthesize the whole expression so alias() names the result,
            # not just the std() term
            (
                (pl.col("value") - pl.col("value").mean())
                / pl.col("value").std()
            ).alias("normalized_value")
        )
        .collect()
    )

    # Convert to numpy for Keras consumption
    # Keras 3 handles numpy arrays seamlessly across all backends
    features = df.select(["feature_1", "feature_2", "normalized_value"]).to_numpy()
    targets = df.select("target").to_numpy()
    return features, targets


# Mocking the training process
# In a real scenario, the CSV might come from a Scrapy crawl
# or be replaced by a SQL backend queried through Ibis.
features, targets = load_and_prep_data("financial_data.csv")

model = keras.Sequential([
    keras.layers.Dense(32, activation="relu"),
    keras.layers.Dense(1),
])
model.compile(optimizer="adam", loss="mse")

# The fit method works regardless of whether the backend is JAX, TF, or Torch
model.fit(features, targets, batch_size=32, epochs=5)
```

This workflow pairs high-performance CPU preprocessing with deep learning. With tools like Polars or the Ibis framework (which provides a portable Python dataframe API), developers can keep the data loading stage from becoming the bottleneck.
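A quick way to sanity-check the normalization step is to reproduce it on a few numbers. The sketch below uses only the standard library and a made-up sample; it assumes the sample standard deviation (n - 1 in the denominator), which is also the default for `std()` in Polars. The helper name `z_score` is hypothetical.

```python
import statistics


def z_score(values):
    """Standardize values using the sample standard deviation (ddof=1)."""
    mean = statistics.mean(values)
    std = statistics.stdev(values)  # sample std: n - 1 in the denominator
    return [(v - mean) / std for v in values]


raw = [2.0, 4.0, 6.0, 8.0]  # made-up values for illustration
normalized = z_score(raw)

# After standardization the mean is ~0 and the sample std is ~1
print(statistics.mean(normalized), statistics.stdev(normalized))
```

If the normalized column in a real pipeline does not show a mean near 0 and a standard deviation near 1, the expression or its alias is probably not doing what was intended.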

Section 3: Advanced Techniques: GenAI and LLMs

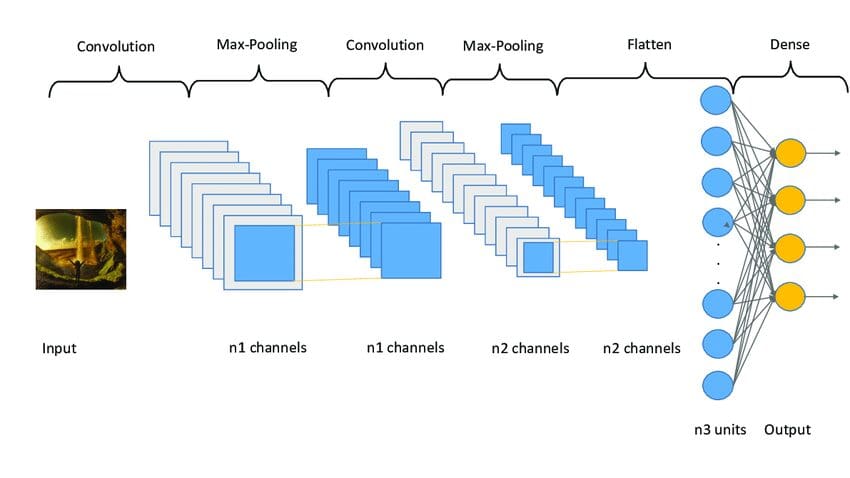

No account of recent Keras updates is complete without addressing the Generative AI revolution. Keras has responded with KerasNLP and KerasCV, libraries designed to make modular components of Large Language Models (LLMs) and Vision Transformers accessible.

Local LLMs and Custom Architectures

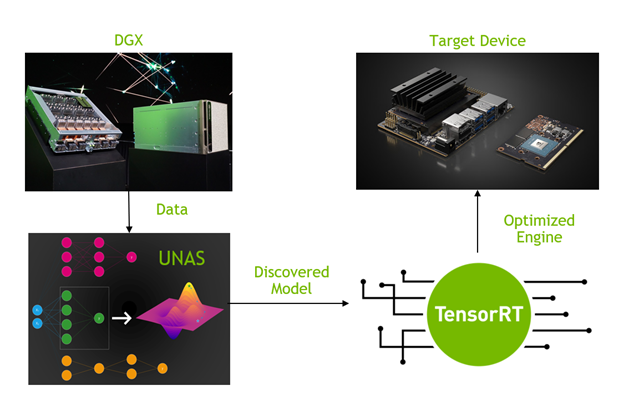

With the rise of local LLM deployment and growing privacy concerns, running smaller, optimized models on edge devices is a priority. Keras 3 supports this by letting developers load pretrained weights for major architectures (such as Llama or BERT) and fine-tune them efficiently with LoRA (Low-Rank Adaptation).
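The efficiency of LoRA comes from simple arithmetic: instead of updating a full d_in x d_out weight matrix, it trains two low-rank factors of shapes d_in x r and r x d_out. The sketch below counts trainable parameters for a single matrix; the layer size and rank are hypothetical values chosen for illustration, and `lora_param_counts` is not a Keras function.

```python
def lora_param_counts(d_in: int, d_out: int, rank: int):
    """Return (full, lora) trainable-parameter counts for one weight matrix."""
    full = d_in * d_out  # updating W directly
    lora = rank * (d_in + d_out)  # updating A (d_in x rank) and B (rank x d_out)
    return full, lora


# Hypothetical projection layer: 4096 x 4096 with rank-8 adapters
full, lora = lora_param_counts(d_in=4096, d_out=4096, rank=8)
print(full, lora, f"{lora / full:.2%}")  # prints: 16777216 65536 0.39%
```

For this example, the adapters hold well under one percent of the parameters of the matrix they modify, which is what makes fine-tuning feasible on modest hardware.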

Furthermore, Keras models can be wired into larger agentic workflows built with LangChain or LlamaIndex. Here is how you might implement a custom training step for a generative task, with type hints that tools such as MyPy can check.

```python
import os

# This train_step uses tf.GradientTape, so it needs the TensorFlow backend
os.environ["KERAS_BACKEND"] = "tensorflow"

from typing import Any, Dict

import keras
import keras.ops as ops
import tensorflow as tf


class GenerativeTrainer(keras.Model):
    def __init__(self, generator: keras.Model, discriminator: keras.Model, **kwargs: Any):
        super().__init__(**kwargs)
        self.generator = generator
        self.discriminator = discriminator
        # Explicit seed generator for the random ops used in train_step
        self.seed_generator = keras.random.SeedGenerator(42)
        self.gen_loss_tracker = keras.metrics.Mean(name="gen_loss")
        self.disc_loss_tracker = keras.metrics.Mean(name="disc_loss")

    @property
    def metrics(self):
        return [self.gen_loss_tracker, self.disc_loss_tracker]

    def compile(self, d_optimizer, g_optimizer, loss_fn):
        super().compile()
        self.d_optimizer = d_optimizer
        self.g_optimizer = g_optimizer
        self.loss_fn = loss_fn

    def train_step(self, data) -> Dict[str, Any]:
        # Unpack data
        real_images = data
        batch_size = ops.shape(real_images)[0]

        # Train Discriminator
        random_latent_vectors = keras.random.normal(
            shape=(batch_size, 128), seed=self.seed_generator
        )
        generated_images = self.generator(random_latent_vectors)
        combined_images = ops.concatenate([generated_images, real_images], axis=0)
        # Label convention: 1 = generated, 0 = real
        labels = ops.concatenate(
            [ops.ones((batch_size, 1)), ops.zeros((batch_size, 1))], axis=0
        )

        with tf.GradientTape() as tape:
            predictions = self.discriminator(combined_images)
            d_loss = self.loss_fn(labels, predictions)
        grads = tape.gradient(d_loss, self.discriminator.trainable_weights)
        self.d_optimizer.apply_gradients(
            zip(grads, self.discriminator.trainable_weights)
        )

        # Train Generator: label generated samples as "real" (0) to fool the discriminator
        random_latent_vectors = keras.random.normal(
            shape=(batch_size, 128), seed=self.seed_generator
        )
        misleading_labels = ops.zeros((batch_size, 1))

        with tf.GradientTape() as tape:
            predictions = self.discriminator(self.generator(random_latent_vectors))
            g_loss = self.loss_fn(misleading_labels, predictions)
        grads = tape.gradient(g_loss, self.generator.trainable_weights)
        self.g_optimizer.apply_gradients(zip(grads, self.generator.trainable_weights))

        # Update metrics
        self.gen_loss_tracker.update_state(g_loss)
        self.disc_loss_tracker.update_state(d_loss)
        return {
            "g_loss": self.gen_loss_tracker.result(),
            "d_loss": self.disc_loss_tracker.result(),
        }
```

This code shows both the reach and the limits of the unified API. The sampling and tensor manipulation use keras.random and keras.ops, which are backend-agnostic. The gradient computation is not: Keras 3 has no keras.GradientTape, so the version above uses tf.GradientTape and requires the TensorFlow backend. Running the same GAN on JAX or PyTorch means rewriting train_step with that backend's own differentiation mechanism (a stateless, functional step for JAX, or loss.backward() for PyTorch), while the model definitions and the rest of the class stay unchanged.

Section 4: Best Practices, Optimization, and Security

As we move towards production, considerations shift from API flexibility to performance, security, and maintainability.

Performance Optimization: GIL and Compilation

Python’s Global Interpreter Lock (GIL) has long been a bottleneck for multi-threaded workloads. With free-threaded builds arriving through PEP 703 (experimental as of Python 3.13), Keras workflows that rely heavily on Python-side data processing stand to benefit. Until then, JIT compilation is the main lever. JAX compiles through XLA, and PyTorch provides torch.compile. Keras 3 uses the active backend's compilation path when model.compile(jit_compile=True) is set.
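When comparing compiled and uncompiled runs, keep in mind that the first call to a JIT-compiled function typically includes tracing and compilation time. The following is a minimal timing sketch that excludes warm-up calls; `benchmark` is a hypothetical helper, and the lambda is a stand-in for a real workload such as a wrapped prediction call.

```python
import time


def benchmark(fn, *args, warmup: int = 1, repeats: int = 5) -> float:
    """Return mean wall-clock seconds per call, excluding warm-up calls."""
    for _ in range(warmup):
        fn(*args)  # first calls may include tracing or compilation time
    start = time.perf_counter()
    for _ in range(repeats):
        fn(*args)
    return (time.perf_counter() - start) / repeats


# Stand-in workload; in practice pass a callable that runs your model
calls = []
mean_seconds = benchmark(lambda: calls.append(sum(range(10_000))), warmup=2, repeats=3)
print(f"{mean_seconds:.6f} s per call over 3 timed runs")
```

On accelerators, execution is often asynchronous, so the timed callable should force the result to materialize (for example by converting it to a NumPy array) before returning; otherwise the measurement may only capture dispatch time.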

Code Quality and Security

With supply-chain attacks on the Python ecosystem a real concern, ensuring your ML code is safe is paramount. This includes guarding against malicious PyPI packages and making sure model deserialization is safe (e.g., using safetensors where possible). For code quality, the Ruff linter and Black formatter have become industry standards. They help keep the complex logic within your Keras custom layers readable and compliant with PEP 8.

Furthermore, testing ML code is critical. Pytest, combined with tolerance-based tensor comparisons, makes it practical to write regression tests for model outputs and convergence.
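As an illustration of tolerance-based testing, the sketch below uses NumPy's `assert_allclose` inside plain pytest-style test functions. The `softmax` function is a NumPy stand-in for a model component; with a Keras model, you would first convert outputs to NumPy (for example with keras.ops.convert_to_numpy) and compare them the same way.

```python
import numpy as np


def softmax(logits: np.ndarray) -> np.ndarray:
    """Numerically stable softmax over the last axis."""
    shifted = logits - logits.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)


def test_softmax_matches_reference():
    logits = np.array([[0.0, 0.0], [1.0, 3.0]])
    expected = np.array([[0.5, 0.5], [0.11920292, 0.88079708]])
    # Compare within a relative tolerance rather than exact equality
    np.testing.assert_allclose(softmax(logits), expected, rtol=1e-6)


def test_softmax_rows_sum_to_one():
    # Large logits check that the implementation does not overflow
    logits = np.array([[10.0, -2.0, 0.5], [1000.0, 1000.0, 1000.0]])
    np.testing.assert_allclose(softmax(logits).sum(axis=-1), 1.0, rtol=1e-6)
```

Pytest collects functions named `test_*` automatically, so saving this in a file such as `test_softmax.py` (a hypothetical name) and running `pytest` is enough to execute both checks.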

Deployment with Modern Web Frameworks

Once a Keras model is trained, it often needs to be exposed via an API. While Flask was long the standard, FastAPI has become a common default thanks to its async capabilities and automatic documentation. For more complex, full-stack Python web apps, Litestar and Django (with its async support) provide robust alternatives. For internal tooling, Reflex, Flet, and PyScript allow data scientists to build interactive dashboards for their models without writing JavaScript.

```python
# Example: Serving a Keras model with FastAPI
# Requires: pip install fastapi uvicorn
from fastapi import FastAPI
from pydantic import BaseModel
import keras
import numpy as np

app = FastAPI()

# Load model once at startup
# In production, consider ONNX runtime for faster inference
model = keras.models.load_model("my_model.keras")


class InferenceRequest(BaseModel):
    data: list[float]


# A plain "def" endpoint runs in FastAPI's thread pool, so the blocking
# model.predict call does not stall the event loop
@app.post("/predict")
def predict(request: InferenceRequest):
    # Convert list to numpy array with correct shape
    input_data = np.array(request.data, dtype="float32").reshape(1, -1)

    # Run inference
    prediction = model.predict(input_data, verbose=0)
    return {"prediction": float(prediction[0][0])}

# To run: uvicorn main:app --reload
```

Conclusion

The updates to Keras represent a maturity milestone for the Python deep learning ecosystem. By decoupling the API from the backend, Keras has positioned itself as a universal interface for deep learning, capable of adapting to rapid changes in PyTorch, JAX, and TensorFlow. Whether you are exploring quantum computing with Qiskit, applying deep learning to malware analysis, or gathering data with automation tools such as Playwright and Selenium, Keras can provide the modeling layer that ties it all together.

To stay ahead, developers should focus on mastering the keras.ops API, integrating modern data tools like Polars and PyArrow, and adopting rigorous engineering practices with tools like Ruff and Rye. The future of AI is backend-agnostic, and Keras is leading the way.